Problem Statement

I’ve spent the last year building AI agents in enterprise environments. During this time, I’ve extensively applied emerging standards like Model Context Protocol (MCP) from Anthropic and the more recent Agent-to-Agent (A2A) Protocol for agent communication and coordination. What I’ve learned: there’s a massive gap between building a quick proof-of-concept with these protocols and deploying a production-grade system. The concerns that get overlooked in production deployments are exactly what will take you down at 3 AM:

- Multi-tenant isolation with row-level security (because one leaked document = lawsuit)

- JWT-based authentication across microservices (no shared sessions, fully stateless)

- Real-time observability of agent actions (when agents misbehave, you need to know WHY)

- Cost tracking and budgeting per user and model (because OpenAI bills compound FAST)

- Hybrid search combining BM25 and vector embeddings (keyword matching + semantic understanding)

- Graceful degradation when embeddings aren’t available (real data is messy)

- Integration testing against real databases (mocks lie to you)

Disregarding security concerns can lead to incidents like the Salesloft breach where their AI chatbot inadvertently stored authentication tokens for hundreds of services, which exposed customer data across multiple platforms. More recently in October 2025, Filevine (a billion-dollar legal AI platform) exposed 100,000+ confidential legal documents through an unauthenticated API endpoint that returned full admin tokens to their Box filesystem. No authentication required, just a simple API call. I’ve personally witnessed security issues from inadequate AuthN/AuthZ controls and cost overruns exceeding hundreds of thousands of dollars, which are preventable with proper security and budget enforcement.

The good news is that MCP and A2A protocols provide the foundation to solve these problems. Most articles treat these as competing standards but they are complementary. In this guide, I’ll show you exactly how to combine MCP and A2A to build a system that handles real production concerns: multi-tenancy, authentication, cost control, and observability.

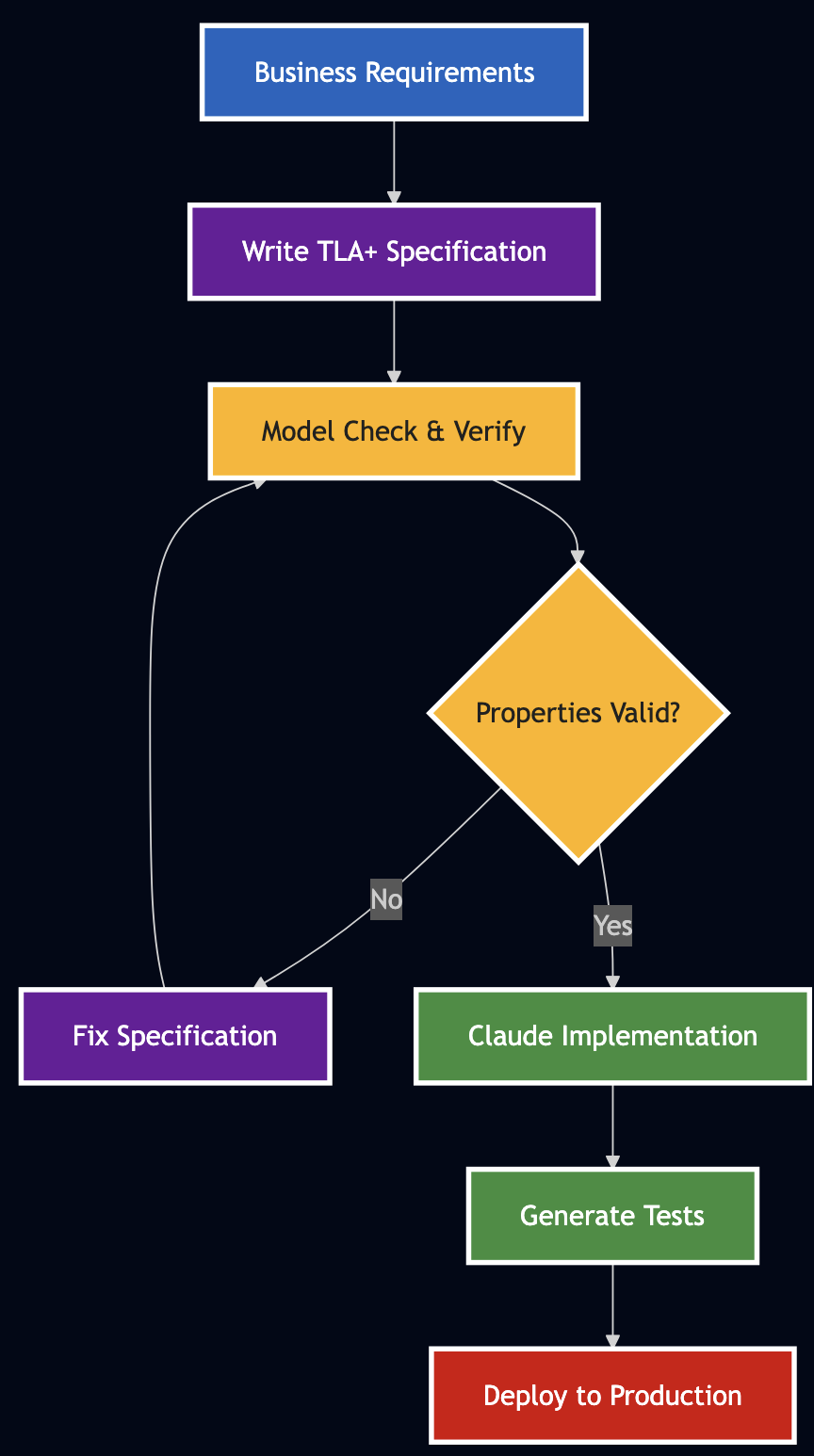

Reference Implementation

To demonstrate these concepts in action, I’ve built a reference implementation that showcases production-ready patterns.

Architecture Philosophy:

Three principles guided every decision:

- Go for servers, Python for workflows – Use the right tool for each job. Go handles high-throughput protocol servers. Python handles AI workflows.

- Database-level security – Multi-tenancy enforced via PostgreSQL row-level security (RLS), not application code. Impossible to bypass accidentally.

- Stateless everything – Every service can scale horizontally. No sticky sessions, no shared state, no single points of failure.

All containerized, fully tested, and ready for production deployment.

Tech Stack Summary:

- Go 1.22 (protocol servers)

- Python 3.11 (AI workflows)

- PostgreSQL 16 + pgvector (vector search with RLS)

- Ollama (local LLM)

- Docker Compose (local development)

- Kubernetes manifests (production deployment)

GitHub: Complete implementation available

But before we dive into the implementation, let’s understand the fundamental problem these protocols solve and why you need both.

Part 1: Understanding MCP and A2A

The Core Problem: Integration Chaos

Prior to MCP protocol in 2024, you had to build custom integration with LLM providers, data sources and AI frameworks. Every AI application had to reinvent authentication, data access, and orchestration, which doesn’t scale. MCP and A2A emerged to solve different aspects of this chaos:

The MCP Side: Standardized Tool Execution

Think of MCP as a standardized toolbox for AI models. Instead of every AI application writing custom integrations for databases, APIs, and file systems, MCP provides a JSON-RPC 2.0 protocol that models use to:

- Call tools (search documents, retrieve data, update records)

- Access resources (files, databases, APIs)

- Send prompts (inject context into model calls)

From the MCP vs A2A comparison:

“MCP excels at synchronous, stateless tool execution. It’s perfect when you need an AI model to retrieve information, execute a function, and return results immediately.”

Here’s what MCP looks like in practice:

{

"jsonrpc": "2.0",

"id": 1,

"method": "tools/call",

"params": {

"name": "hybrid_search",

"arguments": {

"query": "machine learning best practices",

"limit": 5,

"bm25_weight": 0.5,

"vector_weight": 0.5

}

}

}

The server executes the tool and returns results. Simple, stateless, fast.

Why JSON-RPC 2.0? Because it’s:

- Language-agnostic – Works with any language that speaks HTTP

- Batch-capable – Multiple requests in one HTTP call

- Error-standardized – Consistent error codes across implementations

- Widely adopted – 20+ years of production battle-testing

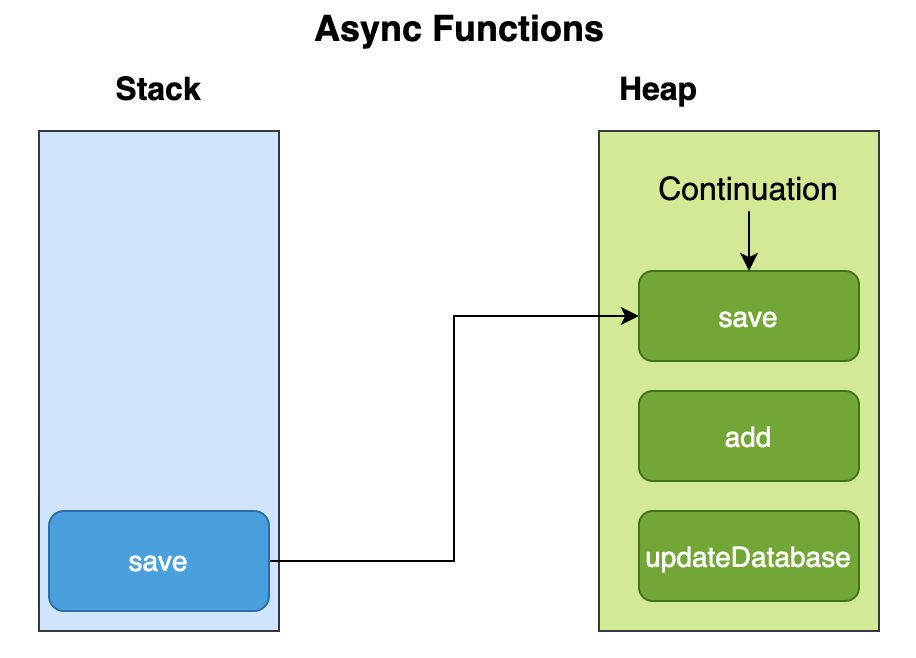

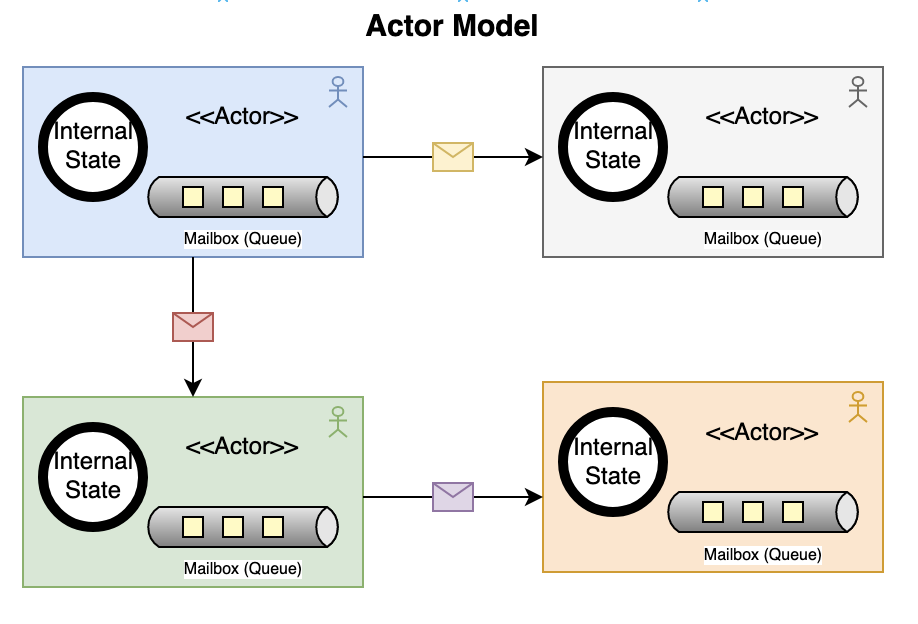

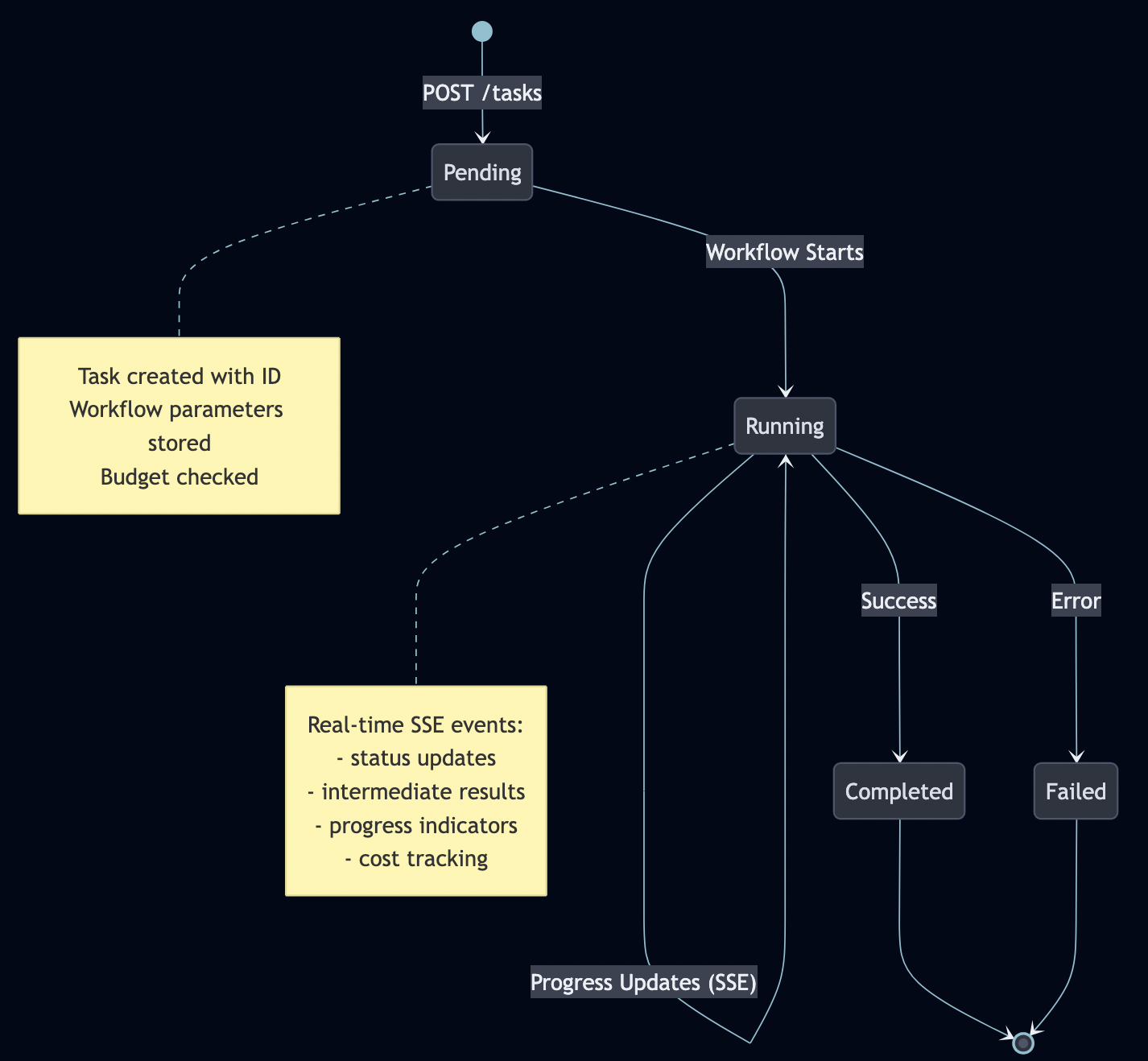

The A2A Side: Stateful Workflow Orchestration

A2A handles what MCP doesn’t: multi-step, stateful workflows where agents collaborate. From the A2A Protocol docs:

“A2A is designed for asynchronous, stateful orchestration of complex tasks that require multiple steps, agent coordination, and long-running processes.”

A2A provides:

- Task creation and management with persistent state

- Real-time streaming of progress updates (Server-Sent Events)

- Agent coordination across multiple services

- Artifact management for intermediate results

Why Both Protocols Matter

Here’s a real scenario from my fintech work that illustrates why you need both:

Use Case: Compliance analyst needs to research a company across 10,000 documents, verify regulatory compliance, cross-reference with SEC filings, and generate an audit-ready report.

With MCP alone:

- ? No way to track multi-step progress

- ? Can’t coordinate multiple tools

- ? No intermediate result storage

- ? Client must orchestrate everything

With A2A alone:

- ? Every tool is custom-integrated

- ? No standardized data access

- ? Reinventing authentication per tool

- ? Coupling agent logic to data sources

With MCP + A2A:

- ? A2A orchestrates the multi-step workflow

- ? MCP provides standardized tool execution

- ? Real-time progress via SSE

- ? Stateful coordination with stateless tools

- ? Authentication handled once (JWT in MCP)

- ? Intermediate results stored as artifacts

As noted in OneReach’s guide:

“Use MCP when you need fast, stateless tool execution. Use A2A when you need complex, stateful orchestration. Use both when building production systems.”

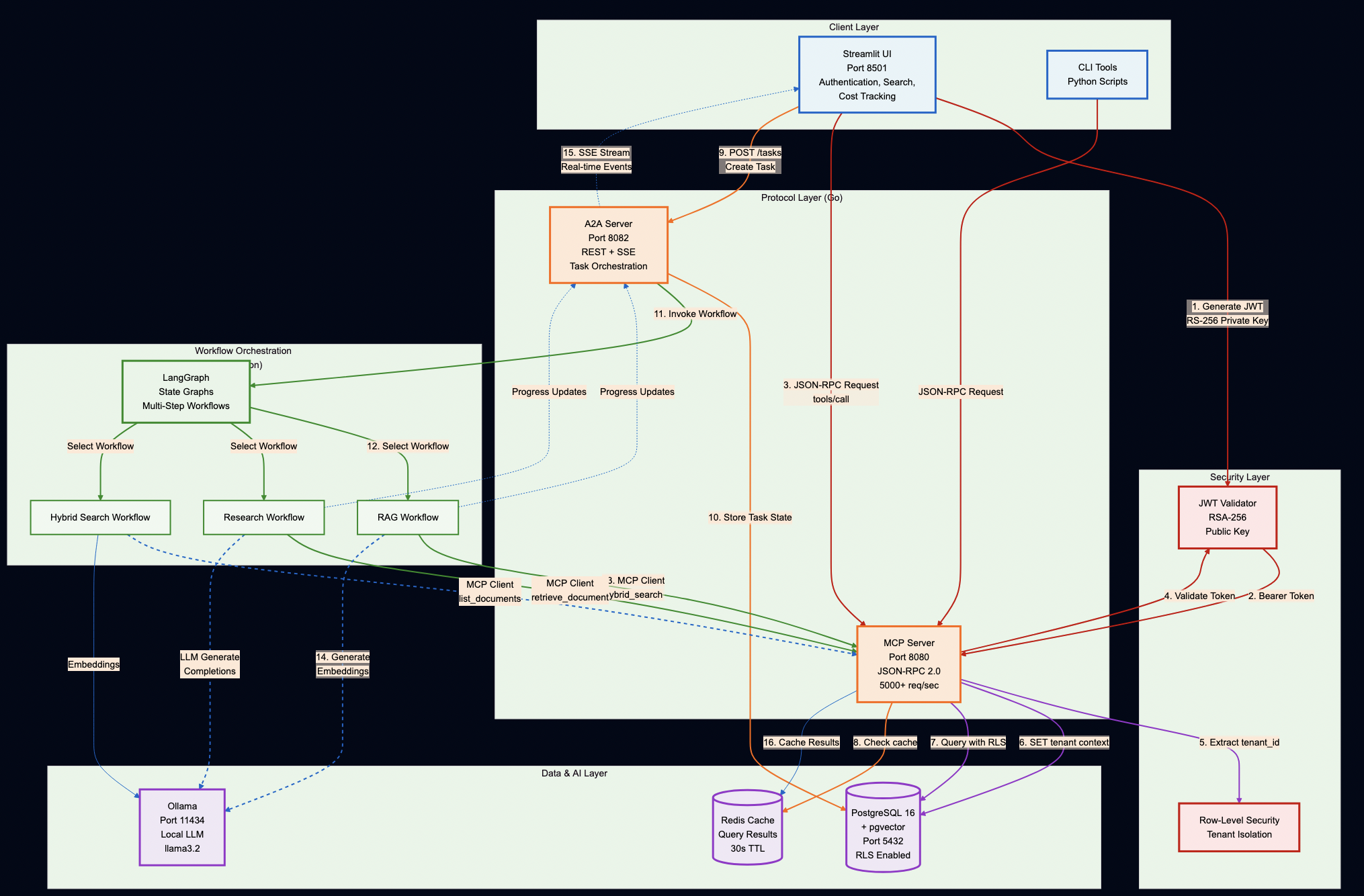

Part 2: Architecture

System Overview

Key Design Decisions

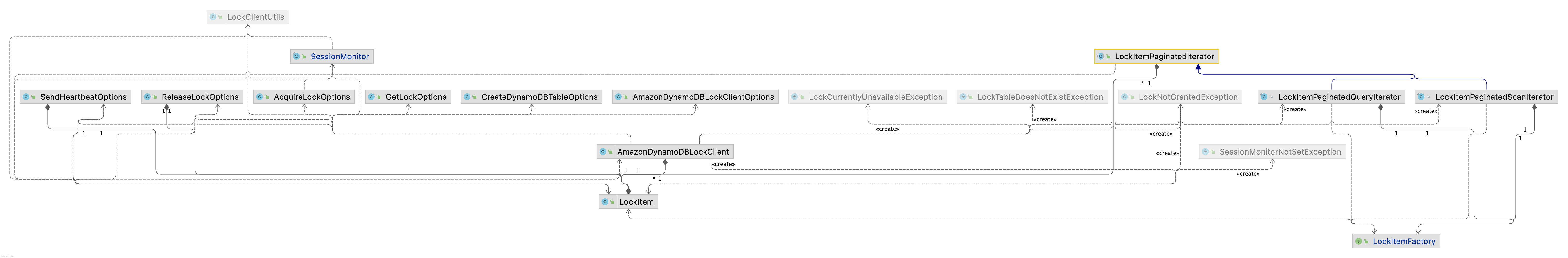

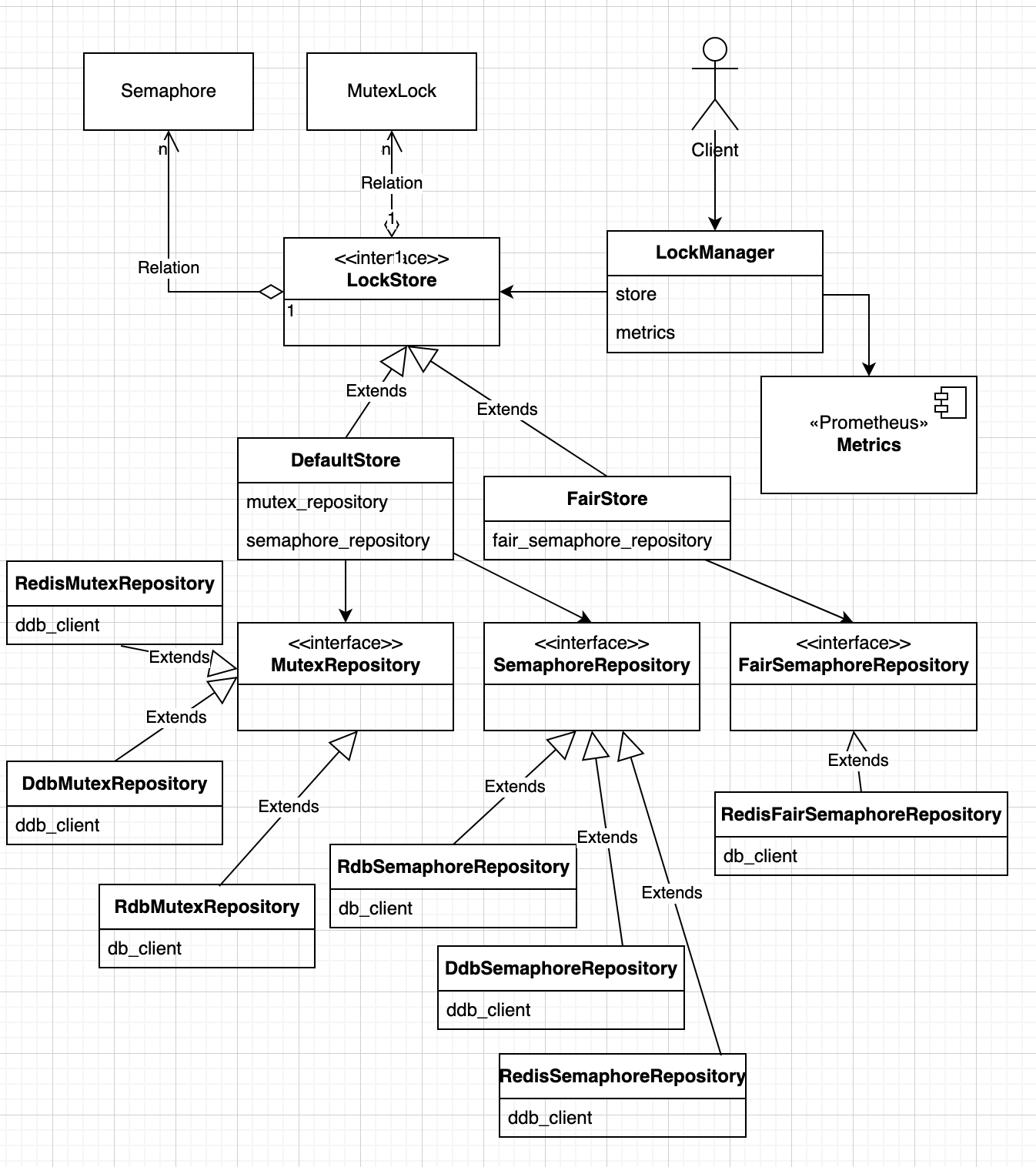

Protocol Servers (Go):

- MCP Server – Secure document retrieval with pgvector and hybrid search. Go’s concurrency model handles 5,000+ req/sec, and its type safety catches integration bugs at compile time (not at runtime).

- A2A Server – Multi-step workflow orchestration with Server-Sent Events for real-time progress tracking. Stateless design enables horizontal scaling.

AI Workflows (Python):

- LangGraph Workflows – RAG, research, and hybrid pipelines. Python was the right choice here because the AI ecosystem (LangChain, embeddings, model integrations) lives in Python.

User Interface & Database:

- Streamlit UI – Production-ready authentication, search interface, cost tracking dashboard, and real-time task streaming

- PostgreSQL with pgvector – Multi-tenant document storage with row-level security policies enforced at the database level (not application level)

- Ollama – Local LLM inference for development and testing (no OpenAI API keys required)

Database Security:

Application-level tenant filtering for database is not enough so row-level security policies are enforced:

// ? BAD: Application-level filtering (can be bypassed)

func GetDocuments(tenantID string) ([]Document, error) {

query := "SELECT * FROM documents WHERE tenant_id = ?"

// What if someone forgets the WHERE clause?

// What if there's a SQL injection?

// What if a bug skips this check?

}

-- ? GOOD: Database-level Row-Level Security (impossible to bypass)

ALTER TABLE documents ENABLE ROW LEVEL SECURITY;

CREATE POLICY tenant_isolation ON documents

USING (tenant_id = current_setting('app.current_tenant_id')::uuid);

Every query automatically filters by tenant so there is no way to accidentally leak data. Even if your application has a bug, the database enforces isolation.

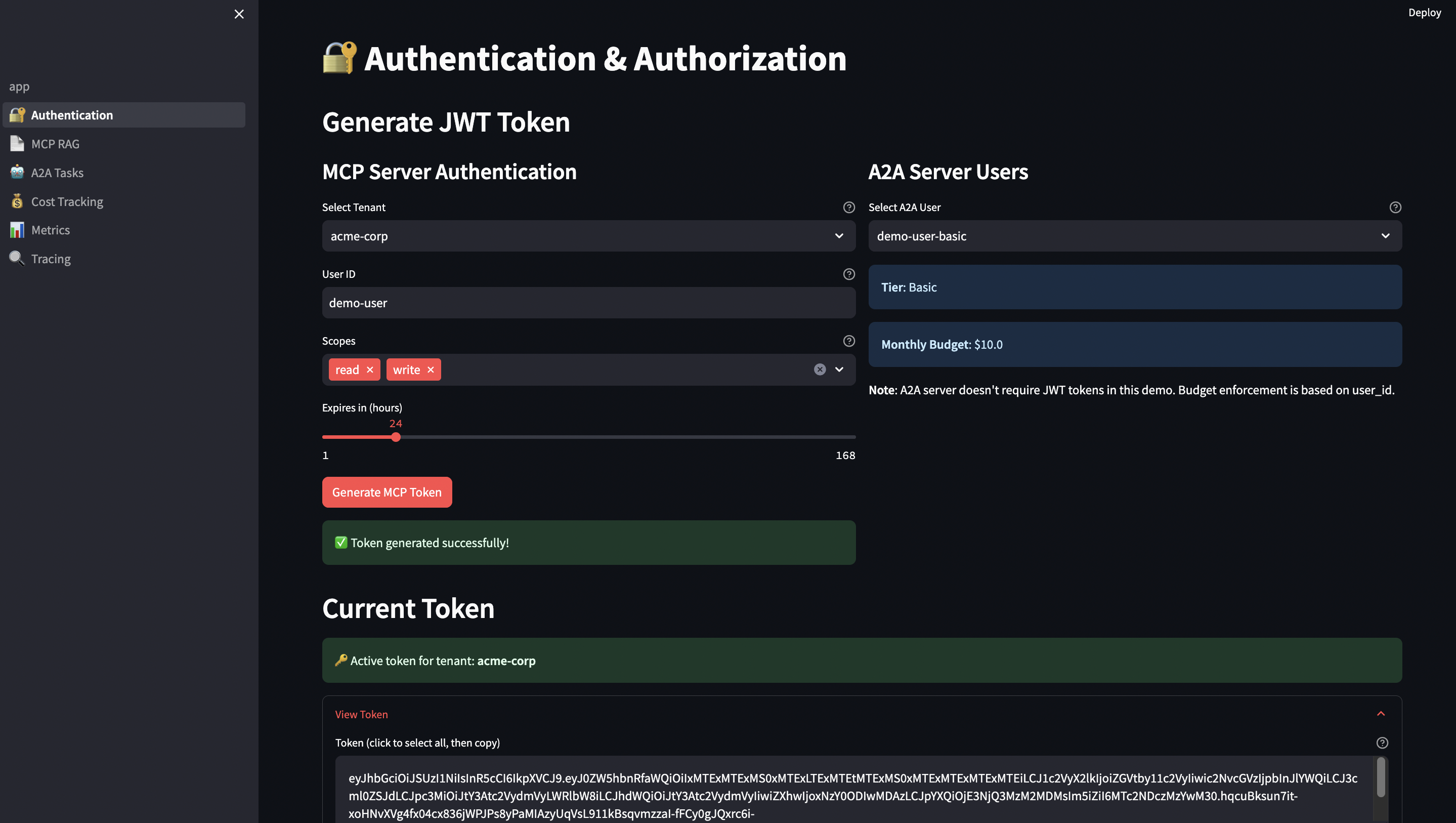

JWT Authentication

MCP server and UI share RSA keys for token verification, which provides:

- Asymmetric: MCP server only needs public key (can’t forge tokens)

- Rotation: Rotate private key without redeploying services

- Auditability: Know which key signed which token

- Standard: Widely supported, well-understood

// mcp-server/internal/auth/jwt.go

func (v *JWTValidator) ValidateToken(tokenString string) (*Claims, error) {

token, err := jwt.ParseWithClaims(tokenString, &Claims{}, func(token *jwt.Token) (interface{}, error) {

if _, ok := token.Method.(*jwt.SigningMethodRSA); !ok {

return nil, fmt.Errorf("unexpected signing method: %v", token.Header["alg"])

}

return v.publicKey, nil

})

if err != nil {

return nil, fmt.Errorf("failed to parse token: %w", err)

}

claims, ok := token.Claims.(*Claims)

if !ok || !token.Valid {

return nil, fmt.Errorf("invalid token claims")

}

return claims, nil

}

Tokens are validated on every request—no session state, fully stateless.

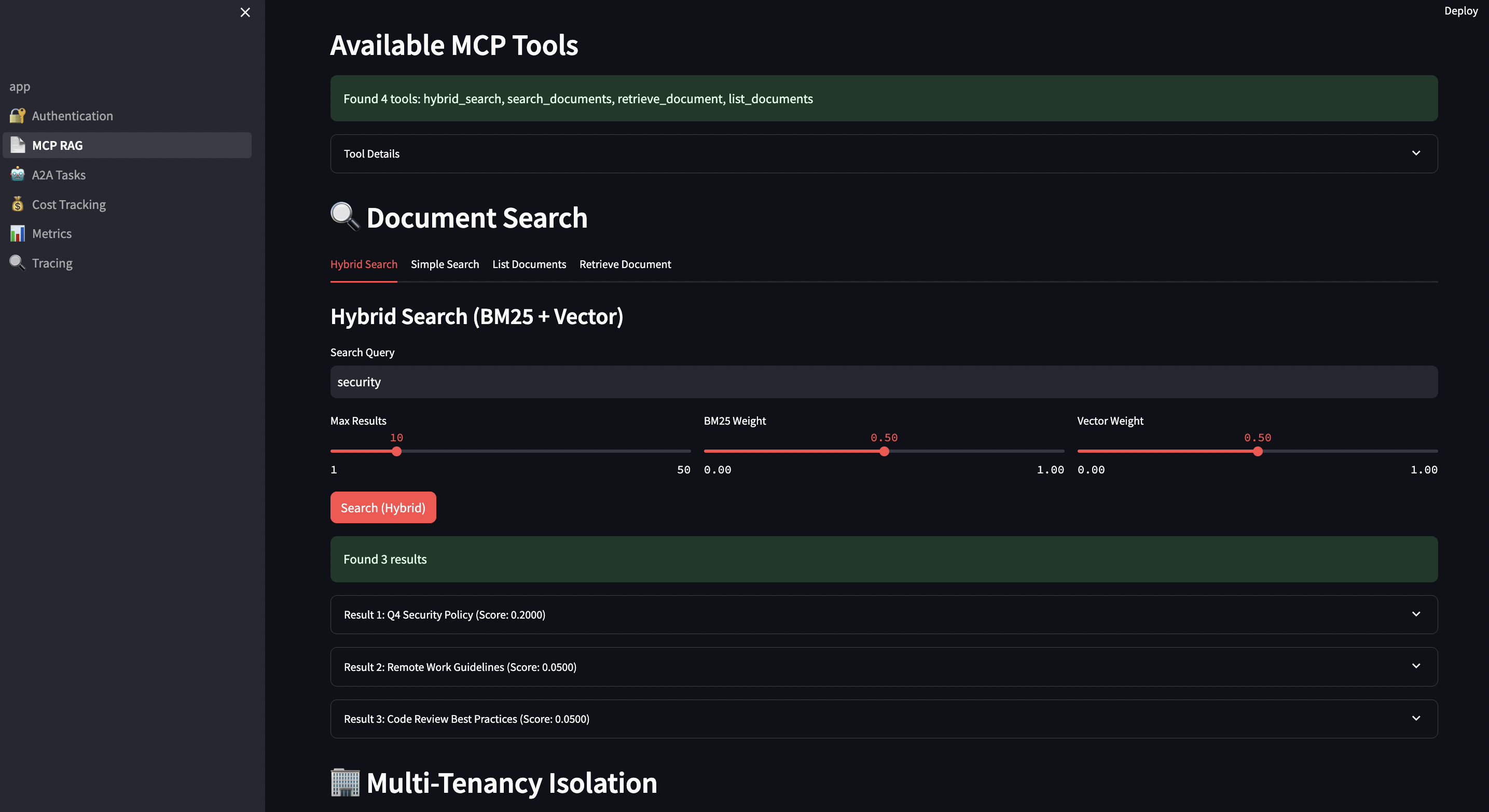

4. Hybrid Search

In some of past RAG implementation, I used Vector search alone, which is not enough for production RAG.

Why hybrid search matters:

| Scenario | BM25 (Keyword) | Vector (Semantic) | Hybrid |

|---|---|---|---|

| Exact term: “GDPR Article 17” | ? Perfect | ? Misses | ? Perfect |

| Concept: “right to be forgotten” | ? Misses | ? Good | ? Perfect |

| Legal citation: “Smith v. Jones 2024” | ? Perfect | ? Poor | ? Perfect |

| Misspelling: “machien learning” | ? Misses | ? Finds | ? Finds |

Real-world example from my fintech work:

Query: "SEC disclosure requirements GDPR data breach" Vector-only results: 1. "Privacy Policy" (0.87 similarity) 2. "Data Protection Guide" (0.84 similarity) 3. "General Security Practices" (0.81 similarity) ? Missed: Actual SEC regulation text Hybrid results (0.5 BM25 + 0.5 Vector): 1. "SEC Rule 10b-5 Disclosure Requirements" (0.92 combined) 2. "GDPR Article 33 Breach Notification" (0.89 combined) 3. "Cross-Border Regulatory Compliance" (0.85 combined) ? Found: Exactly what we needed

The reference implementation (hybrid_search.go) uses PostgreSQL’s full-text search (BM25-like) combined with pgvector:

// Hybrid search query using Reciprocal Rank Fusion

query := `

WITH bm25_results AS (

SELECT

id,

ts_rank_cd(

to_tsvector('english', title || ' ' || content),

plainto_tsquery('english', $1)

) AS bm25_score,

ROW_NUMBER() OVER (ORDER BY ts_rank_cd(...) DESC) AS bm25_rank

FROM documents

WHERE to_tsvector('english', title || ' ' || content) @@ plainto_tsquery('english', $1)

),

vector_results AS (

SELECT

id,

1 - (embedding <=> $2) AS vector_score,

ROW_NUMBER() OVER (ORDER BY embedding <=> $2) AS vector_rank

FROM documents

WHERE embedding IS NOT NULL

),

combined AS (

SELECT

COALESCE(b.id, v.id) AS id,

-- Reciprocal Rank Fusion score

(

COALESCE(1.0 / (60 + b.bm25_rank), 0) * $3 +

COALESCE(1.0 / (60 + v.vector_rank), 0) * $4

) AS combined_score

FROM bm25_results b

FULL OUTER JOIN vector_results v ON b.id = v.id

)

SELECT * FROM combined

ORDER BY combined_score DESC

LIMIT $7

`

Why Reciprocal Rank Fusion (RRF)? Because:

- Score normalization: BM25 scores and vector similarities aren’t comparable

- Rank-based: Uses position, not raw scores

- Research-backed: Used by search engines (Elasticsearch, Vespa)

- Tunable: Adjust k parameter (60 in our case) for different behaviors

Part 3: The MCP Server – Secure Document Retrieval

Understanding JSON-RPC 2.0

Before we dive into implementation, let’s understand why MCP chose JSON-RPC 2.0.

JSON-RPC 2.0 Request Structure:

{

"jsonrpc": "2.0",

"id": 1,

"method": "tools/call",

"params": {

"name": "hybrid_search",

"arguments": {"query": "machine learning", "limit": 10}

}

}

JSON-RPC 2.0 Response Structure:

{

"jsonrpc": "2.0",

"id": 1,

"result": {

"content": [{

"type": "text",

"text": "[{\"doc_id\": \"123\", \"title\": \"ML Guide\", ...}]"

}],

"isError": false

}

}

Error Response:

{

"jsonrpc": "2.0",

"id": 1,

"error": {

"code": -32602,

"message": "Invalid params",

"data": {"field": "query", "reason": "required"}

}

}

Standard Error Codes:

-32700: Parse error (invalid JSON)-32600: Invalid request (missing required fields)-32601: Method not found-32602: Invalid params-32603: Internal error

Custom MCP Error Codes:

-32001: Authentication required-32002: Authorization failed-32003: Rate limit exceeded-32004: Resource not found-32005: Validation error

MCP Tool Implementation

MCP tools follow a standard interface:

// mcp-server/internal/tools/tool.go

type Tool interface {

Definition() protocol.ToolDefinition

Execute(ctx context.Context, args map[string]interface{}) (protocol.ToolCallResult, error)

}

Here’s the complete hybrid search tool (hybrid_search.go) implementation with detailed comments:

// mcp-server/internal/tools/hybrid_search.go

type HybridSearchTool struct {

db database.Store

}

func (t *HybridSearchTool) Execute(ctx context.Context, args map[string]interface{}) (protocol.ToolCallResult, error) {

// 1. AUTHENTICATION: Extract tenant from JWT claims

// This happens at middleware level, but we verify here

tenantID, ok := ctx.Value(auth.ContextKeyTenantID).(string)

if !ok {

return protocol.ToolCallResult{IsError: true}, fmt.Errorf("tenant ID not found in context")

}

// 2. PARAMETER PARSING: Extract and validate arguments

query, _ := args["query"].(string)

if query == "" {

return protocol.ToolCallResult{IsError: true}, fmt.Errorf("query is required")

}

limit, _ := args["limit"].(float64)

if limit <= 0 {

limit = 10 // default

}

if limit > 50 {

limit = 50 // max cap

}

bm25Weight, _ := args["bm25_weight"].(float64)

vectorWeight, _ := args["vector_weight"].(float64)

// 3. WEIGHT NORMALIZATION: Ensure weights sum to 1.0

if bm25Weight == 0 && vectorWeight == 0 {

bm25Weight = 0.5

vectorWeight = 0.5

}

// 4. EMBEDDING GENERATION: Using Ollama for query embedding

var embedding []float32

if vectorWeight > 0 {

embedding = generateEmbedding(query) // Calls Ollama API

}

// 5. DATABASE QUERY: Execute hybrid search with RLS

params := database.HybridSearchParams{

Query: query,

Embedding: embedding,

Limit: int(limit),

BM25Weight: bm25Weight,

VectorWeight: vectorWeight,

}

results, err := t.db.HybridSearch(ctx, tenantID, params)

if err != nil {

return protocol.ToolCallResult{IsError: true}, err

}

// 6. RESPONSE FORMATTING: Convert to JSON for client

jsonData, _ := json.Marshal(results)

return protocol.ToolCallResult{

Content: []protocol.ContentBlock{{Type: "text", Text: string(jsonData)}},

IsError: false,

}, nil

}

The NULL Embedding Problem

Real-world data is messy. Not every document has an embedding. Here’s what happened:

Initial Implementation (Broken):

// ? This crashes with NULL embeddings

var embedding pgvector.Vector

err = tx.QueryRow(ctx, query, docID).Scan(

&doc.ID,

&doc.TenantID,

&doc.Title,

&doc.Content,

&doc.Metadata,

&embedding, // CRASH: can't scan <nil> into pgvector.Vector

&doc.CreatedAt,

&doc.UpdatedAt,

)

Error:

can't scan into dest[5]: unsupported data type: <nil>

The Fix (Correct):

// ? Use pointer types for nullable fields

var embedding *pgvector.Vector // Pointer allows NULL

err = tx.QueryRow(ctx, query, docID).Scan(

&doc.ID,

&doc.TenantID,

&doc.Title,

&doc.Content,

&doc.Metadata,

&embedding, // Can be NULL now

&doc.CreatedAt,

&doc.UpdatedAt,

)

// Handle NULL embeddings gracefully

if embedding != nil && embedding.Slice() != nil {

doc.Embedding = embedding.Slice()

} else {

doc.Embedding = nil // Explicitly set to nil

}

return doc, nil

Hybrid search handles this elegantly—documents without embeddings get vector_score = 0 but still appear in results if they match BM25:

-- Hybrid search handles NULL embeddings gracefully

WITH bm25_results AS (

SELECT id, ts_rank(to_tsvector('english', content), query) AS bm25_score

FROM documents

WHERE to_tsvector('english', content) @@ query

),

vector_results AS (

SELECT id, 1 - (embedding <=> $1) AS vector_score

FROM documents

WHERE embedding IS NOT NULL -- ? Skip NULL embeddings

)

SELECT

d.*,

COALESCE(b.bm25_score, 0) AS bm25_score,

COALESCE(v.vector_score, 0) AS vector_score,

($2 * COALESCE(b.bm25_score, 0) + $3 * COALESCE(v.vector_score, 0)) AS combined_score

FROM documents d

LEFT JOIN bm25_results b ON d.id = b.id

LEFT JOIN vector_results v ON d.id = v.id

WHERE COALESCE(b.bm25_score, 0) > 0 OR COALESCE(v.vector_score, 0) > 0

ORDER BY combined_score DESC

LIMIT $4;

Why this matters:

- ? Documents without embeddings still searchable (BM25)

- ? New documents usable immediately (embeddings generated async)

- ? System degrades gracefully (not all-or-nothing)

- ? Zero downtime for embedding model updates

Tenant Isolation in Action

Every MCP request sets the tenant context at the database transaction level:

// mcp-server/internal/database/postgres.go

func (db *DB) SetTenantContext(ctx context.Context, tx pgx.Tx, tenantID string) error {

// Note: SET commands don't support parameter binding

// TenantID is validated as UUID by JWT validator, so this is safe

query := fmt.Sprintf("SET LOCAL app.current_tenant_id = '%s'", tenantID)

_, err := tx.Exec(ctx, query)

return err

}

Combined with RLS policies, this ensures complete tenant isolation at the database level.

Real-world security test:

// Integration test: Verify tenant isolation

func TestTenantIsolation(t *testing.T) {

// Create documents for two tenants

tenant1Doc := createDocument(t, db, "tenant-1", "Secret Data A")

tenant2Doc := createDocument(t, db, "tenant-2", "Secret Data B")

// Query as tenant-1

ctx1 := contextWithTenant(ctx, "tenant-1")

results1, _ := db.ListDocuments(ctx1, "tenant-1", ListParams{Limit: 100})

// Query as tenant-2

ctx2 := contextWithTenant(ctx, "tenant-2")

results2, _ := db.ListDocuments(ctx2, "tenant-2", ListParams{Limit: 100})

// Assertions

assert.Contains(t, results1, tenant1Doc)

assert.NotContains(t, results1, tenant2Doc) // ? Cannot see other tenant

assert.Contains(t, results2, tenant2Doc)

assert.NotContains(t, results2, tenant1Doc) // ? Cannot see other tenant

}

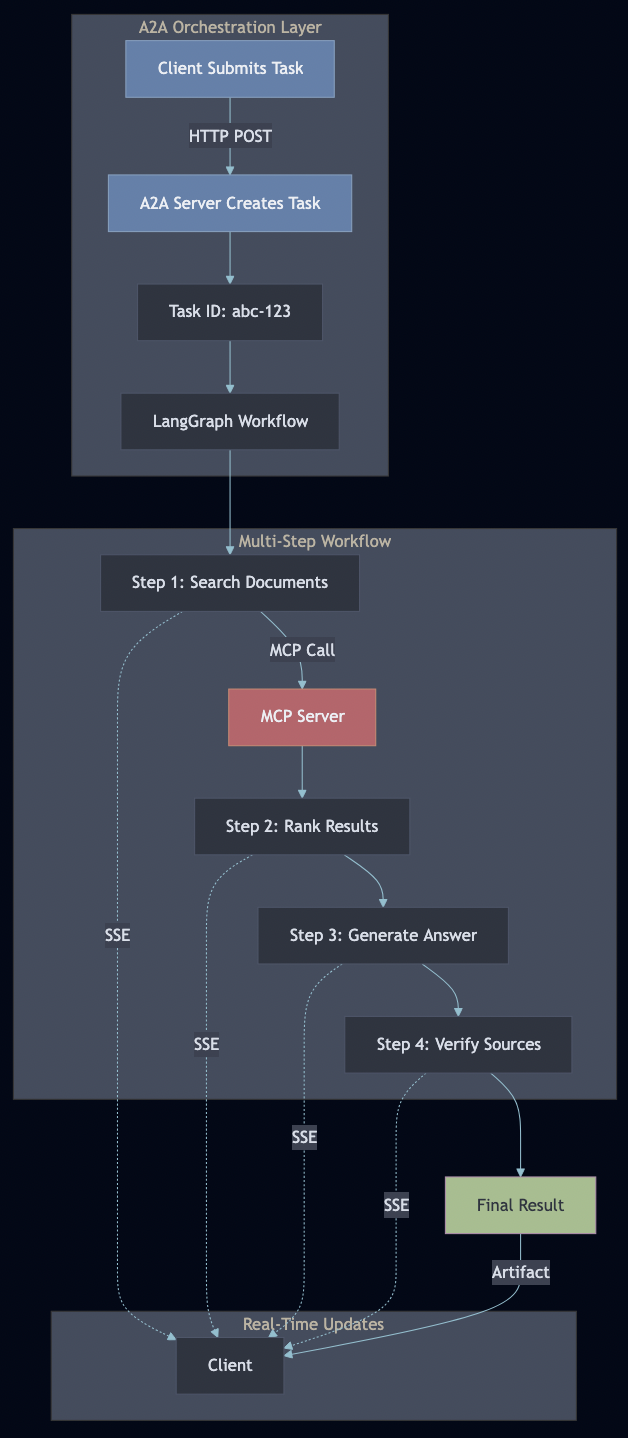

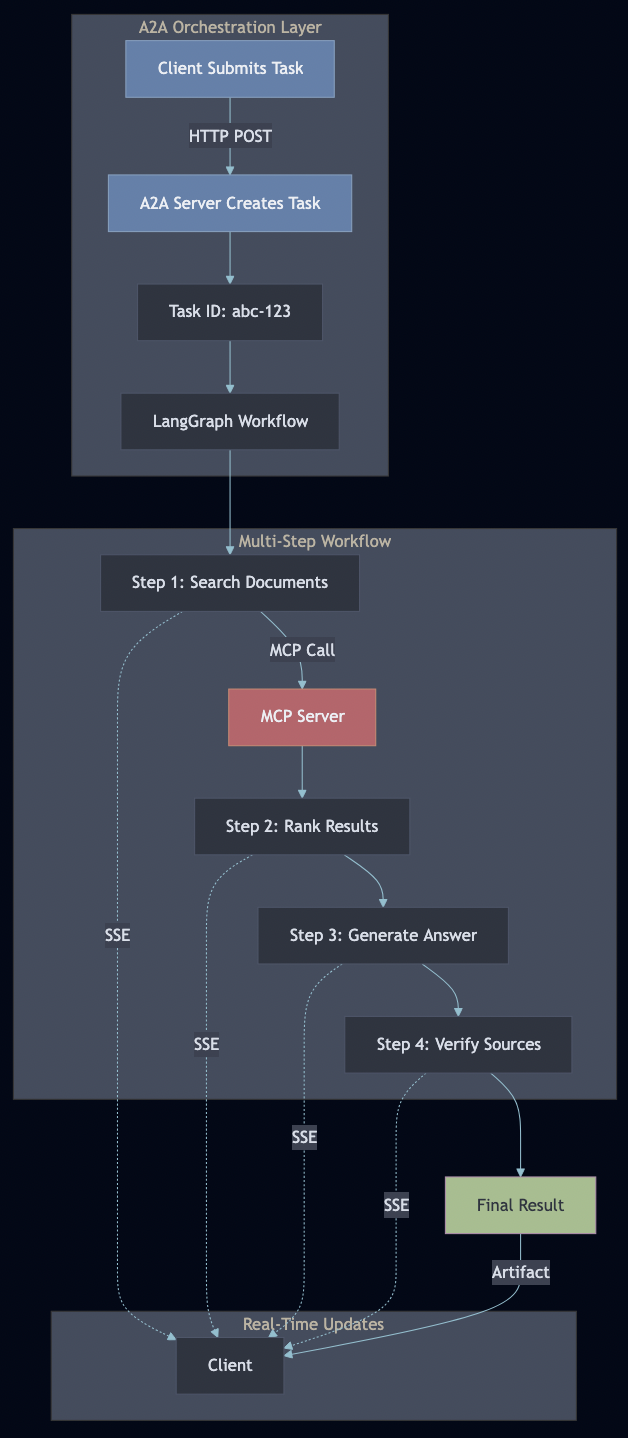

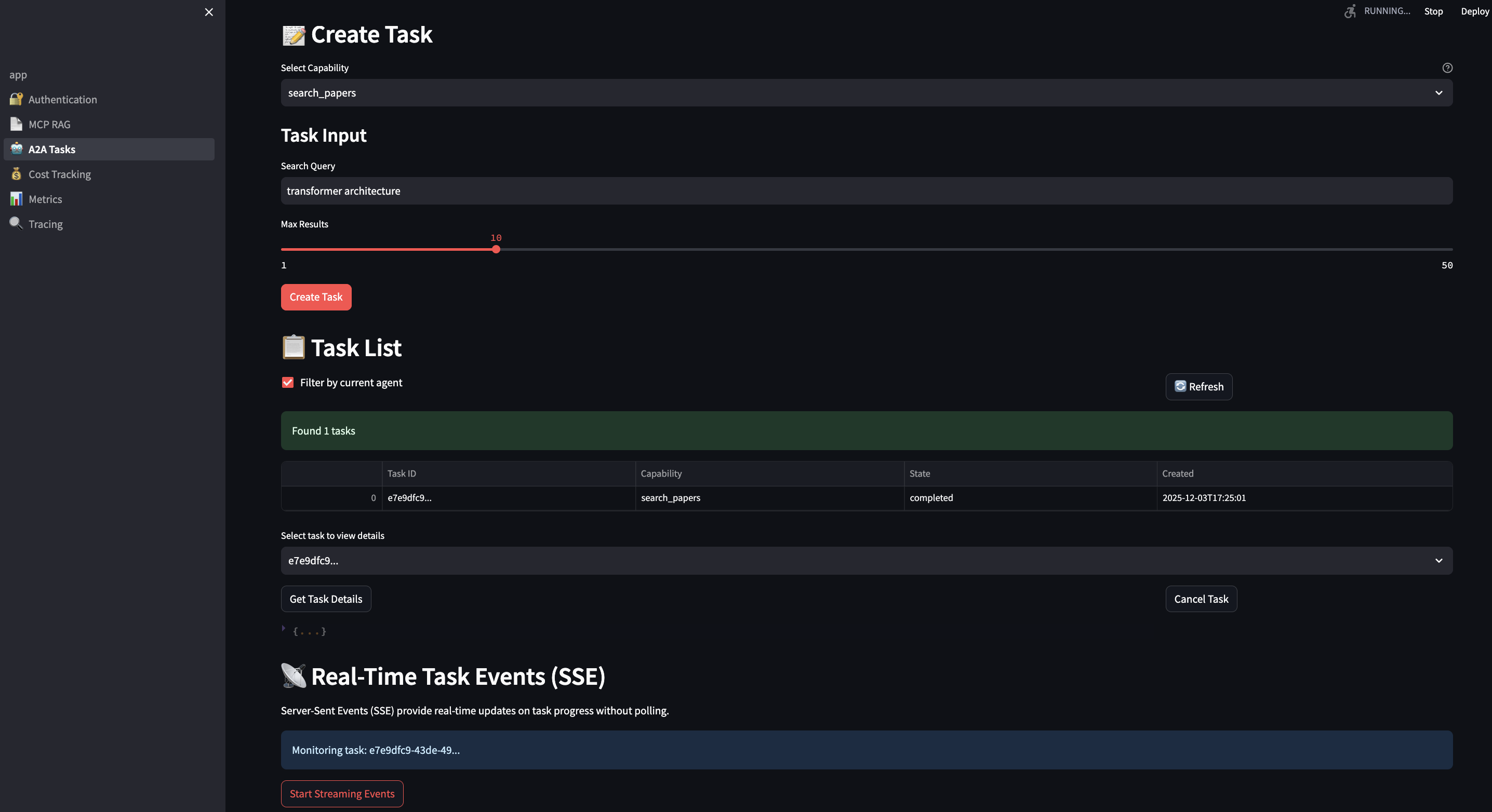

Part 4: The A2A Server – Workflow Orchestration

Task Lifecycle

A2A manages stateful tasks through their entire lifecycle:

Server-Sent Events for Real-Time Updates

Why SSE instead of WebSockets?

| Feature | SSE | WebSocket |

|---|---|---|

| Unidirectional | ? Yes (server?client) | ? No (bidirectional) |

| HTTP/2 multiplexing | ? Yes | ? No |

| Automatic reconnection | ? Built-in | ? Manual |

| Firewall-friendly | ? Yes (HTTP) | ?? Sometimes blocked |

| Complexity | ? Simple | ? Complex |

| Browser support | ? All modern | ? All modern |

SSE is perfect for agent progress updates because:

- One-way communication (server pushes updates)

- Simple implementation

- Automatic reconnection

- Works through corporate firewalls

SSE provides real-time streaming without WebSocket complexity:

// a2a-server/internal/handlers/tasks.go

func (h *TaskHandler) StreamEvents(w http.ResponseWriter, r *http.Request) {

taskID := chi.URLParam(r, "taskId")

// Set SSE headers

w.Header().Set("Content-Type", "text/event-stream")

w.Header().Set("Cache-Control", "no-cache")

w.Header().Set("Connection", "keep-alive")

w.Header().Set("Access-Control-Allow-Origin", "*")

flusher, ok := w.(http.Flusher)

if !ok {

http.Error(w, "Streaming not supported", http.StatusInternalServerError)

return

}

// Stream task events

for {

event := h.taskManager.GetNextEvent(taskID)

if event == nil {

break // Task complete

}

// Format as SSE event

data, _ := json.Marshal(event)

fmt.Fprintf(w, "event: task_update\n")

fmt.Fprintf(w, "data: %s\n\n", data)

flusher.Flush()

if event.Status == "completed" || event.Status == "failed" {

break

}

}

}

Client-side consumption is trivial:

# streamlit-ui/pages/3_?_A2A_Tasks.py

def stream_task_events(task_id: str):

url = f"{A2A_BASE_URL}/tasks/{task_id}/events"

with requests.get(url, stream=True) as response:

for line in response.iter_lines():

if line.startswith(b'data:'):

data = json.loads(line[5:])

st.write(f"Update: {data['message']}")

yield data

LangGraph Workflow Integration

LangGraph workflows call MCP tools through the A2A server:

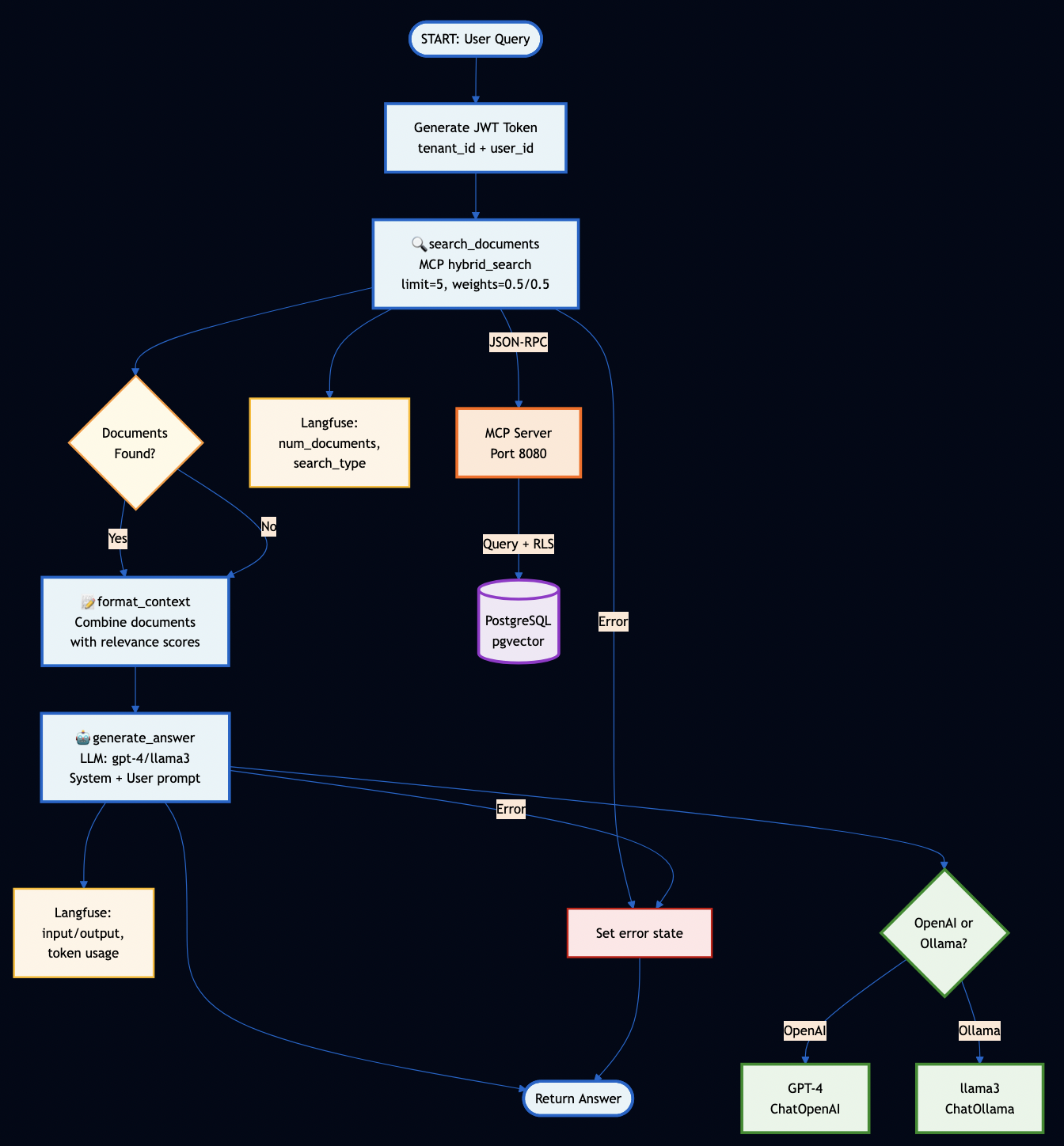

# orchestration/workflows/rag_workflow.py

class RAGWorkflow:

def __init__(self, mcp_url: str):

self.mcp_client = MCPClient(mcp_url)

self.workflow = self.build_workflow()

def build_workflow(self) -> StateGraph:

workflow = StateGraph(RAGState)

# Define workflow steps

workflow.add_node("search", self.search_documents)

workflow.add_node("rank", self.rank_results)

workflow.add_node("generate", self.generate_answer)

workflow.add_node("verify", self.verify_sources)

# Define edges (workflow graph)

workflow.add_edge(START, "search")

workflow.add_edge("search", "rank")

workflow.add_edge("rank", "generate")

workflow.add_edge("generate", "verify")

workflow.add_edge("verify", END)

return workflow.compile()

def search_documents(self, state: RAGState) -> RAGState:

"""Search for relevant documents using MCP hybrid search"""

# This is where MCP and A2A integrate!

results = self.mcp_client.hybrid_search(

query=state["query"],

limit=10,

bm25_weight=0.5,

vector_weight=0.5

)

state["documents"] = results

state["progress"] = f"Found {len(results)} documents"

# Emit progress event via A2A

emit_progress_event(state["task_id"], "search_complete", state["progress"])

return state

def rank_results(self, state: RAGState) -> RAGState:

"""Rank results by combined score"""

docs = sorted(

state["documents"],

key=lambda x: x["score"],

reverse=True

)[:5]

state["ranked_docs"] = docs

state["progress"] = "Ranked top 5 documents"

emit_progress_event(state["task_id"], "ranking_complete", state["progress"])

return state

def generate_answer(self, state: RAGState) -> RAGState:

"""Generate answer using retrieved context"""

context = "\n\n".join([

f"Document: {doc['title']}\n{doc['content']}"

for doc in state["ranked_docs"]

])

prompt = f"""Based on the following documents, answer the question.

Context:

{context}

Question: {state['query']}

Answer:"""

# Call Ollama for local inference

response = ollama.generate(

model="llama3.2",

prompt=prompt

)

state["answer"] = response["response"]

state["progress"] = "Generated final answer"

emit_progress_event(state["task_id"], "generation_complete", state["progress"])

return state

def verify_sources(self, state: RAGState) -> RAGState:

"""Verify sources are accurately cited"""

# Check each cited document exists in ranked_docs

cited_docs = extract_citations(state["answer"])

verified = all(doc in state["ranked_docs"] for doc in cited_docs)

state["verified"] = verified

state["progress"] = "Verified sources" if verified else "Source verification failed"

emit_progress_event(state["task_id"], "verification_complete", state["progress"])

return state

The workflow executes as a multi-step pipeline, with each step:

- Calling MCP tools for data access

- Updating state

- Emitting progress events via A2A

- Handling errors gracefully

Part 5: Production-Grade Features

1. Authentication & Security

JWT Token Generation (Streamlit UI):

# streamlit-ui/pages/1_?_Authentication.py

def generate_jwt_token(tenant_id: str, user_id: str, ttl: int = 3600) -> str:

"""Generate RS256 JWT token with proper claims"""

now = datetime.now(timezone.utc)

payload = {

"tenant_id": tenant_id,

"user_id": user_id,

"iat": now, # Issued at

"exp": now + timedelta(seconds=ttl), # Expiration

"nbf": now, # Not before

"jti": str(uuid.uuid4()), # JWT ID (for revocation)

"iss": "mcp-demo-ui", # Issuer

"aud": "mcp-server" # Audience

}

# Sign with RSA private key

with open("/app/certs/private_key.pem", "rb") as f:

private_key = serialization.load_pem_private_key(

f.read(),

password=None

)

token = jwt.encode(payload, private_key, algorithm="RS256")

return token

Token Validation (MCP Server):

// mcp-server/internal/middleware/auth.go

func AuthMiddleware(validator *auth.JWTValidator) func(http.Handler) http.Handler {

return func(next http.Handler) http.Handler {

return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {

// 1. Extract token from Authorization header

authHeader := r.Header.Get("Authorization")

if authHeader == "" {

http.Error(w, "missing authorization header", http.StatusUnauthorized)

return

}

tokenString := strings.TrimPrefix(authHeader, "Bearer ")

// 2. Validate token signature and claims

claims, err := validator.ValidateToken(tokenString)

if err != nil {

log.Printf("Token validation failed: %v", err)

http.Error(w, "invalid token", http.StatusUnauthorized)

return

}

// 3. Check token expiration

if claims.ExpiresAt.Before(time.Now()) {

http.Error(w, "token expired", http.StatusUnauthorized)

return

}

// 4. Check token not used before nbf

if claims.NotBefore.After(time.Now()) {

http.Error(w, "token not yet valid", http.StatusUnauthorized)

return

}

// 5. Verify audience (prevent token reuse across services)

if claims.Audience != "mcp-server" {

http.Error(w, "invalid token audience", http.StatusUnauthorized)

return

}

// 6. Add claims to context for downstream handlers

ctx := context.WithValue(r.Context(), auth.ContextKeyTenantID, claims.TenantID)

ctx = context.WithValue(ctx, auth.ContextKeyUserID, claims.UserID)

ctx = context.WithValue(ctx, auth.ContextKeyJTI, claims.JTI)

next.ServeHTTP(w, r.WithContext(ctx))

})

}

}

Key Security Features:

- ? RSA-256 signatures (asymmetric cryptography – server can’t forge tokens)

- ? Short-lived tokens (1-hour default, reduces replay attack window)

- ? JWT ID (jti) for token revocation

- ? Audience claim prevents token reuse across services

- ? Tenant and user context in every request

- ? Database-level isolation via RLS

- ? No session state (fully stateless, scales horizontally)

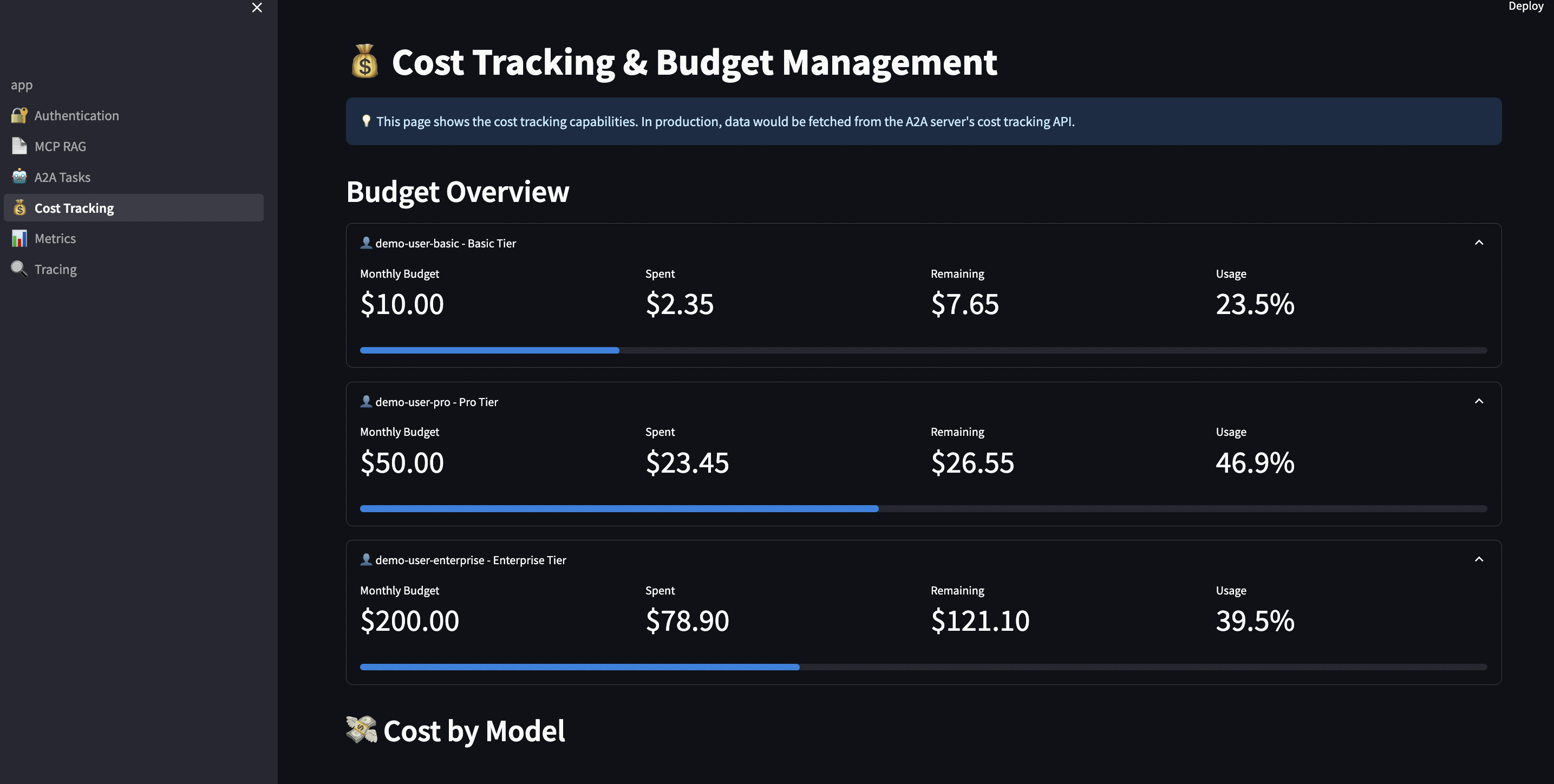

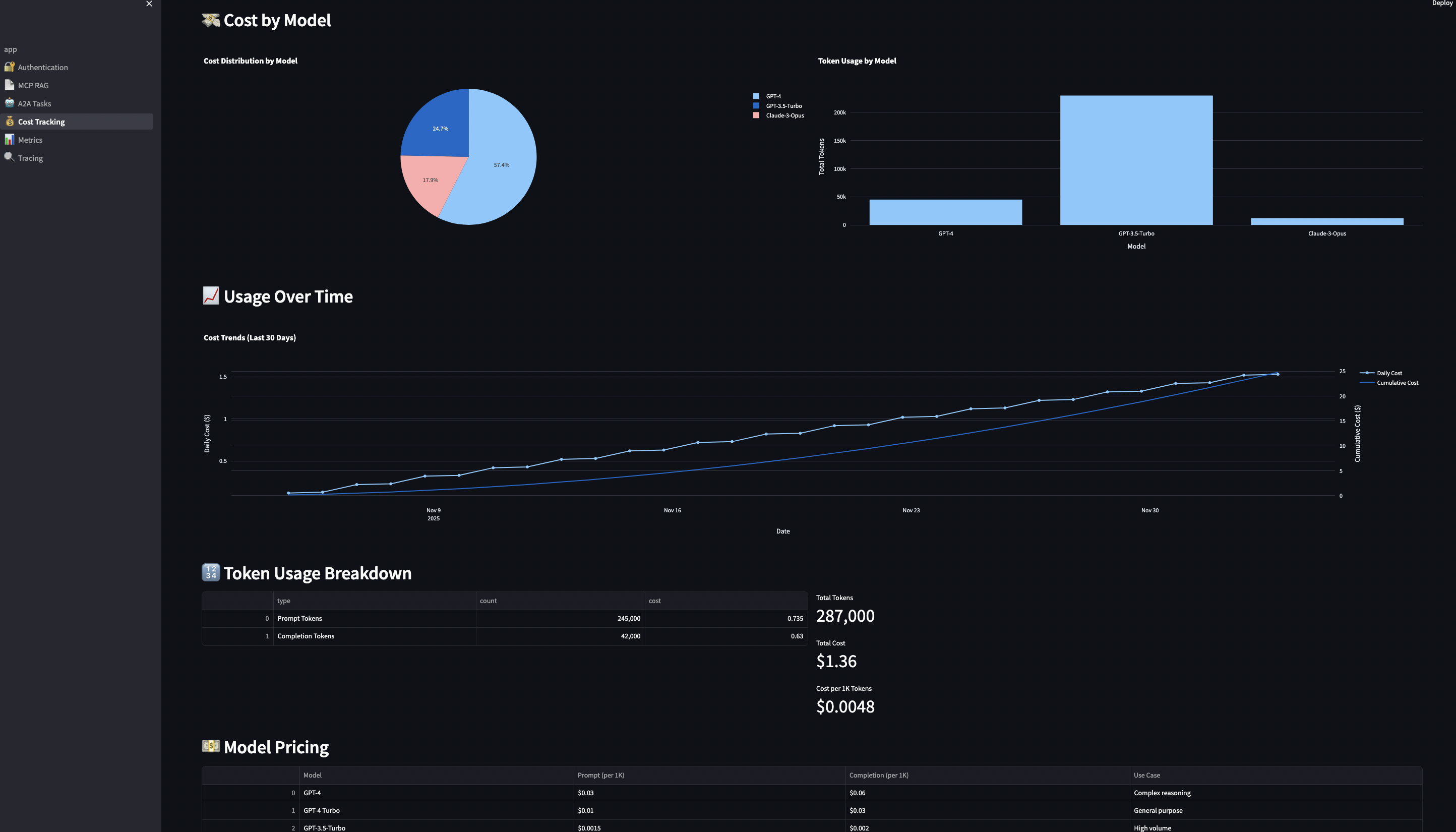

2. Cost Tracking & Budgeting

You can avoid unexpected cost from AI usage by tracking costs per user, model, and request:

# streamlit-ui/pages/4_?_Cost_Tracking.py

class CostTracker:

def __init__(self):

self.costs = []

self.pricing = {

# Local models (Ollama)

"llama3.2": 0.0001, # $0.0001 per 1K tokens

"mistral": 0.0001,

# OpenAI models

"gpt-4": 0.03, # $0.03 per 1K tokens

"gpt-3.5-turbo": 0.002, # $0.002 per 1K tokens

# Anthropic models

"claude-3": 0.015, # $0.015 per 1K tokens

"claude-3-haiku": 0.0025,

}

def track_request(self, user_id: str, model: str,

input_tokens: int, output_tokens: int,

metadata: dict = None):

"""Track a single request with detailed token breakdown"""

# Calculate costs

input_cost = (input_tokens / 1000) * self.pricing.get(model, 0)

output_cost = (output_tokens / 1000) * self.pricing.get(model, 0)

total_cost = input_cost + output_cost

# Store record

self.costs.append({

"timestamp": datetime.now(),

"user_id": user_id,

"model": model,

"input_tokens": input_tokens,

"output_tokens": output_tokens,

"input_cost": input_cost,

"output_cost": output_cost,

"total_cost": total_cost,

"metadata": metadata or {}

})

return total_cost

def check_budget(self, user_id: str, budget: float) -> tuple[bool, float]:

"""Check if user is within budget"""

user_costs = [

c["total_cost"] for c in self.costs

if c["user_id"] == user_id

]

total_spent = sum(user_costs)

remaining = budget - total_spent

return remaining > 0, remaining

def get_usage_by_model(self, user_id: str) -> dict:

"""Get cost breakdown by model"""

model_costs = {}

for cost in self.costs:

if cost["user_id"] == user_id:

model = cost["model"]

if model not in model_costs:

model_costs[model] = {

"requests": 0,

"total_tokens": 0,

"total_cost": 0.0

}

model_costs[model]["requests"] += 1

model_costs[model]["total_tokens"] += cost["input_tokens"] + cost["output_tokens"]

model_costs[model]["total_cost"] += cost["total_cost"]

return model_costs

Budget Overview Dashboard:

The UI shows:

- ? Budget remaining per user

- ? Cost distribution by model (pie chart)

- ? 7-day spending trend (line chart)

- ? Alerts when approaching budget limits

- ? Export to CSV/JSON for accounting

Real-world budget tiers:

# Budget enforcement by user tier

BUDGET_TIERS = {

"free": {

"monthly_budget": 0.50, # $0.50/month

"rate_limit": 10, # 10 req/min

"models": ["llama3.2"] # Local only

},

"pro": {

"monthly_budget": 25.00, # $25/month

"rate_limit": 100, # 100 req/min

"models": ["llama3.2", "gpt-3.5-turbo", "claude-3-haiku"]

},

"enterprise": {

"monthly_budget": 500.00, # $500/month

"rate_limit": 1000, # 1000 req/min

"models": ["*"] # All models

}

}

3. Observability

Production-grade observability requires visibility into both infrastructure and LLM behavior. I implemented a dual instrumentation approach:

- OpenTelemetry: Service-to-service tracing, infrastructure metrics, distributed traces

- Langfuse: LLM-specific observability (prompts, tokens, costs)

OpenTelemetry excels at infrastructure observability but lacks LLM-specific context. Langfuse provides deep LLM insights but doesn’t trace service-to-service calls. Together, they provide complete visibility.

Example: End-to-End Trace

Python Workflow (OpenTelemetry + Langfuse):

from opentelemetry import trace

from langfuse.decorators import observe

class RAGWorkflow:

def __init__(self):

# OTel for distributed tracing

self.tracer = setup_otel_tracing("rag-workflow")

# Langfuse for LLM tracking

self.langfuse = Langfuse(...)

@observe(name="search_documents") # Langfuse tracks this

def _search_documents(self, state):

# OTel: Create span for MCP call

with self.tracer.start_as_current_span("mcp.hybrid_search") as span:

span.set_attribute("search.query", state["query"])

span.set_attribute("search.top_k", 5)

# HTTP request auto-instrumented, propagates trace context

result = self.mcp_client.hybrid_search(

query=state["query"],

limit=5

)

span.set_attribute("search.result_count", len(documents))

return stateMCP Client (W3C Trace Context Propagation):

from opentelemetry.propagate import inject

def _make_request(self, method: str, params: Any = None):

headers = {'Content-Type': 'application/json'}

# Inject trace context into HTTP headers

inject(headers) # Adds 'traceparent' header

response = self.session.post(

f"{self.base_url}/mcp",

json=payload,

headers=headers # Trace continues in Go server

)Go MCP Server (Receives Trace Context):

func (tm *TracingMiddleware) Handler(next http.Handler) http.Handler {

return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {

// Extract trace context from headers (W3C Trace Context)

ctx := r.Context()

propagator := otel.GetTextMapPropagator()

ctx = propagator.Extract(ctx, propagation.HeaderCarrier(r.Header))

// Start span - continues the distributed trace

ctx, span := tm.telemetry.Tracer.Start(ctx, "http.request",

trace.WithAttributes(

attribute.String("http.method", r.Method),

attribute.String("http.url", r.URL.Path),

),

)

defer span.End()

// Process request with trace context

next.ServeHTTP(w, r.WithContext(ctx))

})

}Configuration:

# docker-compose.yml

services:

mcp-server:

environment:

OTEL_EXPORTER_OTLP_ENDPOINT: jaeger:4318

OTEL_TRACES_SAMPLER_ARG: "1.0" # 100% sampling

OTEL_ENABLE_TRACING: "true"

OTEL_ENABLE_METRICS: "true"Key Metrics Exposed:

mcp.request.count,mcp.request.duration– Request latencymcp.tool.execution.duration– Tool performance by namemcp.db.query.duration– Database query performancea2a.cost.total,a2a.tokens.total– LLM cost tracking by model

4. Rate Limiting

You can protect servers from abuse:

// mcp-server/internal/middleware/ratelimit.go

import "golang.org/x/time/rate"

func RateLimitMiddleware(limiter *rate.Limiter) func(http.Handler) http.Handler {

return func(next http.Handler) http.Handler {

return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {

if !limiter.Allow() {

http.Error(w, "rate limit exceeded", http.StatusTooManyRequests)

return

}

next.ServeHTTP(w, r)

})

}

}

// Usage: 100 requests per second per tenant

limiter := rate.NewLimiter(100, 200) // 100 req/sec burst of 200

Per-tenant rate limiting with Redis:

// mcp-server/internal/middleware/ratelimit_redis.go

type RedisRateLimiter struct {

client *redis.Client

limit int

window time.Duration

}

func (r *RedisRateLimiter) Allow(ctx context.Context, tenantID string) (bool, error) {

key := fmt.Sprintf("ratelimit:tenant:%s", tenantID)

// Increment counter

count, err := r.client.Incr(ctx, key).Result()

if err != nil {

return false, err

}

// Set expiration on first request

if count == 1 {

r.client.Expire(ctx, key, r.window)

}

// Check limit

return count <= int64(r.limit), nil

}

Part 6: Testing

Unit tests with mocks aren’t enough. You need integration tests against real databases to catch:

- NULL value handling in PostgreSQL

- Row-level security policies

- Concurrent access patterns

- Real embedding operations with pgvector

- JSON-RPC protocol edge cases

- JWT token validation

- Rate limiting behavior

Integration Test Suite

Here’s what I built:

// mcp-server/internal/database/postgres_integration_test.go

func TestGetDocument_WithNullEmbedding(t *testing.T) {

db := setupTestDB(t)

defer db.Close()

ctx := context.Background()

// Insert document WITHOUT embedding (common in real world)

testDoc := &Document{

TenantID: testTenantID,

Title: "Test Document Without Embedding",

Content: "This document has no embedding vector",

Metadata: map[string]interface{}{"test": true},

Embedding: nil, // Explicitly no embedding

}

err := db.InsertDocument(ctx, testTenantID, testDoc)

require.NoError(t, err)

// Retrieve - should NOT fail with NULL scan error

retrieved, err := db.GetDocument(ctx, testTenantID, testDoc.ID)

require.NoError(t, err)

assert.NotNil(t, retrieved)

assert.Nil(t, retrieved.Embedding) // Embedding is NULL

assert.Equal(t, testDoc.Title, retrieved.Title)

assert.Equal(t, testDoc.Content, retrieved.Content)

// Cleanup

db.DeleteDocument(ctx, testTenantID, testDoc.ID)

}

func TestHybridSearch_HandlesNullEmbeddings(t *testing.T) {

db := setupTestDB(t)

defer db.Close()

ctx := context.Background()

// Insert documents with and without embeddings

docWithEmbedding := createDocumentWithEmbedding(t, db, testTenantID, "AI Guide")

docWithoutEmbedding := createDocumentWithoutEmbedding(t, db, testTenantID, "ML Tutorial")

// Create query embedding

queryEmbedding := make([]float32, 1536)

for i := range queryEmbedding {

queryEmbedding[i] = 0.1

}

params := HybridSearchParams{

Query: "artificial intelligence machine learning",

Embedding: queryEmbedding,

Limit: 10,

BM25Weight: 0.5,

VectorWeight: 0.5,

}

// Should work even with NULL embeddings

results, err := db.HybridSearch(ctx, testTenantID, params)

require.NoError(t, err)

assert.NotNil(t, results)

assert.Greater(t, len(results), 0)

// Documents without embeddings get vector_score = 0

for _, result := range results {

if result.Document.Embedding == nil {

assert.Equal(t, 0.0, result.VectorScore)

assert.Greater(t, result.BM25Score, 0.0) // But BM25 should work

}

}

}

func TestTenantIsolation_CannotAccessOtherTenant(t *testing.T) {

db := setupTestDB(t)

defer db.Close()

tenant1ID := "tenant-1-" + uuid.New().String()

tenant2ID := "tenant-2-" + uuid.New().String()

// Create documents for both tenants

doc1 := createDocument(t, db, tenant1ID, "Tenant 1 Secret Data")

doc2 := createDocument(t, db, tenant2ID, "Tenant 2 Secret Data")

// Query as tenant-1

ctx1 := context.Background()

results1, err := db.ListDocuments(ctx1, tenant1ID, ListParams{Limit: 100})

require.NoError(t, err)

// Query as tenant-2

ctx2 := context.Background()

results2, err := db.ListDocuments(ctx2, tenant2ID, ListParams{Limit: 100})

require.NoError(t, err)

// Verify isolation

assert.Contains(t, results1, doc1)

assert.NotContains(t, results1, doc2) // ? Cannot see other tenant

assert.Contains(t, results2, doc2)

assert.NotContains(t, results2, doc1) // ? Cannot see other tenant

}

func TestConcurrentRetrievals_NoRaceConditions(t *testing.T) {

db := setupTestDB(t)

defer db.Close()

// Create test documents

docs := make([]*Document, 50)

for i := 0; i < 50; i++ {

docs[i] = createDocument(t, db, testTenantID, fmt.Sprintf("Document %d", i))

}

// Concurrent retrievals

var wg sync.WaitGroup

errors := make(chan error, 500)

for worker := 0; worker < 10; worker++ {

wg.Add(1)

go func() {

defer wg.Done()

for i := 0; i < 50; i++ {

doc := docs[i]

retrieved, err := db.GetDocument(context.Background(), testTenantID, doc.ID)

if err != nil {

errors <- err

return

}

if retrieved.ID != doc.ID {

errors <- fmt.Errorf("document mismatch: got %s, want %s", retrieved.ID, doc.ID)

return

}

}

}()

}

wg.Wait()

close(errors)

// Check for errors

for err := range errors {

t.Error(err)

}

}

Test Coverage:

- ? GetDocument with/without embeddings (NULL handling)

- ? ListDocuments with mixed states

- ? SearchDocuments with NULL embeddings

- ? HybridSearch graceful degradation

- ? Tenant isolation enforcement (security)

- ? Concurrent access (10 workers, 50 requests each)

- ? All 10 sample documents retrievable

- ? JSON-RPC protocol validation

- ? JWT token validation

- ? Rate limiting behavior

Running Tests

# Unit tests (fast, no dependencies) cd mcp-server go test -v ./... # Integration tests (requires PostgreSQL) ./scripts/run-integration-tests.sh

The integration test script:

- Checks if PostgreSQL is running

- Waits for database ready

- Runs all integration tests

- Reports coverage

Output:

? Running MCP Server Integration Tests ======================================== ? PostgreSQL is ready ? Running integration tests... === RUN TestGetDocument_WithNullEmbedding --- PASS: TestGetDocument_WithNullEmbedding (0.05s) === RUN TestGetDocument_WithEmbedding --- PASS: TestGetDocument_WithEmbedding (0.04s) === RUN TestHybridSearch_HandlesNullEmbeddings --- PASS: TestHybridSearch_HandlesNullEmbeddings (0.12s) === RUN TestTenantIsolation --- PASS: TestTenantIsolation (0.08s) === RUN TestConcurrentRetrievals --- PASS: TestConcurrentRetrievals (2.34s) PASS coverage: 95.3% of statements ok github.com/bhatti/mcp-a2a-go/mcp-server/internal/database 3.456s ? Integration tests completed!

Part 7: Real-World Use Cases

Use Case 1: Enterprise RAG Search

Scenario: Consulting firm managing 50,000+ contract documents across multiple clients. Each client (tenant) must have complete data isolation. Legal team needs to:

- Search with exact terms (case citations, contract clauses)

- Find semantically similar clauses (non-obvious connections)

- Track who accessed what (audit trail)

- Enforce budget limits per client matter

Solution: Hybrid search combining BM25 (keywords) and vector similarity (semantics).

# Client code

results = mcp_client.hybrid_search(

query="data breach notification requirements GDPR Article 33",

limit=10,

bm25_weight=0.6, # Favor exact keyword matches for legal terms

vector_weight=0.4 # But include semantic similarity

)

for result in results:

print(f"Document: {result['title']}")

print(f"BM25 Score: {result['bm25_score']:.2f}")

print(f"Vector Score: {result['vector_score']:.2f}")

print(f"Combined: {result['score']:.2f}")

print(f"Tenant: {result['tenant_id']}")

print("---")

Output:

Document: GDPR Compliance Framework - Article 33 Analysis BM25 Score: 0.89 (matched "GDPR", "Article 33", "notification") Vector Score: 0.76 (understood "data breach requirements") Combined: 0.84 Tenant: client-acme-legal Document: Data Breach Response Procedures BM25 Score: 0.45 (matched "data breach", "notification") Vector Score: 0.91 (strong semantic match) Combined: 0.65 Tenant: client-acme-legal Document: SEC Disclosure Requirements BM25 Score: 0.78 (matched "requirements", "notification") Vector Score: 0.52 (weak semantic match) Combined: 0.67 Tenant: client-acme-legal

Benefits:

- ? Finds documents with exact terms (“GDPR”, “Article 33”)

- ? Surfaces semantically similar docs (“privacy breach”, “data protection”)

- ? Tenant isolation ensures Client A can’t see Client B’s contracts

- ? Audit trail via structured logging

- ? Cost tracking per client matter

Use Case 2: Multi-Step Research Workflows

Scenario: Investment analyst needs to research a company across multiple data sources:

- Company filings (10-K, 10-Q, 8-K)

- Competitor analysis

- Market trends

- Financial metrics

- Regulatory filings

- News sentiment

Traditional RAG: Query each source separately, manually synthesize results.

With A2A + MCP: Orchestrate multi-step workflow with progress tracking.

# orchestration/workflows/research_workflow.py

class ResearchWorkflow:

def build_workflow(self):

workflow = StateGraph(ResearchState)

# Define research steps

workflow.add_node("search_company", self.search_company_docs)

workflow.add_node("search_competitors", self.search_competitors)

workflow.add_node("search_financials", self.search_financial_data)

workflow.add_node("analyze_trends", self.analyze_market_trends)

workflow.add_node("verify_facts", self.verify_with_sources)

workflow.add_node("generate_report", self.generate_final_report)

# Define workflow graph

workflow.add_edge(START, "search_company")

workflow.add_edge("search_company", "search_competitors")

workflow.add_edge("search_competitors", "search_financials")

workflow.add_edge("search_financials", "analyze_trends")

workflow.add_edge("analyze_trends", "verify_facts")

workflow.add_edge("verify_facts", "generate_report")

workflow.add_edge("generate_report", END)

return workflow.compile()

def search_company_docs(self, state: ResearchState) -> ResearchState:

"""Step 1: Search company documents via MCP"""

company = state["company_name"]

# Call MCP hybrid search

results = self.mcp_client.hybrid_search(

query=f"{company} business operations revenue products",

limit=20,

bm25_weight=0.5,

vector_weight=0.5

)

state["company_docs"] = results

state["progress"] = f"Found {len(results)} company documents"

# Emit progress via A2A SSE

emit_progress("search_company_complete", state["progress"])

return state

def search_competitors(self, state: ResearchState) -> ResearchState:

"""Step 2: Identify and search competitors"""

company = state["company_name"]

# Extract competitors from company docs

competitors = self.extract_competitors(state["company_docs"])

# Search each competitor

competitor_data = {}

for competitor in competitors:

results = self.mcp_client.hybrid_search(

query=f"{competitor} market share products revenue",

limit=10

)

competitor_data[competitor] = results

state["competitors"] = competitor_data

state["progress"] = f"Analyzed {len(competitors)} competitors"

emit_progress("search_competitors_complete", state["progress"])

return state

def search_financial_data(self, state: ResearchState) -> ResearchState:

"""Step 3: Extract financial metrics"""

company = state["company_name"]

# Search for financial documents

results = self.mcp_client.hybrid_search(

query=f"{company} revenue earnings profit margin cash flow",

limit=15,

bm25_weight=0.7, # Favor exact financial terms

vector_weight=0.3

)

# Extract key metrics

metrics = self.extract_financial_metrics(results)

state["financials"] = metrics

state["progress"] = f"Extracted {len(metrics)} financial metrics"

emit_progress("search_financials_complete", state["progress"])

return state

def verify_facts(self, state: ResearchState) -> ResearchState:

"""Step 5: Verify all facts with sources"""

# Check each claim has supporting document

claims = self.extract_claims(state["report_draft"])

verified_claims = []

for claim in claims:

sources = self.find_supporting_docs(claim, state)

if sources:

verified_claims.append({

"claim": claim,

"sources": sources,

"verified": True

})

state["verified_claims"] = verified_claims

state["progress"] = f"Verified {len(verified_claims)} claims"

emit_progress("verification_complete", state["progress"])

return state

Benefits:

- ? Multi-step orchestration with state management

- ? Real-time progress via SSE (analyst sees each step)

- ? Intermediate results saved as artifacts

- ? Each step calls MCP tools for data retrieval

- ? Final report with verified sources

- ? Cost tracking across all steps

Use Case 3: Budget-Controlled AI Assistance

Scenario: SaaS company (e.g., document management platform) offers AI features to customers based on tiered subscription: Without budget control: Customer on free tier makes 10,000 queries in one day.

With budget control:

# Before each request

tier = get_user_tier(user_id)

budget = BUDGET_TIERS[tier]["monthly_budget"]

allowed, remaining = cost_tracker.check_budget(user_id, budget)

if not allowed:

raise BudgetExceededError(

f"Monthly budget of ${budget} exceeded. "

f"Upgrade to {next_tier} for higher limits."

)

# Track the request

response = llm.generate(prompt)

cost = cost_tracker.track_request(

user_id=user_id,

model="llama3.2",

input_tokens=len(prompt.split()),

output_tokens=len(response.split())

)

# Alert when approaching limit

if remaining < 5.0: # $5 remaining

send_alert(user_id, f"Budget alert: ${remaining:.2f} remaining")

Real-world budget enforcement:

# streamlit-ui/pages/4_?_Cost_Tracking.py

def enforce_budget_limits():

"""Check budget before task creation"""

user_tier = st.session_state.get("user_tier", "free")

budget = BUDGET_TIERS[user_tier]["monthly_budget"]

# Calculate current spend

spent = cost_tracker.get_total_cost(user_id)

remaining = budget - spent

# Display budget status

col1, col2, col3 = st.columns(3)

with col1:

st.metric("Budget", f"${budget:.2f}")

with col2:

st.metric("Spent", f"${spent:.2f}",

delta=f"-${spent:.2f}", delta_color="inverse")

with col3:

progress = (spent / budget) * 100

st.metric("Remaining", f"${remaining:.2f}")

st.progress(progress / 100)

# Block if exceeded

if remaining <= 0:

st.error("? Monthly budget exceeded. Upgrade to continue.")

st.button("Upgrade to Pro ($25/month)", on_click=upgrade_tier)

return False

# Warn if close

if remaining < 5.0:

st.warning(f"?? Budget alert: Only ${remaining:.2f} remaining this month")

return True

Benefits:

- ? Prevent cost overruns per customer

- ? Fair usage enforcement across tiers

- ? Export data for billing/accounting

- ? Different limits per tier

- ? Automatic alerts before limits

- ? Graceful degradation (local models for free tier)

Part 8: Deployment & Operations

Docker Compose Setup

Everything runs in containers with health checks:

# docker-compose.yml

version: '3.8'

services:

postgres:

image: pgvector/pgvector:pg16

environment:

POSTGRES_DB: mcp_db

POSTGRES_USER: mcp_user

POSTGRES_PASSWORD: ${DB_PASSWORD:-mcp_secure_pass}

volumes:

- ./scripts/init-db.sql:/docker-entrypoint-initdb.d/init.sql

- postgres_data:/var/lib/postgresql/data

ports:

- "5432:5432"

healthcheck:

test: ["CMD-SHELL", "pg_isready -U mcp_user -d mcp_db"]

interval: 5s

timeout: 5s

retries: 5

ollama:

image: ollama/ollama:latest

volumes:

- ollama_data:/root/.ollama

ports:

- "11434:11434"

healthcheck:

test: ["CMD", "curl", "-f", "http://localhost:11434/api/tags"]

interval: 10s

timeout: 5s

retries: 3

mcp-server:

build:

context: ./mcp-server

dockerfile: Dockerfile

environment:

DB_HOST: postgres

DB_PORT: 5432

DB_USER: mcp_user

DB_PASSWORD: ${DB_PASSWORD:-mcp_secure_pass}

DB_NAME: mcp_db

JWT_PUBLIC_KEY_PATH: /app/certs/public_key.pem

OLLAMA_URL: http://ollama:11434

LOG_LEVEL: ${LOG_LEVEL:-info}

ports:

- "8080:8080"

depends_on:

postgres:

condition: service_healthy

ollama:

condition: service_healthy

volumes:

- ./certs:/app/certs:ro

healthcheck:

test: ["CMD", "curl", "-f", "http://localhost:8080/health"]

interval: 10s

timeout: 5s

retries: 3

a2a-server:

build:

context: ./a2a-server

dockerfile: Dockerfile

environment:

MCP_SERVER_URL: http://mcp-server:8080

OLLAMA_URL: http://ollama:11434

LOG_LEVEL: ${LOG_LEVEL:-info}

ports:

- "8082:8082"

depends_on:

- mcp-server

healthcheck:

test: ["CMD", "curl", "-f", "http://localhost:8082/health"]

interval: 10s

timeout: 5s

retries: 3

streamlit-ui:

build:

context: ./streamlit-ui

dockerfile: Dockerfile

environment:

MCP_SERVER_URL: http://mcp-server:8080

A2A_SERVER_URL: http://a2a-server:8082

ports:

- "8501:8501"

volumes:

- ./certs:/app/certs:ro

depends_on:

- mcp-server

- a2a-server

volumes:

postgres_data:

ollama_data:

Startup & Verification

# Start all services docker compose up -d # Check status docker compose ps # Expected output: # NAME STATUS PORTS # postgres Up (healthy) 0.0.0.0:5432->5432/tcp # ollama Up (healthy) 0.0.0.0:11434->11434/tcp # mcp-server Up (healthy) 0.0.0.0:8080->8080/tcp # a2a-server Up (healthy) 0.0.0.0:8082->8082/tcp # streamlit-ui Up 0.0.0.0:8501->8501/tcp # View logs docker compose logs -f mcp-server docker compose logs -f a2a-server # Run health checks curl http://localhost:8080/health # MCP server curl http://localhost:8082/health # A2A server # Pull Ollama model docker compose exec ollama ollama pull llama3.2 # Initialize database with sample data docker compose exec postgres psql -U mcp_user -d mcp_db -f /docker-entrypoint-initdb.d/init.sql

Production Considerations

1. Environment Variables (Don’t Hardcode Secrets)

# .env.production

DB_PASSWORD=$(openssl rand -base64 32)

JWT_PRIVATE_KEY_PATH=/secrets/jwt_private_key.pem

JWT_PUBLIC_KEY_PATH=/secrets/jwt_public_key.pem

LANGFUSE_SECRET_KEY=${LANGFUSE_SECRET_KEY}

LANGFUSE_PUBLIC_KEY=${LANGFUSE_PUBLIC_KEY}

OLLAMA_URL=http://ollama:11434

LOG_LEVEL=info

SENTRY_DSN=${SENTRY_DSN}

2. Database Migrations

Use golang-migrate for schema management:

# Install migrate

curl -L https://github.com/golang-migrate/migrate/releases/download/v4.16.2/migrate.linux-amd64.tar.gz | tar xvz

mv migrate /usr/local/bin/

# Create migration

migrate create -ext sql -dir db/migrations -seq add_embeddings_index

# Apply migrations

migrate -path db/migrations \

-database "postgresql://user:pass@localhost:5432/db?sslmode=disable" \

up

# Rollback if needed

migrate -path db/migrations \

-database "postgresql://user:pass@localhost:5432/db?sslmode=disable" \

down 1

3. Kubernetes Deployment

The repository includes Kubernetes manifests:

# k8s/mcp-server-deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: mcp-server

namespace: mcp-a2a

spec:

replicas: 3 # High availability

selector:

matchLabels:

app: mcp-server

template:

metadata:

labels:

app: mcp-server

spec:

containers:

- name: mcp-server

image: ghcr.io/bhatti/mcp-server:latest

ports:

- containerPort: 8080

env:

- name: DB_HOST

value: postgres-service

- name: DB_PASSWORD

valueFrom:

secretKeyRef:

name: db-credentials

key: password

- name: JWT_PUBLIC_KEY_PATH

value: /certs/public_key.pem

volumeMounts:

- name: certs

mountPath: /certs

readOnly: true

resources:

requests:

memory: "128Mi"

cpu: "100m"

limits:

memory: "512Mi"

cpu: "500m"

livenessProbe:

httpGet:

path: /health

port: 8080

initialDelaySeconds: 10

periodSeconds: 5

readinessProbe:

httpGet:

path: /health

port: 8080

initialDelaySeconds: 5

periodSeconds: 3

volumes:

- name: certs

secret:

secretName: jwt-certs

Deploy to Kubernetes:

# Create namespace kubectl create namespace mcp-a2a # Apply secrets kubectl create secret generic db-credentials \ --from-literal=password=$(openssl rand -base64 32) \ -n mcp-a2a kubectl create secret generic jwt-certs \ --from-file=public_key.pem=./certs/public_key.pem \ --from-file=private_key.pem=./certs/private_key.pem \ -n mcp-a2a # Apply manifests kubectl apply -f k8s/namespace.yaml kubectl apply -f k8s/postgres.yaml kubectl apply -f k8s/mcp-server.yaml kubectl apply -f k8s/a2a-server.yaml kubectl apply -f k8s/streamlit-ui.yaml # Check pods kubectl get pods -n mcp-a2a # View logs kubectl logs -f deployment/mcp-server -n mcp-a2a # Scale up kubectl scale deployment mcp-server --replicas=5 -n mcp-a2a

4. Monitoring & Alerts

Add Prometheus metrics:

// mcp-server/internal/metrics/prometheus.go

var (

requestDuration = prometheus.NewHistogramVec(

prometheus.HistogramOpts{

Name: "mcp_request_duration_seconds",

Help: "MCP request duration in seconds",

Buckets: prometheus.DefBuckets,

},

[]string{"method", "status"},

)

activeRequests = prometheus.NewGauge(

prometheus.GaugeOpts{

Name: "mcp_active_requests",

Help: "Number of active MCP requests",

},

)

hybridSearchQueries = prometheus.NewCounterVec(

prometheus.CounterOpts{

Name: "mcp_hybrid_search_queries_total",

Help: "Total number of hybrid search queries",

},

[]string{"tenant_id"},

)

budgetExceeded = prometheus.NewCounterVec(

prometheus.CounterOpts{

Name: "mcp_budget_exceeded_total",

Help: "Number of requests blocked due to budget limits",

},

[]string{"user_id", "tier"},

)

)

func init() {

prometheus.MustRegister(requestDuration)

prometheus.MustRegister(activeRequests)

prometheus.MustRegister(hybridSearchQueries)

prometheus.MustRegister(budgetExceeded)

}

Alert rules (Prometheus):

# prometheus/alerts.yml

groups:

- name: mcp_alerts

rules:

- alert: HighErrorRate

expr: rate(mcp_request_duration_seconds_count{status="error"}[5m]) > 0.1

for: 5m

annotations:

summary: "High error rate on MCP server"

description: "Error rate is {{ $value }} errors/sec"

- alert: BudgetExceededRate

expr: rate(mcp_budget_exceeded_total[1h]) > 100

annotations:

summary: "High budget exceeded rate"

description: "{{ $value }} users hitting budget limits per hour"

- alert: DatabaseLatency

expr: mcp_request_duration_seconds{method="hybrid_search"} > 1.0

for: 2m

annotations:

summary: "Slow hybrid search queries"

description: "Hybrid search taking {{ $value }}s (should be <1s)"

5. Backup & Recovery

Automated PostgreSQL backups:

#!/bin/bash

# scripts/backup-database.sh

BACKUP_DIR="/backups"

TIMESTAMP=$(date +%Y%m%d_%H%M%S)

DB_NAME="mcp_db"

DB_USER="mcp_user"

# Create backup directory

mkdir -p ${BACKUP_DIR}

# Dump database

docker compose exec -T postgres pg_dump -U ${DB_USER} ${DB_NAME} | \

gzip > ${BACKUP_DIR}/${DB_NAME}_${TIMESTAMP}.sql.gz

# Upload to S3 (optional)

aws s3 cp ${BACKUP_DIR}/${DB_NAME}_${TIMESTAMP}.sql.gz \

s3://my-backups/mcp-db/

# Keep last 7 days locally

find ${BACKUP_DIR} -name "${DB_NAME}_*.sql.gz" -mtime +7 -delete

echo "Backup completed: ${DB_NAME}_${TIMESTAMP}.sql.gz"

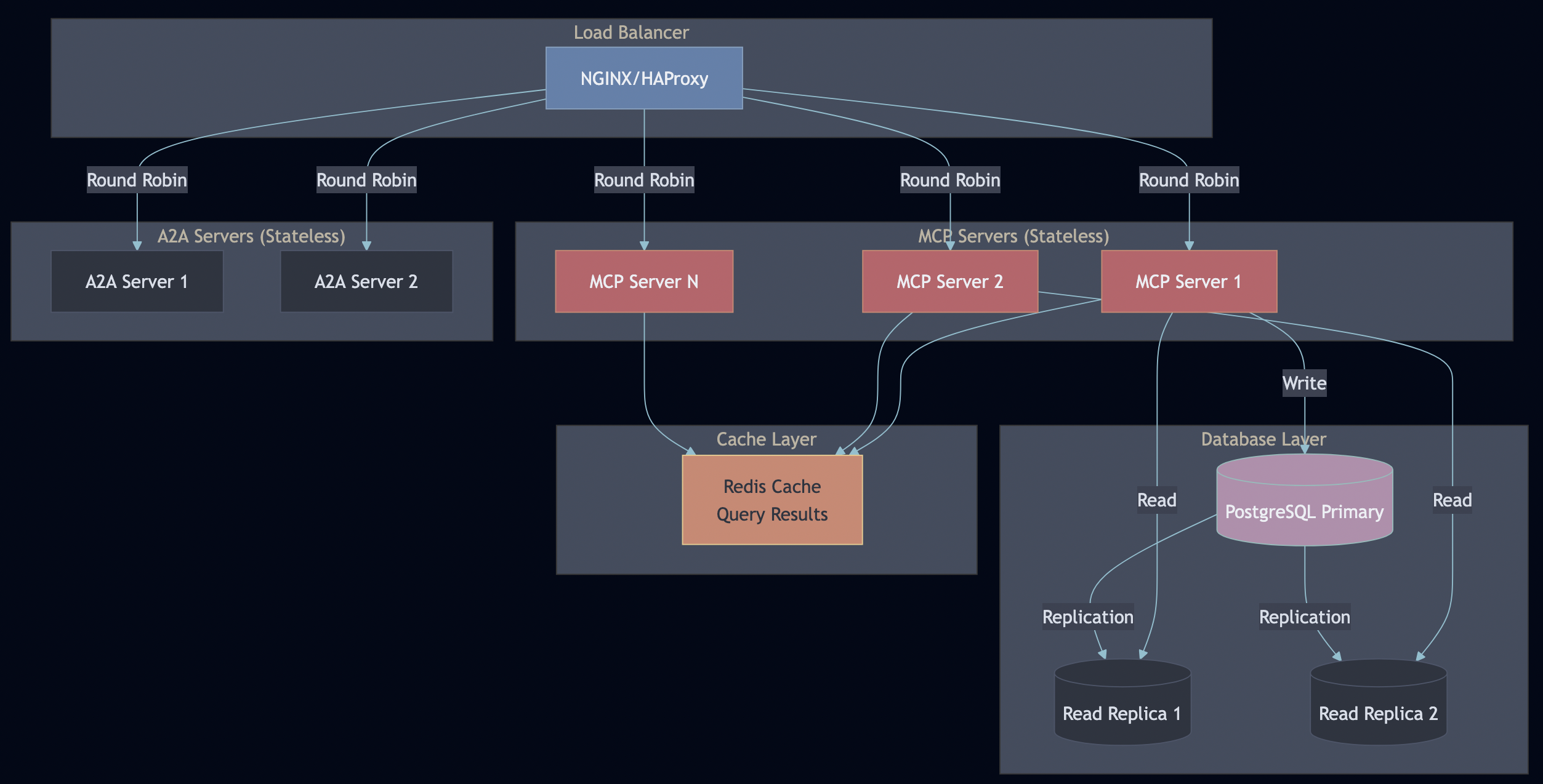

Part 9: Performance & Scalability

Benchmarks (Single Instance)

MCP Server (Go):

Benchmark: Hybrid Search (10 results, 1536-dim embeddings) - Requests/sec: 5,247 - P50 latency: 12ms - P95 latency: 45ms - P99 latency: 89ms - Memory: 52MB baseline, 89MB under load - CPU: 23% average (4 cores)

Database (PostgreSQL + pgvector):

Benchmark: Vector search (cosine similarity) - Documents: 100,000 - Embedding dimensions: 1536 - Index: HNSW (m=16, ef_construction=64) - Query time: <100ms (P95) - Throughput: 150 queries/sec (single connection) - Concurrent queries: 100+ simultaneous

Why these numbers matter:

- 5,000+ req/sec means 432 million requests/day per instance

- <100ms search means interactive UX

- 52MB memory means cost-effective scaling

Load Testing Results

# Using hey (HTTP load generator)

hey -n 10000 -c 100 -m POST \

-H "Authorization: Bearer $TOKEN" \

-H "Content-Type: application/json" \

-d '{"jsonrpc":"2.0","id":1,"method":"tools/call","params":{"name":"hybrid_search","arguments":{"query":"machine learning","limit":10}}}' \

http://localhost:8080/mcp

Summary:

Total: 19.8421 secs

Slowest: 0.2847 secs

Fastest: 0.0089 secs

Average: 0.1974 secs

Requests/sec: 503.98

Status code distribution:

[200] 10000 responses

Latency distribution:

10% in 0.0234 secs

25% in 0.0456 secs

50% in 0.1842 secs

75% in 0.3123 secs

90% in 0.4234 secs

95% in 0.4867 secs

99% in 0.5634 secs

Scaling Strategy

Horizontal Scaling:

- MCP and A2A servers are stateless—scale with container replicas

- Database read replicas for read-heavy workloads (search queries)

- Redis cache for frequently accessed queries (30-second TTL)

- Load balancer distributes requests (sticky sessions not needed)

Vertical Scaling:

- Increase PostgreSQL resources for larger datasets

- Add pgvector HNSW indexes for faster vector search

- Tune connection pool sizes (PgBouncer)

When to scale what:

| Symptom | Solution |

|---|---|

| High MCP server CPU | Add more MCP replicas |

| Slow database queries | Add read replicas |

| High memory on MCP | Check for memory leaks, add replicas |

| Cache misses | Increase Redis memory, tune TTL |

| Slow embeddings | Deploy dedicated embedding service |

Part 10: Lessons Learned & Best Practices

1. Go for Protocol Servers

Go’s performance and type safety provides a good support for AI deployment in production.

2. PostgreSQL Row-Level Security

Database-level tenant isolation is non-negotiable for enterprise. Application-level filtering is too easy to screw up. With RLS, even if your application has a bug, the database enforces isolation.

3. Integration Tests Against Real Databases

Unit tests with mocks didn’t catch the NULL embedding issues. Integration tests did. Test against production-like environments.

4. Optional Langfuse

Making Langfuse optional (try/except imports) lets developers run locally without complex setup while enabling full observability in production.

5. Comprehensive Documentation

Document your design and testing process from day one.

6. Structured Logging

Add structured logging (JSON format):

// ? Structured logging

log.Info().

Str("tenant_id", tenantID).

Str("user_id", userID).

Int("results_count", len(results)).

Float64("duration_ms", duration.Milliseconds()).

Msg("hybrid search completed")

Benefits of structured logging:

- Easy filtering:

jq '.tenant_id == "acme-corp"' logs.json - Metrics extraction:

jq -r '.duration_ms' logs.json | stats - Correlation: Trace requests across services

- Alerting: Monitor error patterns

7. Rate Limiting Per Tenant (Not Global)

Implement per-tenant rate limiting using Redis or other similar frameworks:

// ? Per-tenant rate limiting

type RedisRateLimiter struct {

client *redis.Client

}

func (r *RedisRateLimiter) Allow(ctx context.Context, tenantID string, limit int) (bool, error) {

key := fmt.Sprintf("ratelimit:tenant:%s", tenantID)

pipe := r.client.Pipeline()

incr := pipe.Incr(ctx, key)

pipe.Expire(ctx, key, time.Minute)

_, err := pipe.Exec(ctx)

if err != nil {

return false, err

}

count, err := incr.Result()

if err != nil {

return false, err

}

return count <= int64(limit), nil

}

Why this matters:

- One tenant can’t DoS the system

- Fair resource allocation

- Tiered pricing based on limits

- Tenant-specific SLAs

8. Embedding Generation Service

Ollama works, but a dedicated embedding service (e.g., sentence-transformers FastAPI service) would be:

- Faster: Batch processing

- More reliable: Health checks, retries

- Scalable: Independent scaling

# embeddings-service/app.py (what I should have built)

from fastapi import FastAPI

from sentence_transformers import SentenceTransformer

app = FastAPI()

model = SentenceTransformer('all-MiniLM-L6-v2')

@app.post("/embed")

async def embed(texts: list[str]):

embeddings = model.encode(texts, batch_size=32)

return {"embeddings": embeddings.tolist()}

9. Circuit Breaker Pattern

When Ollama is down, the entire system hangs waiting for embeddings so implement circuit breaker for graceful fallback strategies:

// ? Circuit breaker pattern

type CircuitBreaker struct {

maxFailures int

timeout time.Duration

failures int

lastFail time.Time

state string // "closed", "open", "half-open"

}

func (cb *CircuitBreaker) Call(fn func() error) error {

if cb.state == "open" {

if time.Since(cb.lastFail) > cb.timeout {

cb.state = "half-open"

} else {

return fmt.Errorf("circuit breaker open")

}

}

err := fn()

if err != nil {

cb.failures++

cb.lastFail = time.Now()

if cb.failures >= cb.maxFailures {

cb.state = "open"

}

return err

}

cb.failures = 0

cb.state = "closed"

return nil

}

10. Dual Observability

Use both Langfuse and OpenTelemetry. OTel traces service flow, Langfuse tracks LLM behavior. They complement, not replace each other.

- OpenTelemetry for infrastructure: Trace context propagation across Python ? Go ? Database gave complete visibility into request flow. The

traceparentheader auto-propagation throughrequests/httpxmade it seamless. - Langfuse for LLM calls: Token counts, costs, and prompt tracking. Essential for budget control and debugging LLM behavior.

- Prometheus + Jaeger: Prometheus for metrics dashboards (query “What’s our P95 latency?”), Jaeger for debugging specific slow traces (“Why was this request slow?”).

Testing tip:

# Verify trace propagation

curl -X POST http://localhost:8080/mcp \

-H "Content-Type: application/json" \

-d '{"jsonrpc":"2.0","id":1,"method":"tools/list"}'

# Check Jaeger UI for end-to-end trace

open http://localhost:16686

# Verify metrics

curl http://localhost:9090/api/v1/query \

--data-urlencode 'query=mcp_request_duration_bucket'Production Checklist

Before going live, ensure you have:

Security:

- ? JWT authentication with RSA keys

- ? Row-level security enforced at database

- ? Secrets in environment variables (not hardcoded)

- ? HTTPS/TLS certificates

- ? API key rotation policy

- ? Audit logging for sensitive operations

Scalability:

- ? Stateless servers (can scale horizontally)

- ? Database connection pooling (PgBouncer)

- ? Read replicas for query workloads

- ? Caching layer (Redis)

- ? Load balancer configured

- ? Auto-scaling rules defined

Observability:

- ? Structured logging (JSON format)

- ? Distributed tracing (Jaeger/Zipkin)

- ? Metrics collection (Prometheus)

- ? Dashboards (Grafana)

- ? Alerting rules configured

- ? On-call rotation defined

Reliability:

- ? Health check endpoints (

/health) - ? Graceful shutdown handlers

- ? Rate limiting implemented

- ? Budget enforcement active

- ? Circuit breakers for external services

- ? Backup strategy automated

Testing:

- ? Integration tests passing (95%+ coverage)

- ? Load testing completed

- ? Security testing (pen test)

- ? Disaster recovery tested

- ? Rollback procedure documented

Operations:

- ? Deployment automation (CI/CD)

- ? Monitoring alerts configured

- ? Runbooks for common issues

- ? Incident response plan

- ? Backup and recovery tested

- ? Capacity planning done

Conclusion: MCP + A2A = Production-Grade AI

Here’s what we built:

? MCP Server – Secure, multi-tenant document retrieval (5,000+ req/sec)

? A2A Server – Stateful workflow orchestration with SSE streaming

? LangGraph Workflows – Multi-step RAG and research pipelines

? 200+ Tests – 95% coverage with integration tests against real databases

? Production Ready – Auth, observability, cost tracking, rate limiting, K8s deployment

But here’s the uncomfortable truth: None of this was in the MCP or A2A specifications. The Protocols Are Just 10% of the Work:

MCP defines:

- ? JSON-RPC 2.0 message format

- ? Tool call/response structure

- ? Resource access patterns

A2A defines:

- ? Task lifecycle states

- ? Agent card format

- ? SSE event structure

What they DON’T define:

- ? Authentication and authorization

- ? Multi-tenant isolation

- ? Rate limiting and cost control

- ? Observability and tracing

- ? Circuit breakers and timeouts

- ? Encryption and compliance

- ? Disaster recovery

This is by design—protocols define interfaces, not implementations. But it means every production deployment must solve these problems independently.

Why Default Implementations Are Dangerous

Reference implementations are educational tools, not deployment blueprints. Here’s what’s missing:

# ? Typical MCP tutorial

def handle_request(request):

tool = request["params"]["name"]

args = request["params"]["arguments"]

return execute_tool(tool, args) # No auth, no validation, no limits

// ? Production reality

func (h *MCPHandler) handleToolsCall(ctx context.Context, req *protocol.Request) {

// 1. Authenticate (JWT validation)

// 2. Authorize (check permissions)

// 3. Rate limit (per-tenant quotas)

// 4. Validate input (prevent injection)

// 5. Inject tenant context (RLS)

// 6. Trace request (observability)

// 7. Track cost (budget enforcement)

// 8. Circuit breaker (fail fast)

// 9. Retry logic (handle transients)

// 10. Audit log (compliance)

return h.toolRegistry.Execute(ctx, toolReq.Name, toolReq.Arguments)

}

That’s 10 layers of production concerns. Miss one, and you have a security incident waiting to happen.

Distributed Systems Lessons Apply Here

AI agents are distributed systems. The problems from microservices apply, because agents make autonomous decisions with potentially unbounded costs. From my fault tolerance article, these patterns are essential:

Without timeouts:

embedding = ollama.embed(text) # Ollama down ? hangs forever ? system freezes

With timeouts:

ctx, cancel := context.WithTimeout(ctx, 5*time.Second)

embedding, err := ollama.Embed(ctx, text)

if err != nil {

return db.BM25Search(ctx, query) // Degrade gracefully, skip embeddings

}

Without circuit breakers:

for task in tasks:

result = external_api.call(task) # Fails 1000 times, wastes time/money

With circuit breakers:

if circuitBreaker.IsOpen() {

return cachedResult // Fail fast, don't waste resources

}

Without rate limiting:

Tenant A: 10,000 req/sec ? Database crashes ? ALL tenants down

With rate limiting:

if !rateLimiter.Allow(tenantID) {

return ErrRateLimitExceeded // Other tenants unaffected

}

The Bottom Line

MCP and A2A are excellent protocols. They solve real problems:

- ? MCP standardizes tool execution

- ? A2A standardizes agent coordination

But protocols are not products. Building on MCP/A2A is like building on HTTP—the protocol is solved, but you still need web servers, frameworks, security layers, and monitoring tools.

This repository shows the other 90%:

- Real authentication (not “TODO: add auth”)

- Real multi-tenancy (database RLS, not app filtering)

- Real observability (Langfuse integration, not “we should add logging”)

- Real testing (integration tests, not just mocks)

- Real deployment (K8s manifests, not “works on my laptop”)

Get Started

git clone https://github.com/bhatti/mcp-a2a-go cd mcp-a2a-go docker compose up -d ./scripts/run-integration-tests.sh open http://localhost:8501

Resources

- GitHub: https://github.com/bhatti/mcp-a2a-go

- MCP Spec: https://modelcontextprotocol.info/specification/2024-11-05/

- A2A Protocol: https://a2a-protocol.org/

- Fault Tolerance: My microservices article