Introduction

Kubernetes has revolutionized how we deploy, scale, and manage applications in the cloud. I’ve been using Kubernetes for many years to build scalable, resilient, and maintainable services. However, Kubernetes was primarily designed for stateless applications – services that can scale horizontally. While such a shared-nothing architecture is a must-have for most modern microservices, it presents challenges for use cases such as:

- Stateful/Singleton Processes: Applications that must run as a single instance across a cluster to avoid conflicts, race conditions, or data corruption. Examples include:
  - Legacy applications not designed for distributed operation
  - Batch processors that need exclusive access to resources
  - Job schedulers that must ensure jobs run exactly once
  - Applications with sequential ID generators

- Active/Passive Disaster Recovery: High-availability setups where you need a primary instance running with hot standbys ready to take over instantly if the primary fails.

Traditional Kubernetes primitives like StatefulSets provide stable network identities and ordered deployment but don’t solve the “exactly-one-active” problem. DaemonSets ensure one pod per node, but don’t address the need for a single instance across the entire cluster. This gap led me to develop K8 Highlander – a solution that ensures “there can be only one” active instance of your workloads while maintaining high availability through automatic failover.

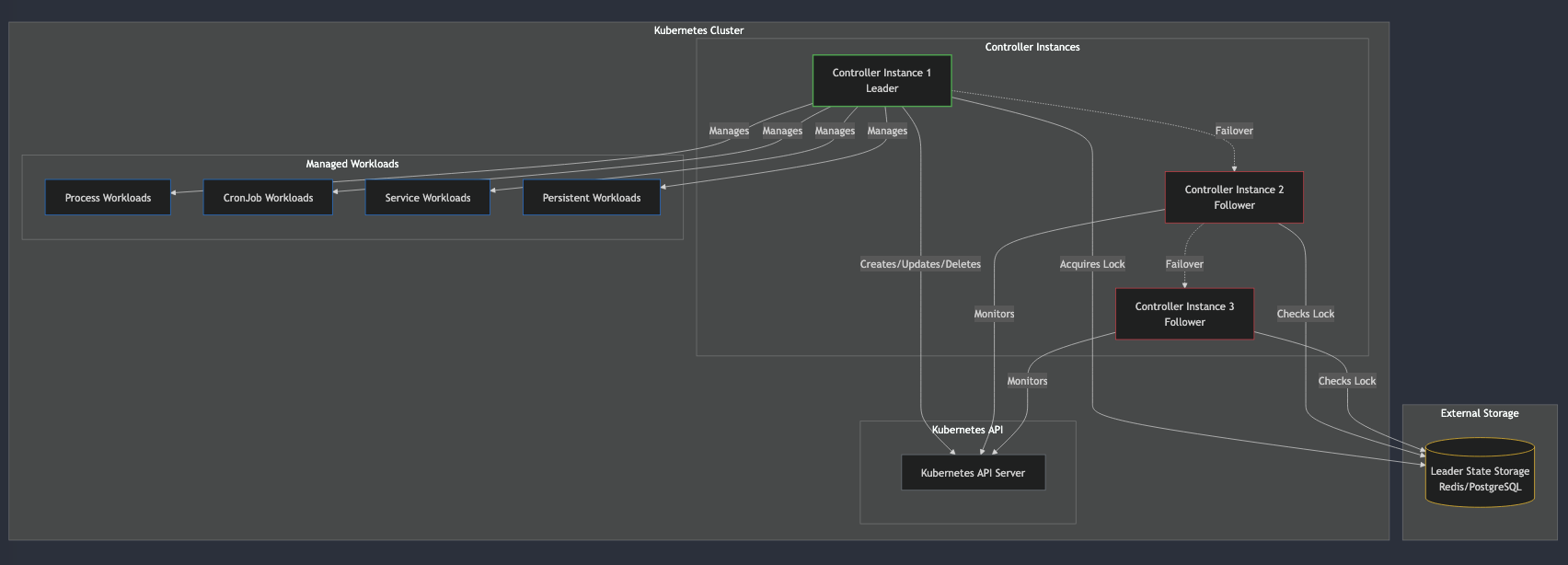

Architecture

K8 Highlander implements distributed leader election to ensure only one controller instance is active at any time, with others ready to take over if the leader fails. The name “Highlander” refers to the tagline of the 1986 movie and the TV series that followed: “There can be only one.”

Core Components

The system consists of several key components:

- Leader Election: Uses distributed locking (via Redis or a database) to ensure only one controller is active at a time. The leader periodically renews its lock, and if it fails, another controller can acquire the lock and take over.

- Workload Manager: Manages different types of workloads in Kubernetes, ensuring they’re running and healthy when this controller is the leader.

- Monitoring Server: Provides real-time metrics and status information about the controller and its workloads.

- HTTP Server: Serves a dashboard and API endpoints for monitoring and management.

How Leader Election Works

The leader election process follows these steps:

- Each controller instance attempts to acquire a distributed lock with a TTL (Time-To-Live)

- Only one instance succeeds and becomes the leader

- The leader periodically renews its lock to maintain leadership

- If the leader fails to renew (due to crash, network issues, etc.), the lock expires

- Another instance acquires the lock and becomes the new leader

- The new leader starts managing workloads

This approach provides high availability while guarding against split-brain scenarios, in which multiple instances might be active simultaneously.
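The election steps above can be sketched as a TTL lease. The following is a minimal, self-contained Python simulation under stated assumptions, not K8 Highlander's actual code: an in-memory `LeaseStore` (a hypothetical name) stands in for Redis, acquisition mirrors the semantics of Redis `SET key value NX PX ttl`, renewal checks the owner first, and time is passed in explicitly so lock expiry can be shown without waiting. The key `highlander:default:leader` used in the test is likewise hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Lease:
    owner: str
    expires_at: float


class LeaseStore:
    """Hypothetical in-memory stand-in for a Redis key holding the leader lock."""

    def __init__(self) -> None:
        self._leases: Dict[str, Lease] = {}

    def _current(self, key: str, now: float) -> Optional[Lease]:
        lease = self._leases.get(key)
        if lease is not None and lease.expires_at <= now:
            # TTL elapsed: the lock no longer exists, as with an expired Redis key.
            del self._leases[key]
            return None
        return lease

    def try_acquire(self, key: str, owner: str, ttl: float, now: float) -> bool:
        """Like SET key owner NX PX ttl: succeeds only if nobody holds the lock."""
        if self._current(key, now) is not None:
            return False
        self._leases[key] = Lease(owner, now + ttl)
        return True

    def renew(self, key: str, owner: str, ttl: float, now: float) -> bool:
        """Extend the TTL only if `owner` still holds the lock."""
        lease = self._current(key, now)
        if lease is None or lease.owner != owner:
            return False
        lease.expires_at = now + ttl
        return True

    def holder(self, key: str, now: float) -> Optional[str]:
        lease = self._current(key, now)
        return lease.owner if lease else None


def election_round(store: LeaseStore, key: str, candidate: str, ttl: float, now: float) -> bool:
    """One tick of a controller's loop: renew if leading, otherwise try to acquire."""
    if store.holder(key, now) == candidate:
        return store.renew(key, candidate, ttl, now)
    return store.try_acquire(key, candidate, ttl, now)
```

Two details matter when the store is real Redis. The renewal interval must be comfortably shorter than the TTL so a healthy leader never lets its lock lapse, and the owner check plus TTL extension must be atomic (for example, a small Lua script); otherwise a slow controller could extend a lock that has already passed to another instance.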

Workload Types

K8 Highlander supports four types of workloads:

- Process Workloads: Single-instance processes running in pods

- CronJob Workloads: Scheduled tasks that run at specific intervals

- Service Workloads: Continuously running services using Deployments

- Persistent Workloads: Stateful applications with persistent storage using StatefulSets

Each workload type is managed to ensure exactly one instance is running across the cluster, with automatic recreation if terminated unexpectedly.
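The rule that only the leader manages workloads, and recreates any that disappear, can be pictured as a reconcile pass. This is a hypothetical Python sketch rather than the project's implementation: `desired` stands for the workloads declared in the configuration, `running` for what the controller observes in the cluster, and the function simply returns the actions a controller would take.

```python
from typing import Dict, List, Set


def reconcile(is_leader: bool, desired: Dict[str, str], running: Set[str]) -> List[str]:
    """Return the actions one controller would take on a single reconcile pass.

    Assumed shapes for this illustration: `desired` maps workload name to its
    type (process, cronjob, service, persistent); `running` holds the names of
    workloads currently observed in the cluster.
    """
    if not is_leader:
        # Followers stay passive so that only one controller touches workloads.
        return []
    actions = []
    for name in sorted(desired):
        if name not in running:
            # Missing (deleted, evicted, crashed): recreate exactly one instance.
            actions.append(f"create {desired[name]} {name}")
    return actions
```

Running this on every tick means a freshly elected leader converges on the declared state without needing to know what the previous leader was doing when it failed.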

Deploying and Using K8 Highlander

Let me walk through how to deploy and use K8 Highlander for your singleton workloads.

Prerequisites

- Kubernetes cluster (v1.16+)

- Redis server or PostgreSQL database for leader state storage

- kubectl configured to access your cluster

Installation Using Docker

The simplest way to install K8 Highlander is using the pre-built Docker image:

```bash
# Create a namespace for k8-highlander
kubectl create namespace k8-highlander

# Create a ConfigMap with your configuration
kubectl create configmap k8-highlander-config \
  --from-file=config.yaml=./config/config.yaml \
  -n k8-highlander

# Deploy k8-highlander
kubectl apply -f - <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: k8-highlander
  namespace: k8-highlander
spec:
  replicas: 2 # Run multiple instances for HA
  selector:
    matchLabels:
      app: k8-highlander
  template:
    metadata:
      labels:
        app: k8-highlander
    spec:
      containers:
        - name: controller
          image: plexobject/k8-highlander:latest
          env:
            - name: HIGHLANDER_REDIS_ADDR
              value: "redis:6379"
            - name: HIGHLANDER_TENANT
              value: "default"
            - name: HIGHLANDER_NAMESPACE
              value: "default"
            - name: CONFIG_PATH
              value: "/etc/k8-highlander/config.yaml"
          ports:
            - containerPort: 8080
              name: http
          volumeMounts:
            - name: config-volume
              mountPath: /etc/k8-highlander
      volumes:
        - name: config-volume
          configMap:
            name: k8-highlander-config
---
apiVersion: v1
kind: Service
metadata:
  name: k8-highlander
  namespace: k8-highlander
spec:
  selector:
    app: k8-highlander
  ports:
    - port: 8080
      targetPort: 8080
EOF
```

This deploys K8 Highlander with your configuration, ensuring high availability with multiple replicas while maintaining the singleton behavior for your workloads.

Using K8 Highlander Locally for Testing

You can also run K8 Highlander locally for testing:

```bash
docker run -d --name k8-highlander \
  -v "$(pwd)/config.yaml:/etc/k8-highlander/config.yaml" \
  -e HIGHLANDER_REDIS_ADDR=redis-host:6379 \
  -p 8080:8080 \
  plexobject/k8-highlander:latest
```

Basic Configuration

K8 Highlander uses a YAML configuration file to define its behavior and workloads. Here’s a simple example:

```yaml
id: "controller-1"
tenant: "default"
port: 8080
namespace: "default"

# Storage configuration
storageType: "redis"
redis:
  addr: "redis:6379"
  password: ""
  db: 0

# Cluster configuration
cluster:
  name: "primary"
  kubeconfig: "" # Uses in-cluster config if empty

# Workloads configuration
workloads:
  # Process workload example
  processes:
    - name: "data-processor"
      image: "mycompany/data-processor:latest"
      script:
        commands:
          - "echo 'Starting data processor'"
          - "/app/process-data.sh"
        shell: "/bin/sh"
      env:
        DB_HOST: "postgres.example.com"
      resources:
        cpuRequest: "200m"
        memoryRequest: "256Mi"
      restartPolicy: "OnFailure"
```
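To make the `script` block concrete, here is one plausible way a process entry could become a Kubernetes container spec: the commands joined into a single `shell -c` invocation, the `env` map turned into name/value pairs, and the resource fields mapped to `resources.requests`. This is a hypothetical Python sketch of that mapping; `container_from_process` is an illustrative name, and K8 Highlander's real translation (for example, how it joins commands) may differ.

```python
from typing import Any, Dict


def container_from_process(proc: Dict[str, Any]) -> Dict[str, Any]:
    """Build a Kubernetes container dict from a process entry (hypothetical mapping)."""
    script = proc["script"]
    shell = script.get("shell", "/bin/sh")
    # Assumption: join with '&&' so a failing step stops the rest of the script.
    joined = " && ".join(script["commands"])
    resources = proc.get("resources", {})
    requests = {}
    if "cpuRequest" in resources:
        requests["cpu"] = resources["cpuRequest"]
    if "memoryRequest" in resources:
        requests["memory"] = resources["memoryRequest"]
    return {
        "name": proc["name"],
        "image": proc["image"],
        "command": [shell, "-c", joined],
        # Kubernetes expects env as a list of {name, value} objects.
        "env": [{"name": k, "value": v} for k, v in proc.get("env", {}).items()],
        "resources": {"requests": requests},
    }
```

Note that `restartPolicy` is not part of the container: in Kubernetes it belongs on the Pod spec that wraps this container.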

Example Workload Configurations

Let’s look at examples for each workload type:

Process Workload

Use this for single-instance processes that need to run continuously:

```yaml
processes:
  - name: "sequential-id-generator"
    image: "mycompany/id-generator:latest"
    script:
      commands:
        - "echo 'Starting ID generator'"
        - "/app/run-id-generator.sh"
      shell: "/bin/sh"
    env:
      DB_HOST: "postgres.example.com"
    resources:
      cpuRequest: "200m"
      memoryRequest: "256Mi"
    restartPolicy: "OnFailure"
```

CronJob Workload

For scheduled tasks that should run exactly once at specified intervals:

```yaml
cronJobs:
  - name: "daily-report"
    schedule: "0 0 * * *" # Daily at midnight
    image: "mycompany/report-generator:latest"
    script:
      commands:
        - "echo 'Generating daily report'"
        - "/app/generate-report.sh"
      shell: "/bin/sh"
    env:
      REPORT_TYPE: "daily"
    restartPolicy: "OnFailure"
```
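For scheduled work, "exactly once" can be reinforced at two layers: only the leader creates the CronJob, and Kubernetes can be told not to overlap runs. The sketch below assumes, without confirming it is what K8 Highlander does, that a `cronJobs` entry maps onto a `batch/v1` CronJob with `concurrencyPolicy: Forbid`, which makes Kubernetes skip a scheduled run while the previous one is still active. `cronjob_manifest` is a hypothetical name.

```python
from typing import Any, Dict


def cronjob_manifest(job: Dict[str, Any], namespace: str) -> Dict[str, Any]:
    """Map a cronJobs entry onto a batch/v1 CronJob (hypothetical mapping)."""
    script = job["script"]
    container = {
        "name": job["name"],
        "image": job["image"],
        "command": [script.get("shell", "/bin/sh"), "-c", " && ".join(script["commands"])],
        "env": [{"name": k, "value": v} for k, v in job.get("env", {}).items()],
    }
    return {
        "apiVersion": "batch/v1",
        "kind": "CronJob",
        "metadata": {"name": job["name"], "namespace": namespace},
        "spec": {
            "schedule": job["schedule"],
            # Skip a scheduled run if the previous run has not finished yet.
            "concurrencyPolicy": "Forbid",
            "jobTemplate": {
                "spec": {
                    "template": {
                        "spec": {
                            "containers": [container],
                            # Pods created by a Job accept only OnFailure or Never.
                            "restartPolicy": job.get("restartPolicy", "OnFailure"),
                        }
                    }
                }
            },
        },
    }
```

Kubernetes' own CronJob documentation notes that a Job is created approximately once per scheduled time and that, in rare cases, a run may be duplicated or missed, so the job script itself should ideally be idempotent.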

Service Workload

For continuously running services that need to be singleton but highly available:

```yaml
services:
  - name: "admin-api"
    image: "mycompany/admin-api:latest"
    replicas: 1
    ports:
      - name: "http"
        containerPort: 8080
        servicePort: 80
    env:
      LOG_LEVEL: "info"
    resources:
      cpuRequest: "100m"
      memoryRequest: "128Mi"
```
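Each `ports` entry carries two numbers: `containerPort`, where the application listens inside the pod, and `servicePort`, which clients connect to. Assuming they map onto a Kubernetes Service in the usual way, `servicePort` becomes the Service `port` and `containerPort` its `targetPort`. A hypothetical Python sketch of that mapping (the `app` selector label is an assumption):

```python
from typing import Any, Dict


def service_manifest(svc: Dict[str, Any], namespace: str) -> Dict[str, Any]:
    """Build a v1 Service for a services entry (hypothetical mapping and labels)."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": svc["name"], "namespace": namespace},
        "spec": {
            # Assumed label: the workload's pods would need to carry it.
            "selector": {"app": svc["name"]},
            "ports": [
                # Clients hit `port`; traffic is forwarded to the pod's `targetPort`.
                {"name": p["name"], "port": p["servicePort"], "targetPort": p["containerPort"]}
                for p in svc.get("ports", [])
            ],
        },
    }
```

With this mapping, other workloads reach `admin-api` on port 80 while the container keeps listening on 8080.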

Persistent Workload

For stateful applications with persistent storage:

```yaml
persistentSets:
  - name: "message-queue"
    image: "ibmcom/mqadvanced-server:latest"
    replicas: 1
    ports:
      - name: "mq"
        containerPort: 1414
        servicePort: 1414
    persistentVolumes:
      - name: "data"
        mountPath: "/var/mqm"
        size: "10Gi"
    env:
      LICENSE: "accept"
      MQ_QMGR_NAME: "QM1"
```
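Each `persistentVolumes` entry needs both a claim and a mount. Assuming it maps onto a StatefulSet in the standard way, it becomes a `volumeClaimTemplates` item requesting `size` of storage plus a `volumeMounts` item at `mountPath` with the same name. The function name and the `ReadWriteOnce` access mode in this Python sketch are assumptions.

```python
from typing import Any, Dict, List, Tuple


def volume_specs(entry: Dict[str, Any]) -> Tuple[List[Dict[str, Any]], List[Dict[str, Any]]]:
    """Return (volumeClaimTemplates, volumeMounts) for a persistentSets entry (hypothetical)."""
    claims, mounts = [], []
    for pv in entry.get("persistentVolumes", []):
        claims.append({
            "metadata": {"name": pv["name"]},
            "spec": {
                # Assumed access mode; ReadWriteOnce suits a single-replica set.
                "accessModes": ["ReadWriteOnce"],
                "resources": {"requests": {"storage": pv["size"]}},
            },
        })
        # The mount name must match the claim template name.
        mounts.append({"name": pv["name"], "mountPath": pv["mountPath"]})
    return claims, mounts
```

Because claims created from StatefulSet templates are not deleted along with their pods, a recreated `message-queue` pod reattaches to the same `data` volume and keeps its queue state.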

High Availability Setup

For production environments, run multiple instances of K8 Highlander to ensure high availability:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: k8-highlander
  namespace: k8-highlander
spec:
  replicas: 3 # Run multiple instances for HA
  selector:
    matchLabels:
      app: k8-highlander
  template:
    metadata:
      labels:
        app: k8-highlander
    spec:
      containers:
        - name: controller
          image: plexobject/k8-highlander:latest
          env:
            - name: HIGHLANDER_REDIS_ADDR
              value: "redis:6379"
            - name: HIGHLANDER_TENANT
              value: "production"
```

API and Monitoring Capabilities

K8 Highlander provides comprehensive monitoring, metrics, and API endpoints for observability and management.

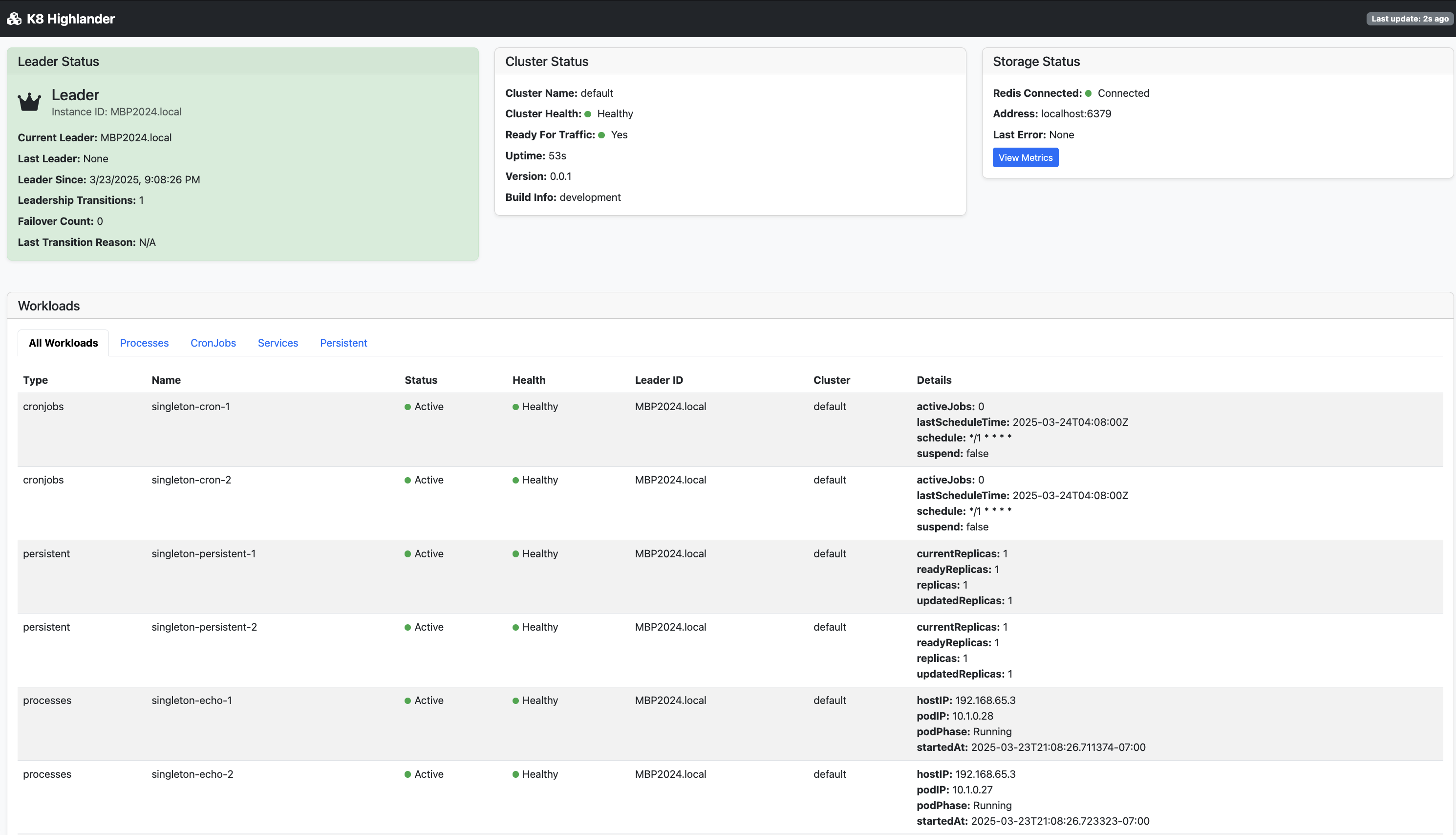

Dashboard

Access the built-in dashboard at http://<controller-address>:8080/ to see the status of the controller and its workloads in real time.

The dashboard shows:

- Current leader status

- Workload health and status

- Redis/database connectivity

- Failover history

- Resource usage

API Endpoints

K8 Highlander exposes several HTTP endpoints for monitoring and integration:

- GET /status: Returns the current status of the controller

- GET /api/workloads: Lists all managed workloads and their status

- GET /api/workloads/{name}: Gets the status of a specific workload

- GET /healthz: Liveness probe endpoint

- GET /readyz: Readiness probe endpoint

Example API response:

```json
{
  "status": "success",
  "data": {
    "isLeader": true,
    "leaderSince": "2023-05-01T12:34:56Z",
    "lastLeaderTransition": "2023-05-01T12:34:56Z",
    "uptime": "1h2m3s",
    "leaderID": "controller-1",
    "workloadStatus": {
      "processes": {
        "data-processor": {
          "active": true,
          "namespace": "default"
        }
      }
    }
  }
}
```
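A monitoring script can read these fields directly. The sketch below uses only the Python standard library and assumes the response shape shown above is what `GET /status` returns; the function names and the `http://localhost:8080` base URL (matching the local Docker example) are assumptions. Parsing is kept separate from fetching so it can be checked without a running controller.

```python
import json
import urllib.request
from typing import Any, Dict, List


def summarize_status(payload: Dict[str, Any]) -> Dict[str, Any]:
    """Extract leadership info and inactive workloads from a status response."""
    data = payload["data"]
    inactive: List[str] = []
    # workloadStatus is grouped by workload type, then by workload name.
    for wtype, workloads in data.get("workloadStatus", {}).items():
        for name, info in workloads.items():
            if not info.get("active", False):
                inactive.append(f"{wtype}/{name}")
    return {"is_leader": data["isLeader"], "leader_id": data["leaderID"], "inactive": inactive}


def fetch_status(base_url: str, timeout: float = 5.0) -> Dict[str, Any]:
    """GET <base_url>/status and decode the JSON body (endpoint/shape pairing assumed)."""
    with urllib.request.urlopen(f"{base_url}/status", timeout=timeout) as resp:
        return json.load(resp)
```

Usage against a local instance would look like `summarize_status(fetch_status("http://localhost:8080"))`.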

Prometheus Metrics

K8 Highlander exposes Prometheus metrics at /metrics for monitoring and alerting:

```text
# HELP k8_highlander_is_leader Indicates if this instance is currently the leader (1) or not (0)
# TYPE k8_highlander_is_leader gauge
k8_highlander_is_leader 1

# HELP k8_highlander_leadership_transitions_total Total number of leadership transitions
# TYPE k8_highlander_leadership_transitions_total counter
k8_highlander_leadership_transitions_total 1

# HELP k8_highlander_workload_status Status of managed workloads (1=active, 0=inactive)
# TYPE k8_highlander_workload_status gauge
k8_highlander_workload_status{name="data-processor",namespace="default",type="process"} 1
```

Key metrics include:

- Leadership status and transitions

- Workload health and status

- Redis/database operations

- Failover events and duration

- System resource usage
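Because each instance exports `k8_highlander_is_leader`, you can check across replicas that exactly one of them reports `1`. The Python sketch below is a deliberately minimal parser for simple sample lines like those shown above (metric name, optional labels, value); it is not a full implementation of the Prometheus text exposition format, which also allows escaped label values and timestamps. In a real setup you would more likely alert in PromQL with something like `sum(k8_highlander_is_leader) != 1`.

```python
import re
from typing import Dict, List, Tuple

# name{labels} value -- labels optional; escapes and timestamps are NOT handled.
_LINE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{([^}]*)\})?\s+(\S+)$')
_LABEL = re.compile(r'(\w+)="([^"]*)"')


def parse_metrics(text: str) -> List[Tuple[str, Dict[str, str], float]]:
    """Parse simple text-format samples, skipping blank and # HELP/# TYPE lines."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        m = _LINE.match(line)
        if m:
            name, labels, value = m.groups()
            samples.append((name, dict(_LABEL.findall(labels or "")), float(value)))
    return samples


def count_leaders(scrapes: List[str]) -> int:
    """Count scraped instances reporting k8_highlander_is_leader == 1."""
    leaders = 0
    for text in scrapes:
        for name, _, value in parse_metrics(text):
            if name == "k8_highlander_is_leader" and value == 1:
                leaders += 1
    return leaders
```

A result other than 1 indicates either no active leader (for example, during a failover window) or a possible split-brain.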

Grafana Dashboard

A Grafana dashboard is available for visualizing K8 Highlander metrics. Import the dashboard from the dashboards directory in the repository.

Advanced Features

Multi-Tenant Support

K8 Highlander supports multi-tenant deployments, where different teams or environments can have their own isolated leader election and workload management:

```yaml
# Tenant A configuration
id: "controller-1"
tenant: "tenant-a"
namespace: "tenant-a"
```

```yaml
# Tenant B configuration
id: "controller-2"
tenant: "tenant-b"
namespace: "tenant-b"
```

Each tenant has its own leader election process, so one controller can be the leader for tenant A while another is the leader for tenant B.
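Conceptually, per-tenant isolation follows from scoping the lock key by tenant, so a controller only contends with peers that share its tenant. This Python sketch assumes a key format of `highlander:<tenant>:leader`, which is hypothetical (the real key naming is not described here), and models the store as an in-memory dictionary with TTL expiry.

```python
from typing import Dict, Tuple


def lock_key(tenant: str) -> str:
    """Hypothetical per-tenant lock key."""
    return f"highlander:{tenant}:leader"


def try_lead(locks: Dict[str, Tuple[str, float]], controller_id: str, tenant: str,
             ttl: float, now: float) -> bool:
    """Acquire or keep the tenant's lock; `locks` maps key -> (owner, expires_at)."""
    key = lock_key(tenant)
    entry = locks.get(key)
    if entry is None or entry[1] <= now or entry[0] == controller_id:
        # Free, expired, or already ours: claim it and push the expiry forward.
        locks[key] = (controller_id, now + ttl)
        return True
    # Held by another controller of the same tenant.
    return False
```

The same reasoning applies to the multi-cluster setup: controllers in different clusters that share a tenant and a store contend for the same key, so only one of them leads.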

Multi-Cluster Deployment

For disaster recovery scenarios, K8 Highlander can be deployed across multiple Kubernetes clusters with a shared Redis or database:

```yaml
# Primary cluster
id: "controller-1"
tenant: "production"
cluster:
  name: "primary"
  kubeconfig: "/path/to/primary-kubeconfig"
```

```yaml
# Secondary cluster
id: "controller-2"
tenant: "production"
cluster:
  name: "secondary"
  kubeconfig: "/path/to/secondary-kubeconfig"
```

If the primary cluster fails, a controller in the secondary cluster can become the leader and take over workload management.

Summary

K8 Highlander fills a critical gap in Kubernetes’ capabilities by providing reliable singleton workload management with automatic failover. It’s ideal for:

- Legacy applications that don’t support horizontal scaling

- Processes that need exclusive access to resources

- Scheduled jobs that should run exactly once

- Active/passive high-availability setups

The solution ensures high availability without sacrificing the “exactly one active” constraint that many applications require. By handling the complexity of leader election and workload management, K8 Highlander allows you to run stateful workloads in Kubernetes with confidence.

Where to Go from Here

- Check out the GitHub repository for the latest code and documentation

- Read the API Reference for detailed endpoint information

- Explore the Configuration Reference for all available options

- Review the Metrics Reference for monitoring capabilities

- See the Deployment Strategies for production deployment patterns

K8 Highlander is an open-source project released under the MIT license, and contributions are welcome! Feel free to submit issues, feature requests, or pull requests to help improve the project.