Introduction

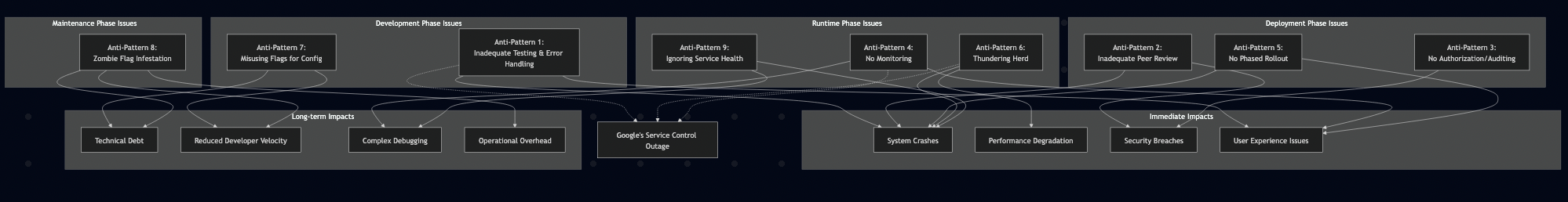

Error handling is often an afterthought in API development, yet it is one of the most critical aspects of a good developer experience. A cryptic error message like { "error": "An error occurred" } can lead to hours of frustrating debugging. In this guide, we will build a robust, production-grade error handling framework for a Go application that serves both gRPC and a REST/HTTP proxy. The framework is based on RFC 9457 (Problem Details for HTTP APIs), which obsoletes RFC 7807.

Tenets

The following are the tenets of a great API error:

- Structured: machine-readable, not just a string.

- Actionable: tells the developer why the error occurred and, if possible, how to fix it.

- Consistent: all errors, from validation to authentication to server faults, follow the same format.

- Secure: never leaks sensitive internal information like stack traces or database schemas.

Our North Star for HTTP errors will be Problem Details for HTTP APIs (RFC 9457, formerly RFC 7807):

    {
      "type": "https://example.com/docs/errors/validation-failed",
      "title": "Validation Failed",
      "status": 400,
      "detail": "The request body failed validation.",
      "instance": "/v1/todos",
      "invalid_params": [
        {
          "field": "title",
          "reason": "must not be empty"
        }
      ]
    }

We will adapt this model for gRPC by embedding a similar structure in the gRPC status details, creating a single source of truth for all errors.

API Design

Let’s start by defining our TODO API in Protocol Buffers:

syntax = "proto3";

package todo.v1;

import "google/api/annotations.proto";

import "google/api/field_behavior.proto";

import "google/api/resource.proto";

import "google/protobuf/timestamp.proto";

import "google/protobuf/field_mask.proto";

import "buf/validate/validate.proto";

option go_package = "github.com/bhatti/todo-api-errors/api/proto/todo/v1;todo";

// TodoService provides task management operations

service TodoService {

// CreateTask creates a new task

rpc CreateTask(CreateTaskRequest) returns (Task) {

option (google.api.http) = {

post: "/v1/tasks"

body: "*"

};

}

// GetTask retrieves a specific task

rpc GetTask(GetTaskRequest) returns (Task) {

option (google.api.http) = {

get: "/v1/{name=tasks/*}"

};

}

// ListTasks retrieves all tasks

rpc ListTasks(ListTasksRequest) returns (ListTasksResponse) {

option (google.api.http) = {

get: "/v1/tasks"

};

}

// UpdateTask updates an existing task

rpc UpdateTask(UpdateTaskRequest) returns (Task) {

option (google.api.http) = {

patch: "/v1/{task.name=tasks/*}"

body: "task"

};

}

// DeleteTask removes a task

rpc DeleteTask(DeleteTaskRequest) returns (DeleteTaskResponse) {

option (google.api.http) = {

delete: "/v1/{name=tasks/*}"

};

}

// BatchCreateTasks creates multiple tasks at once

rpc BatchCreateTasks(BatchCreateTasksRequest) returns (BatchCreateTasksResponse) {

option (google.api.http) = {

post: "/v1/tasks:batchCreate"

body: "*"

};

}

}

// Task represents a TODO item

message Task {

option (google.api.resource) = {

type: "todo.example.com/Task"

pattern: "tasks/{task}"

singular: "task"

plural: "tasks"

};

// Resource name of the task

string name = 1 [

(google.api.field_behavior) = IDENTIFIER,

(google.api.field_behavior) = OUTPUT_ONLY

];

// Task title

string title = 2 [

(google.api.field_behavior) = REQUIRED,

(buf.validate.field).string = {

min_len: 1

max_len: 200

}

];

// Task description

string description = 3 [

(google.api.field_behavior) = OPTIONAL,

(buf.validate.field).string = {

max_len: 1000

}

];

// Task status

Status status = 4 [

(google.api.field_behavior) = REQUIRED

];

// Task priority

Priority priority = 5 [

(google.api.field_behavior) = OPTIONAL

];

// Due date for the task

google.protobuf.Timestamp due_date = 6 [

(google.api.field_behavior) = OPTIONAL,

(buf.validate.field).timestamp = {

gt_now: true

}

];

// Task creation time

google.protobuf.Timestamp create_time = 7 [

(google.api.field_behavior) = OUTPUT_ONLY

];

// Task last update time

google.protobuf.Timestamp update_time = 8 [

(google.api.field_behavior) = OUTPUT_ONLY

];

// User who created the task

string created_by = 9 [

(google.api.field_behavior) = OUTPUT_ONLY

];

// Tags associated with the task

repeated string tags = 10 [

(buf.validate.field).repeated = {

max_items: 10

items: {

string: {

pattern: "^[a-z0-9-]+$"

max_len: 50

}

}

}

];

}

// Task status enumeration

enum Status {

STATUS_UNSPECIFIED = 0;

STATUS_PENDING = 1;

STATUS_IN_PROGRESS = 2;

STATUS_COMPLETED = 3;

STATUS_CANCELLED = 4;

}

// Task priority enumeration

enum Priority {

PRIORITY_UNSPECIFIED = 0;

PRIORITY_LOW = 1;

PRIORITY_MEDIUM = 2;

PRIORITY_HIGH = 3;

PRIORITY_CRITICAL = 4;

}

// CreateTaskRequest message

message CreateTaskRequest {

// Task to create

Task task = 1 [

(google.api.field_behavior) = REQUIRED,

(buf.validate.field).required = true

];

}

// GetTaskRequest message

message GetTaskRequest {

// Resource name of the task

string name = 1 [

(google.api.field_behavior) = REQUIRED,

(google.api.resource_reference) = {

type: "todo.example.com/Task"

},

(buf.validate.field).string = {

pattern: "^tasks/[a-zA-Z0-9-]+$"

}

];

}

// ListTasksRequest message

message ListTasksRequest {

// Maximum number of tasks to return

int32 page_size = 1 [

(buf.validate.field).int32 = {

gte: 0

lte: 1000

}

];

// Page token for pagination

string page_token = 2;

// Filter expression

string filter = 3;

// Order by expression

string order_by = 4;

}

// ListTasksResponse message

message ListTasksResponse {

// List of tasks

repeated Task tasks = 1;

// Token for next page

string next_page_token = 2;

// Total number of tasks

int32 total_size = 3;

}

// UpdateTaskRequest message

message UpdateTaskRequest {

// Task to update

Task task = 1 [

(google.api.field_behavior) = REQUIRED,

(buf.validate.field).required = true

];

// Fields to update

google.protobuf.FieldMask update_mask = 2 [

(google.api.field_behavior) = REQUIRED,

(buf.validate.field).required = true

];

}

// DeleteTaskRequest message

message DeleteTaskRequest {

// Resource name of the task

string name = 1 [

(google.api.field_behavior) = REQUIRED,

(google.api.resource_reference) = {

type: "todo.example.com/Task"

}

];

}

// DeleteTaskResponse message

message DeleteTaskResponse {

// Confirmation message

string message = 1;

}

// BatchCreateTasksRequest message

message BatchCreateTasksRequest {

// Tasks to create

repeated CreateTaskRequest requests = 1 [

(google.api.field_behavior) = REQUIRED,

(buf.validate.field).repeated = {

min_items: 1

max_items: 100

}

];

}

// BatchCreateTasksResponse message

message BatchCreateTasksResponse {

// Created tasks

repeated Task tasks = 1;

}

The structured error payload is defined in a separate proto package, errors.v1:

syntax = "proto3";

package errors.v1;

import "google/protobuf/timestamp.proto";

import "google/protobuf/any.proto";

option go_package = "github.com/bhatti/todo-api-errors/api/proto/errors/v1;errors";

// ErrorDetail provides a structured, machine-readable error payload.

// It is designed to be embedded in the `details` field of a `google.rpc.Status` message.

message ErrorDetail {

// A unique, application-specific error code.

string code = 1;

// A short, human-readable summary of the problem type.

string title = 2;

// A human-readable explanation specific to this occurrence of the problem.

string detail = 3;

// A list of validation errors, useful for INVALID_ARGUMENT responses.

repeated FieldViolation field_violations = 4;

// Optional trace ID for request correlation

string trace_id = 5;

// Optional timestamp when the error occurred

google.protobuf.Timestamp timestamp = 6;

// Optional instance path where the error occurred

string instance = 7;

// Optional extensions for additional error context

map<string, google.protobuf.Any> extensions = 8;

}

// Describes a single validation failure.

message FieldViolation {

// The path to the field that failed validation, e.g., "title".

string field = 1;

// A developer-facing description of the validation rule that failed.

string description = 2;

// Application-specific error code for this validation failure

string code = 3;

}

// AppErrorCode defines a list of standardized, application-specific error codes.

enum AppErrorCode {

APP_ERROR_CODE_UNSPECIFIED = 0;

// Validation failures

VALIDATION_FAILED = 1;

REQUIRED_FIELD = 2;

TOO_SHORT = 3;

TOO_LONG = 4;

INVALID_FORMAT = 5;

MUST_BE_FUTURE = 6;

INVALID_VALUE = 7;

DUPLICATE_TAG = 8;

INVALID_TAG_FORMAT = 9;

OVERDUE_COMPLETION = 10;

EMPTY_BATCH = 11;

BATCH_TOO_LARGE = 12;

DUPLICATE_TITLE = 13;

// Resource errors

RESOURCE_NOT_FOUND = 1001;

RESOURCE_CONFLICT = 1002;

// Authentication and authorization

AUTHENTICATION_FAILED = 2001;

PERMISSION_DENIED = 2002;

// Rate limiting and service availability

RATE_LIMIT_EXCEEDED = 3001;

SERVICE_UNAVAILABLE = 3002;

// Internal errors

INTERNAL_ERROR = 9001;

}

Error Handling Implementation

Now let’s implement our error handling framework:

package errors

import (

"fmt"

errorspb "github.com/bhatti/todo-api-errors/api/proto/errors/v1"

"google.golang.org/genproto/googleapis/rpc/errdetails"

"google.golang.org/grpc/codes"

"google.golang.org/grpc/status"

"google.golang.org/protobuf/types/known/anypb"

"google.golang.org/protobuf/types/known/timestamppb"

)

// AppError is our custom error type using protobuf definitions.

type AppError struct {

GRPCCode codes.Code

AppCode errorspb.AppErrorCode

Title string

Detail string

FieldViolations []*errorspb.FieldViolation

TraceID string

Instance string

Extensions map[string]*anypb.Any

CausedBy error // For internal logging

}

func (e *AppError) Error() string {

return fmt.Sprintf("gRPC Code: %s, App Code: %s, Title: %s, Detail: %s", e.GRPCCode, e.AppCode, e.Title, e.Detail)

}

// ToGRPCStatus converts our AppError into a gRPC status.Status.

func (e *AppError) ToGRPCStatus() *status.Status {

st := status.New(e.GRPCCode, e.Title)

errorDetail := &errorspb.ErrorDetail{

Code: e.AppCode.String(),

Title: e.Title,

Detail: e.Detail,

FieldViolations: e.FieldViolations,

TraceId: e.TraceID,

Timestamp: timestamppb.Now(),

Instance: e.Instance,

Extensions: e.Extensions,

}

// For validation errors, we also attach the standard BadRequest detail

// so that gRPC-Gateway and other standard tools can understand it.

if e.GRPCCode == codes.InvalidArgument && len(e.FieldViolations) > 0 {

br := &errdetails.BadRequest{}

for _, fv := range e.FieldViolations {

br.FieldViolations = append(br.FieldViolations, &errdetails.BadRequest_FieldViolation{

Field: fv.Field,

Description: fv.Description,

})

}

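if withDetails, err := st.WithDetails(br, errorDetail); err == nil {

return withDetails

}

// Attaching details failed: fall back to the bare status rather than a nil one.

return st

}

if withDetails, err := st.WithDetails(errorDetail); err == nil {

return withDetails

}

return st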

}

// Helper functions for creating common errors

func NewValidationFailed(violations []*errorspb.FieldViolation, traceID string) *AppError {

return &AppError{

GRPCCode: codes.InvalidArgument,

AppCode: errorspb.AppErrorCode_VALIDATION_FAILED,

Title: "Validation Failed",

Detail: fmt.Sprintf("The request contains %d validation errors", len(violations)),

FieldViolations: violations,

TraceID: traceID,

}

}

func NewNotFound(resource string, id string, traceID string) *AppError {

return &AppError{

GRPCCode: codes.NotFound,

AppCode: errorspb.AppErrorCode_RESOURCE_NOT_FOUND,

Title: "Resource Not Found",

Detail: fmt.Sprintf("%s with ID '%s' was not found.", resource, id),

TraceID: traceID,

}

}

func NewConflict(resource, reason string, traceID string) *AppError {

return &AppError{

GRPCCode: codes.AlreadyExists,

AppCode: errorspb.AppErrorCode_RESOURCE_CONFLICT,

Title: "Resource Conflict",

Detail: fmt.Sprintf("Conflict creating %s: %s", resource, reason),

TraceID: traceID,

}

}

func NewInternal(message string, traceID string, causedBy error) *AppError {

return &AppError{

GRPCCode: codes.Internal,

AppCode: errorspb.AppErrorCode_INTERNAL_ERROR,

Title: "Internal Server Error",

Detail: message,

TraceID: traceID,

CausedBy: causedBy,

}

}

func NewPermissionDenied(resource, action string, traceID string) *AppError {

return &AppError{

GRPCCode: codes.PermissionDenied,

AppCode: errorspb.AppErrorCode_PERMISSION_DENIED,

Title: "Permission Denied",

Detail: fmt.Sprintf("You don't have permission to %s %s", action, resource),

TraceID: traceID,

}

}

func NewServiceUnavailable(message string, traceID string) *AppError {

return &AppError{

GRPCCode: codes.Unavailable,

AppCode: errorspb.AppErrorCode_SERVICE_UNAVAILABLE,

Title: "Service Unavailable",

Detail: message,

TraceID: traceID,

}

}

func NewRequiredField(field, message string, traceID string) *AppError {

return &AppError{

GRPCCode: codes.InvalidArgument,

AppCode: errorspb.AppErrorCode_VALIDATION_FAILED,

Title: "Validation Failed",

Detail: "The request contains validation errors",

FieldViolations: []*errorspb.FieldViolation{

{

Field: field,

Code: errorspb.AppErrorCode_REQUIRED_FIELD.String(),

Description: message,

},

},

TraceID: traceID,

}

}

Validation Framework
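Returning every problem in one response, rather than stopping at the first, saves the caller a fix-and-retry loop. The idea can be sketched without any validation library: each rule appends to a slice instead of returning early. The `Violation` and `TaskInput` types and the rule set below are simplified assumptions for illustration only.

```go
package main

import (
	"fmt"
	"strings"
)

// Violation is a hypothetical, simplified field violation.
type Violation struct {
	Field       string
	Code        string
	Description string
}

// TaskInput is a hypothetical request shape with two validated fields.
type TaskInput struct {
	Title string
	Tags  []string
}

// ValidateTaskInput evaluates every rule and accumulates all violations.
func ValidateTaskInput(in TaskInput) []Violation {
	var vs []Violation

	if strings.TrimSpace(in.Title) == "" {
		vs = append(vs, Violation{"title", "REQUIRED_FIELD", "title must not be empty"})
	}
	if len(in.Title) > 200 {
		vs = append(vs, Violation{"title", "TOO_LONG", "title must be at most 200 characters"})
	}

	seen := make(map[string]bool)
	for i, tag := range in.Tags {
		if seen[tag] {
			vs = append(vs, Violation{
				Field:       fmt.Sprintf("tags[%d]", i),
				Code:        "DUPLICATE_TAG",
				Description: fmt.Sprintf("tag %q appears multiple times", tag),
			})
		}
		seen[tag] = true
	}
	return vs
}

func main() {
	vs := ValidateTaskInput(TaskInput{Title: "", Tags: []string{"home", "home"}})
	for _, v := range vs {
		fmt.Printf("%s: %s (%s)\n", v.Field, v.Description, v.Code)
	}
}
```

A nil result means the input passed every rule, so callers can simply check `len(vs) > 0`.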

Let’s implement validation that returns all errors at once:

package validation

import (

"errors"

"fmt"

"regexp"

"strings"

"buf.build/gen/go/bufbuild/protovalidate/protocolbuffers/go/buf/validate"

"buf.build/go/protovalidate"

errorspb "github.com/bhatti/todo-api-errors/api/proto/errors/v1"

todopb "github.com/bhatti/todo-api-errors/api/proto/todo/v1"

apperrors "github.com/bhatti/todo-api-errors/internal/errors"

"google.golang.org/protobuf/proto"

)

var pv protovalidate.Validator

func init() {

var err error

pv, err = protovalidate.New()

if err != nil {

panic(fmt.Sprintf("failed to initialize protovalidator: %v", err))

}

}

// ValidateRequest checks a proto message and returns an AppError with all violations.

func ValidateRequest(req proto.Message, traceID string) error {

if err := pv.Validate(req); err != nil {

var validationErrs *protovalidate.ValidationError

if errors.As(err, &validationErrs) {

var violations []*errorspb.FieldViolation

for _, violation := range validationErrs.Violations {

fieldPath := ""

if violation.Proto.GetField() != nil {

fieldPath = formatFieldPath(violation.Proto.GetField())

}

ruleId := violation.Proto.GetRuleId()

message := violation.Proto.GetMessage()

violations = append(violations, &errorspb.FieldViolation{

Field: fieldPath,

Description: message,

Code: mapConstraintToCode(ruleId),

})

}

return apperrors.NewValidationFailed(violations, traceID)

}

return apperrors.NewInternal("Validation failed", traceID, err)

}

return nil

}

// ValidateTask performs additional business logic validation

func ValidateTask(task *todopb.Task, traceID string) error {

var violations []*errorspb.FieldViolation

// Proto validation first

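if err := ValidateRequest(task, traceID); err != nil {

var appErr *apperrors.AppError

if !errors.As(err, &appErr) || len(appErr.FieldViolations) == 0 {

// Not a validation failure (for example, the validator itself failed): do not mask it.

return err

}

violations = append(violations, appErr.FieldViolations...)

}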

// Additional business rules

if task.Status == todopb.Status_STATUS_COMPLETED && task.DueDate != nil {

if task.UpdateTime != nil && task.UpdateTime.AsTime().After(task.DueDate.AsTime()) {

violations = append(violations, &errorspb.FieldViolation{

Field: "due_date",

Code: errorspb.AppErrorCode_OVERDUE_COMPLETION.String(),

Description: "Task was completed after the due date",

})

}

}

// Validate tags format

for i, tag := range task.Tags {

if !isValidTag(tag) {

violations = append(violations, &errorspb.FieldViolation{

Field: fmt.Sprintf("tags[%d]", i),

Code: errorspb.AppErrorCode_INVALID_TAG_FORMAT.String(),

Description: fmt.Sprintf("Tag '%s' must be lowercase letters, numbers, and hyphens only", tag),

})

}

}

// Check for duplicate tags

tagMap := make(map[string]bool)

for i, tag := range task.Tags {

if tagMap[tag] {

violations = append(violations, &errorspb.FieldViolation{

Field: fmt.Sprintf("tags[%d]", i),

Code: errorspb.AppErrorCode_DUPLICATE_TAG.String(),

Description: fmt.Sprintf("Tag '%s' appears multiple times", tag),

})

}

tagMap[tag] = true

}

if len(violations) > 0 {

return apperrors.NewValidationFailed(violations, traceID)

}

return nil

}

// ValidateBatchCreateTasks validates batch operations

func ValidateBatchCreateTasks(req *todopb.BatchCreateTasksRequest, traceID string) error {

var violations []*errorspb.FieldViolation

// Check batch size

if len(req.Requests) == 0 {

violations = append(violations, &errorspb.FieldViolation{

Field: "requests",

Code: errorspb.AppErrorCode_EMPTY_BATCH.String(),

Description: "Batch must contain at least one task",

})

}

if len(req.Requests) > 100 {

violations = append(violations, &errorspb.FieldViolation{

Field: "requests",

Code: errorspb.AppErrorCode_BATCH_TOO_LARGE.String(),

Description: fmt.Sprintf("Batch size %d exceeds maximum of 100", len(req.Requests)),

})

}

// Validate each task

for i, createReq := range req.Requests {

if createReq.Task == nil {

violations = append(violations, &errorspb.FieldViolation{

Field: fmt.Sprintf("requests[%d].task", i),

Code: errorspb.AppErrorCode_REQUIRED_FIELD.String(),

Description: "Task is required",

})

continue

}

// Validate task

if err := ValidateTask(createReq.Task, traceID); err != nil {

if appErr, ok := err.(*apperrors.AppError); ok {

for _, violation := range appErr.FieldViolations {

violation.Field = fmt.Sprintf("requests[%d].task.%s", i, violation.Field)

violations = append(violations, violation)

}

}

}

}

// Check for duplicate titles

titleMap := make(map[string][]int)

for i, createReq := range req.Requests {

if createReq.Task != nil && createReq.Task.Title != "" {

titleMap[createReq.Task.Title] = append(titleMap[createReq.Task.Title], i)

}

}

for title, indices := range titleMap {

if len(indices) > 1 {

for _, idx := range indices {

violations = append(violations, &errorspb.FieldViolation{

Field: fmt.Sprintf("requests[%d].task.title", idx),

Code: errorspb.AppErrorCode_DUPLICATE_TITLE.String(),

Description: fmt.Sprintf("Title '%s' is used by multiple tasks in the batch", title),

})

}

}

}

if len(violations) > 0 {

return apperrors.NewValidationFailed(violations, traceID)

}

return nil

}

// Helper functions

func formatFieldPath(fieldPath *validate.FieldPath) string {

if fieldPath == nil {

return ""

}

// Build field path from elements

var parts []string

for _, element := range fieldPath.GetElements() {

if element.GetFieldName() != "" {

parts = append(parts, element.GetFieldName())

} else if element.GetFieldNumber() != 0 {

parts = append(parts, fmt.Sprintf("field_%d", element.GetFieldNumber()))

}

}

return strings.Join(parts, ".")

}

func mapConstraintToCode(ruleId string) string {

switch {

case strings.Contains(ruleId, "required"):

return errorspb.AppErrorCode_REQUIRED_FIELD.String()

case strings.Contains(ruleId, "min_len"):

return errorspb.AppErrorCode_TOO_SHORT.String()

case strings.Contains(ruleId, "max_len"):

return errorspb.AppErrorCode_TOO_LONG.String()

case strings.Contains(ruleId, "pattern"):

return errorspb.AppErrorCode_INVALID_FORMAT.String()

case strings.Contains(ruleId, "gt_now"):

return errorspb.AppErrorCode_MUST_BE_FUTURE.String()

case ruleId == "":

return errorspb.AppErrorCode_VALIDATION_FAILED.String()

default:

return errorspb.AppErrorCode_INVALID_VALUE.String()

}

}

var validTagPattern = regexp.MustCompile(`^[a-z0-9-]+$`)

func isValidTag(tag string) bool {

return len(tag) <= 50 && validTagPattern.MatchString(tag)

}
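The batch validator above rewrites each nested field path so that a client can tell which item in the batch a violation belongs to. A standalone sketch of that prefixing step follows; the `Violation` type, `validateTitle`, `PrefixViolations`, and `ValidateBatch` are hypothetical names, and the single title rule is an assumption chosen to keep the example short.

```go
package main

import "fmt"

// Violation is a hypothetical, simplified field violation.
type Violation struct {
	Field       string
	Description string
}

// validateTitle is a stand-in for per-item validation.
func validateTitle(title string) []Violation {
	if title == "" {
		return []Violation{{Field: "title", Description: "must not be empty"}}
	}
	return nil
}

// PrefixViolations returns copies of vs whose Field is qualified with the
// position of the item inside the batch, e.g. "requests[1].task.title".
func PrefixViolations(index int, vs []Violation) []Violation {
	out := make([]Violation, 0, len(vs))
	for _, v := range vs { // v is a copy, so the input slice is not modified
		v.Field = fmt.Sprintf("requests[%d].task.%s", index, v.Field)
		out = append(out, v)
	}
	return out
}

// ValidateBatch validates every item and merges the qualified violations.
func ValidateBatch(titles []string) []Violation {
	var all []Violation
	for i, t := range titles {
		all = append(all, PrefixViolations(i, validateTitle(t))...)
	}
	return all
}

func main() {
	for _, v := range ValidateBatch([]string{"Buy milk", "", "Call mom"}) {
		fmt.Printf("%s: %s\n", v.Field, v.Description)
	}
}
```

Working on copies avoids surprising a caller that still holds the per-item violations; the in-place variant is fine when the per-item result is discarded afterwards.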

Error Handler Middleware

Now let’s create middleware to handle errors consistently:

package middleware

import (

"context"

"errors"

"log"

apperrors "github.com/bhatti/todo-api-errors/internal/errors"

"google.golang.org/grpc"

"google.golang.org/grpc/status"

)

// UnaryErrorInterceptor translates application errors into gRPC statuses.

func UnaryErrorInterceptor(ctx context.Context, req interface{}, info *grpc.UnaryServerInfo, handler grpc.UnaryHandler) (interface{}, error) {

resp, err := handler(ctx, req)

if err == nil {

return resp, nil

}

var appErr *apperrors.AppError

if errors.As(err, &appErr) {

if appErr.CausedBy != nil {

log.Printf("ERROR: %s, Original cause: %v", appErr.Title, appErr.CausedBy)

}

return nil, appErr.ToGRPCStatus().Err()

}

if _, ok := status.FromError(err); ok {

return nil, err // Already a gRPC status

}

log.Printf("UNEXPECTED ERROR: %v", err)

return nil, apperrors.NewInternal("An unexpected error occurred", "", err).ToGRPCStatus().Err()

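}

The HTTP side lives in a second file of the same middleware package and renders errors as Problem Details JSON:

package middleware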

import (

"context"

"encoding/json"

"net/http"

"runtime/debug"

"time"

errorspb "github.com/bhatti/todo-api-errors/api/proto/errors/v1"

apperrors "github.com/bhatti/todo-api-errors/internal/errors"

"github.com/google/uuid"

"github.com/grpc-ecosystem/grpc-gateway/v2/runtime"

"go.opentelemetry.io/otel/trace"

"google.golang.org/grpc/status"

"google.golang.org/protobuf/encoding/protojson"

)

// HTTPErrorHandler handles errors for HTTP endpoints

func HTTPErrorHandler(next http.Handler) http.Handler {

return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {

// Add trace ID to context

traceID := r.Header.Get("X-Trace-ID")

if traceID == "" {

traceID = uuid.New().String()

}

ctx := context.WithValue(r.Context(), "traceID", traceID)

r = r.WithContext(ctx)

// Create response wrapper to intercept errors

wrapped := &responseWriter{

ResponseWriter: w,

request: r,

traceID: traceID,

}

// Handle panics

defer func() {

if err := recover(); err != nil {

handlePanic(wrapped, err)

}

}()

// Process request

next.ServeHTTP(wrapped, r)

})

}

// responseWriter wraps http.ResponseWriter to intercept errors

type responseWriter struct {

http.ResponseWriter

request *http.Request

traceID string

statusCode int

written bool

}

func (w *responseWriter) WriteHeader(code int) {

if !w.written {

w.statusCode = code

w.ResponseWriter.WriteHeader(code)

w.written = true

}

}

func (w *responseWriter) Write(b []byte) (int, error) {

if !w.written {

w.WriteHeader(http.StatusOK)

}

return w.ResponseWriter.Write(b)

}

// handlePanic converts panics to proper error responses

func handlePanic(w *responseWriter, recovered interface{}) {

// Log stack trace

debug.PrintStack()

appErr := apperrors.NewInternal("An unexpected error occurred. Please try again later.", w.traceID, nil)

writeErrorResponse(w, appErr)

}

// CustomHTTPError handles gRPC gateway error responses

func CustomHTTPError(ctx context.Context, mux *runtime.ServeMux,

marshaler runtime.Marshaler, w http.ResponseWriter, r *http.Request, err error) {

// Extract trace ID

traceID := r.Header.Get("X-Trace-ID")

if traceID == "" {

if span := trace.SpanFromContext(ctx); span.SpanContext().IsValid() {

traceID = span.SpanContext().TraceID().String()

} else {

traceID = uuid.New().String()

}

}

// Convert gRPC error to HTTP response

st, _ := status.FromError(err)

// Check if we have our custom error detail in status details

for _, detail := range st.Details() {

if errorDetail, ok := detail.(*errorspb.ErrorDetail); ok {

// Update the error detail with current request context

errorDetail.TraceId = traceID

errorDetail.Instance = r.URL.Path

// Convert to JSON and write response

w.Header().Set("Content-Type", "application/problem+json")

w.WriteHeader(runtime.HTTPStatusFromCode(st.Code()))

// Create a simplified JSON response that follows RFC 9457 (formerly RFC 7807)

response := map[string]interface{}{

"type": getTypeForCode(errorDetail.Code),

"title": errorDetail.Title,

"status": runtime.HTTPStatusFromCode(st.Code()),

"detail": errorDetail.Detail,

"instance": errorDetail.Instance,

"traceId": errorDetail.TraceId,

// AsTime yields a time.Time, which encoding/json writes as RFC 3339,

// matching the timestamp produced by writeAppErrorResponse.

"timestamp": errorDetail.Timestamp.AsTime(),

}

// Add field violations if present

if len(errorDetail.FieldViolations) > 0 {

violations := make([]map[string]interface{}, len(errorDetail.FieldViolations))

for i, fv := range errorDetail.FieldViolations {

violations[i] = map[string]interface{}{

"field": fv.Field,

"code": fv.Code,

"message": fv.Description,

}

}

response["errors"] = violations

}

// Add extensions if present

if len(errorDetail.Extensions) > 0 {

extensions := make(map[string]interface{})

for k, v := range errorDetail.Extensions {

// Convert Any to JSON

if jsonBytes, err := protojson.Marshal(v); err == nil {

var jsonData interface{}

if err := json.Unmarshal(jsonBytes, &jsonData); err == nil {

extensions[k] = jsonData

}

}

}

if len(extensions) > 0 {

response["extensions"] = extensions

}

}

if err := json.NewEncoder(w).Encode(response); err != nil {

http.Error(w, `{"error": "Failed to encode error response"}`, 500)

}

return

}

}

// Fallback: create new error response

fallbackErr := apperrors.NewInternal(st.Message(), traceID, nil)

fallbackErr.GRPCCode = st.Code()

writeAppErrorResponse(w, fallbackErr, r.URL.Path)

}

// Helper functions

func getTypeForCode(code string) string {

switch code {

case errorspb.AppErrorCode_VALIDATION_FAILED.String():

return "https://api.example.com/errors/validation-failed"

case errorspb.AppErrorCode_RESOURCE_NOT_FOUND.String():

return "https://api.example.com/errors/resource-not-found"

case errorspb.AppErrorCode_RESOURCE_CONFLICT.String():

return "https://api.example.com/errors/resource-conflict"

case errorspb.AppErrorCode_PERMISSION_DENIED.String():

return "https://api.example.com/errors/permission-denied"

case errorspb.AppErrorCode_INTERNAL_ERROR.String():

return "https://api.example.com/errors/internal-error"

case errorspb.AppErrorCode_SERVICE_UNAVAILABLE.String():

return "https://api.example.com/errors/service-unavailable"

default:

return "https://api.example.com/errors/unknown"

}

}

func writeErrorResponse(w http.ResponseWriter, err error) {

if appErr, ok := err.(*apperrors.AppError); ok {

writeAppErrorResponse(w, appErr, "")

} else {

http.Error(w, err.Error(), http.StatusInternalServerError)

}

}

func writeAppErrorResponse(w http.ResponseWriter, appErr *apperrors.AppError, instance string) {

statusCode := runtime.HTTPStatusFromCode(appErr.GRPCCode)

response := map[string]interface{}{

"type": getTypeForCode(appErr.AppCode.String()),

"title": appErr.Title,

"status": statusCode,

"detail": appErr.Detail,

"traceId": appErr.TraceID,

"timestamp": time.Now(),

}

if instance != "" {

response["instance"] = instance

}

if len(appErr.FieldViolations) > 0 {

violations := make([]map[string]interface{}, len(appErr.FieldViolations))

for i, fv := range appErr.FieldViolations {

violations[i] = map[string]interface{}{

"field": fv.Field,

"code": fv.Code,

"message": fv.Description,

}

}

response["errors"] = violations

}

w.Header().Set("Content-Type", "application/problem+json")

w.WriteHeader(statusCode)

json.NewEncoder(w).Encode(response)

}

Service Implementation

Now let’s implement our TODO service with proper error handling:

package service

import (

"context"

"fmt"

todopb "github.com/bhatti/todo-api-errors/api/proto/todo/v1"

"github.com/bhatti/todo-api-errors/internal/errors"

"github.com/bhatti/todo-api-errors/internal/repository"

"github.com/bhatti/todo-api-errors/internal/validation"

"github.com/google/uuid"

"go.opentelemetry.io/otel"

"go.opentelemetry.io/otel/attribute"

"go.opentelemetry.io/otel/trace"

"google.golang.org/protobuf/types/known/fieldmaskpb"

"google.golang.org/protobuf/types/known/timestamppb"

"strings"

)

var tracer = otel.Tracer("todo-service")

// TodoService implements the TODO API

type TodoService struct {

todopb.UnimplementedTodoServiceServer

repo repository.TodoRepository

}

// NewTodoService creates a new TODO service

func NewTodoService(repo repository.TodoRepository) (*TodoService, error) {

return &TodoService{

repo: repo,

}, nil

}

// CreateTask creates a new task

func (s *TodoService) CreateTask(ctx context.Context, req *todopb.CreateTaskRequest) (*todopb.Task, error) {

ctx, span := tracer.Start(ctx, "CreateTask")

defer span.End()

// Get trace ID for error responses

traceID := span.SpanContext().TraceID().String()

// Validate request

if req.Task == nil {

return nil, errors.NewRequiredField("task", "Task object is required", traceID)

}

// Validate task fields using the new validation package

if err := validation.ValidateTask(req.Task, traceID); err != nil {

span.SetAttributes(attribute.String("validation.error", err.Error()))

return nil, err

}

// Check for duplicate title

existing, err := s.repo.GetTaskByTitle(ctx, req.Task.Title)

if err != nil && !repository.IsNotFound(err) {

span.RecordError(err)

return nil, s.handleRepositoryError(err, traceID)

}

if existing != nil {

return nil, errors.NewConflict("task", "A task with this title already exists", traceID)

}

// Generate task ID

taskID := uuid.New().String()

task := &todopb.Task{

Name: fmt.Sprintf("tasks/%s", taskID),

Title: req.Task.Title,

Description: req.Task.Description,

Status: req.Task.Status,

Priority: req.Task.Priority,

DueDate: req.Task.DueDate,

Tags: req.Task.Tags,

CreateTime: timestamppb.Now(),

UpdateTime: timestamppb.Now(),

CreatedBy: s.getUserFromContext(ctx),

}

// Set defaults

if task.Status == todopb.Status_STATUS_UNSPECIFIED {

task.Status = todopb.Status_STATUS_PENDING

}

if task.Priority == todopb.Priority_PRIORITY_UNSPECIFIED {

task.Priority = todopb.Priority_PRIORITY_MEDIUM

}

// Save to repository

if err := s.repo.CreateTask(ctx, task); err != nil {

span.RecordError(err)

return nil, s.handleRepositoryError(err, traceID)

}

span.SetAttributes(

attribute.String("task.id", taskID),

attribute.String("task.title", task.Title),

)

return task, nil

}

// GetTask retrieves a specific task

func (s *TodoService) GetTask(ctx context.Context, req *todopb.GetTaskRequest) (*todopb.Task, error) {

ctx, span := tracer.Start(ctx, "GetTask")

defer span.End()

traceID := span.SpanContext().TraceID().String()

// Validate request using the new validation package

if err := validation.ValidateRequest(req, traceID); err != nil {

return nil, err

}

// Extract task ID

parts := strings.Split(req.Name, "/")

if len(parts) != 2 || parts[0] != "tasks" {

return nil, errors.NewRequiredField("name", "Task name must be in format 'tasks/{id}'", traceID)

}

taskID := parts[1]

span.SetAttributes(attribute.String("task.id", taskID))

// Get from repository

task, err := s.repo.GetTask(ctx, taskID)

if err != nil {

if repository.IsNotFound(err) {

return nil, errors.NewNotFound("Task", taskID, traceID)

}

span.RecordError(err)

return nil, s.handleRepositoryError(err, traceID)

}

// Check permissions

if !s.canAccessTask(ctx, task) {

return nil, errors.NewPermissionDenied("task", "read", traceID)

}

return task, nil

}

// ListTasks retrieves all tasks

func (s *TodoService) ListTasks(ctx context.Context, req *todopb.ListTasksRequest) (*todopb.ListTasksResponse, error) {

ctx, span := tracer.Start(ctx, "ListTasks")

defer span.End()

traceID := span.SpanContext().TraceID().String()

// Validate request using the new validation package

if err := validation.ValidateRequest(req, traceID); err != nil {

return nil, err

}

// Default page size

pageSize := req.PageSize

if pageSize == 0 {

pageSize = 50

}

if pageSize > 1000 {

pageSize = 1000

}

span.SetAttributes(

attribute.Int("page.size", int(pageSize)),

attribute.String("filter", req.Filter),

)

// Parse filter

filter, err := s.parseFilter(req.Filter)

if err != nil {

return nil, errors.NewRequiredField("filter", fmt.Sprintf("Failed to parse filter: %v", err), traceID)

}

// Get tasks from repository

tasks, nextPageToken, err := s.repo.ListTasks(ctx, repository.ListOptions{

PageSize: int(pageSize),

PageToken: req.PageToken,

Filter: filter,

OrderBy: req.OrderBy,

UserID: s.getUserFromContext(ctx),

})

if err != nil {

span.RecordError(err)

return nil, s.handleRepositoryError(err, traceID)

}

// Get total count

totalSize, err := s.repo.CountTasks(ctx, filter, s.getUserFromContext(ctx))

if err != nil {

// Log but don't fail the request

span.RecordError(err)

totalSize = -1

}

return &todopb.ListTasksResponse{

Tasks: tasks,

NextPageToken: nextPageToken,

TotalSize: int32(totalSize),

}, nil

}

// UpdateTask updates an existing task

func (s *TodoService) UpdateTask(ctx context.Context, req *todopb.UpdateTaskRequest) (*todopb.Task, error) {

ctx, span := tracer.Start(ctx, "UpdateTask")

defer span.End()

traceID := span.SpanContext().TraceID().String()

// Validate request

if req.Task == nil {

return nil, errors.NewRequiredField("task", "Task object is required", traceID)

}

if req.UpdateMask == nil || len(req.UpdateMask.Paths) == 0 {

return nil, errors.NewRequiredField("update_mask", "Update mask must specify which fields to update", traceID)

}

// Extract task ID

parts := strings.Split(req.Task.Name, "/")

if len(parts) != 2 || parts[0] != "tasks" {

return nil, errors.NewRequiredField("task.name", "Invalid task name format", traceID)

}

taskID := parts[1]

span.SetAttributes(attribute.String("task.id", taskID))

// Get existing task

existing, err := s.repo.GetTask(ctx, taskID)

if err != nil {

if repository.IsNotFound(err) {

return nil, errors.NewNotFound("Task", taskID, traceID)

}

span.RecordError(err)

return nil, s.handleRepositoryError(err, traceID)

}

// Check permissions

if !s.canModifyTask(ctx, existing) {

return nil, errors.NewPermissionDenied("task", "update", traceID)

}

// Apply updates based on field mask

updated := s.applyFieldMask(existing, req.Task, req.UpdateMask)

updated.UpdateTime = timestamppb.Now()

// Validate updated task using the new validation package

if err := validation.ValidateTask(updated, traceID); err != nil {

return nil, err

}

// Save to repository

if err := s.repo.UpdateTask(ctx, updated); err != nil {

span.RecordError(err)

return nil, s.handleRepositoryError(err, traceID)

}

return updated, nil

}

// DeleteTask removes a task

func (s *TodoService) DeleteTask(ctx context.Context, req *todopb.DeleteTaskRequest) (*todopb.DeleteTaskResponse, error) {

ctx, span := tracer.Start(ctx, "DeleteTask")

defer span.End()

traceID := span.SpanContext().TraceID().String()

// Validate request using the new validation package

if err := validation.ValidateRequest(req, traceID); err != nil {

return nil, err

}

// Extract task ID

parts := strings.Split(req.Name, "/")

if len(parts) != 2 || parts[0] != "tasks" {

return nil, errors.NewRequiredField("name", "Invalid task name format", traceID)

}

taskID := parts[1]

span.SetAttributes(attribute.String("task.id", taskID))

// Get existing task to check permissions

existing, err := s.repo.GetTask(ctx, taskID)

if err != nil {

if repository.IsNotFound(err) {

return nil, errors.NewNotFound("Task", taskID, traceID)

}

span.RecordError(err)

return nil, s.handleRepositoryError(err, traceID)

}

// Check permissions

if !s.canModifyTask(ctx, existing) {

return nil, errors.NewPermissionDenied("task", "delete", traceID)

}

// Delete from repository

if err := s.repo.DeleteTask(ctx, taskID); err != nil {

span.RecordError(err)

return nil, s.handleRepositoryError(err, traceID)

}

return &todopb.DeleteTaskResponse{

Message: fmt.Sprintf("Task %s deleted successfully", req.Name),

}, nil

}

// BatchCreateTasks creates multiple tasks at once

func (s *TodoService) BatchCreateTasks(ctx context.Context, req *todopb.BatchCreateTasksRequest) (*todopb.BatchCreateTasksResponse, error) {

ctx, span := tracer.Start(ctx, "BatchCreateTasks")

defer span.End()

traceID := span.SpanContext().TraceID().String()

// Validate batch request using the new validation package

if err := validation.ValidateBatchCreateTasks(req, traceID); err != nil {

span.SetAttributes(attribute.String("validation.error", err.Error()))

return nil, err

}

// Process each task

var created []*todopb.Task

var batchErrors []string

for i, createReq := range req.Requests {

task, err := s.CreateTask(ctx, createReq)

if err != nil {

// Collect errors for batch response

batchErrors = append(batchErrors, fmt.Sprintf("Task %d: %s", i, err.Error()))

continue

}

created = append(created, task)

}

// If all tasks failed, return error

if len(created) == 0 && len(batchErrors) > 0 {

return nil, errors.NewInternal("All batch operations failed", traceID, nil)

}

// Return partial success

response := &todopb.BatchCreateTasksResponse{

Tasks: created,

}

// Add partial errors to response metadata if any

if len(batchErrors) > 0 {

span.SetAttributes(

attribute.Int("batch.total", len(req.Requests)),

attribute.Int("batch.success", len(created)),

attribute.Int("batch.failed", len(batchErrors)),

)

}

return response, nil

}

// Helper methods

func (s *TodoService) handleRepositoryError(err error, traceID string) error {

if repository.IsConnectionError(err) {

return errors.NewServiceUnavailable("Unable to connect to the database. Please try again later.", traceID)

}

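// Log internal error details. NOTE: context.Background() carries no active

// span, so SpanFromContext returns a no-op span here and RecordError has no

// effect; callers should record the error on their own span.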

span := trace.SpanFromContext(context.Background())

if span != nil {

span.RecordError(err)

}

return errors.NewInternal("An unexpected error occurred while processing your request", traceID, err)

}

func (s *TodoService) getUserFromContext(ctx context.Context) string {

// In a real implementation, this would extract user info from auth context

if user, ok := ctx.Value("user").(string); ok {

return user

}

return "anonymous"

}

func (s *TodoService) canAccessTask(ctx context.Context, task *todopb.Task) bool {

// In a real implementation, check if user can access this task

user := s.getUserFromContext(ctx)

return user == task.CreatedBy || user == "admin"

}

func (s *TodoService) canModifyTask(ctx context.Context, task *todopb.Task) bool {

// In a real implementation, check if user can modify this task

user := s.getUserFromContext(ctx)

return user == task.CreatedBy || user == "admin"

}

func (s *TodoService) parseFilter(filter string) (map[string]interface{}, error) {

// Simple filter parser - in production, use a proper parser

parsed := make(map[string]interface{})

if filter == "" {

return parsed, nil

}

// Example: "status=COMPLETED AND priority=HIGH"

parts := strings.Split(filter, " AND ")

for _, part := range parts {

kv := strings.Split(strings.TrimSpace(part), "=")

if len(kv) != 2 {

return nil, fmt.Errorf("invalid filter expression: %s", part)

}

key := strings.TrimSpace(kv[0])

value := strings.Trim(strings.TrimSpace(kv[1]), "'\"")

// Validate filter keys

switch key {

case "status", "priority", "created_by":

parsed[key] = value

default:

return nil, fmt.Errorf("unknown filter field: %s", key)

}

}

return parsed, nil

}

func (s *TodoService) applyFieldMask(existing, update *todopb.Task, mask *fieldmaskpb.FieldMask) *todopb.Task {

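// NOTE: copying a generated protobuf struct by value also copies its internal

// state (go vet flags this); proto.Clone is the safer way to copy a message.

result := *existing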

for _, path := range mask.Paths {

switch path {

case "title":

result.Title = update.Title

case "description":

result.Description = update.Description

case "status":

result.Status = update.Status

case "priority":

result.Priority = update.Priority

case "due_date":

result.DueDate = update.DueDate

case "tags":

result.Tags = update.Tags

}

}

return &result

}

Server Implementation

Now let’s put it all together in our server:

package main

import (

"context"

"fmt"

"log"

"net"

"net/http"

"os"

"os/signal"

"syscall"

"time"

todopb "github.com/bhatti/todo-api-errors/api/proto/todo/v1"

"github.com/bhatti/todo-api-errors/internal/middleware"

"github.com/bhatti/todo-api-errors/internal/monitoring"

"github.com/bhatti/todo-api-errors/internal/repository"

"github.com/bhatti/todo-api-errors/internal/service"

"github.com/grpc-ecosystem/grpc-gateway/v2/runtime"

"github.com/prometheus/client_golang/prometheus/promhttp"

"google.golang.org/grpc"

"google.golang.org/grpc/codes"

"google.golang.org/grpc/credentials/insecure"

"google.golang.org/grpc/reflection"

"google.golang.org/grpc/status"

"google.golang.org/protobuf/encoding/protojson"

)

func main() {

// Initialize monitoring

if err := monitoring.InitOpenTelemetryMetrics(); err != nil {

log.Printf("Failed to initialize OpenTelemetry metrics: %v", err)

// Continue without OpenTelemetry - Prometheus will still work

}

// Initialize repository

repo := repository.NewInMemoryRepository()

// Initialize service

todoService, err := service.NewTodoService(repo)

if err != nil {

log.Fatalf("Failed to create service: %v", err)

}

// Start gRPC server

grpcPort := ":50051"

go func() {

if err := startGRPCServer(grpcPort, todoService); err != nil {

log.Fatalf("Failed to start gRPC server: %v", err)

}

}()

// Start HTTP gateway

httpPort := ":8080"

go func() {

if err := startHTTPGateway(httpPort, grpcPort); err != nil {

log.Fatalf("Failed to start HTTP gateway: %v", err)

}

}()

// Start metrics server

go func() {

http.Handle("/metrics", promhttp.Handler())

if err := http.ListenAndServe(":9090", nil); err != nil {

log.Printf("Failed to start metrics server: %v", err)

}

}()

log.Printf("TODO API server started")

log.Printf("gRPC server listening on %s", grpcPort)

log.Printf("HTTP gateway listening on %s", httpPort)

log.Printf("Metrics available at :9090/metrics")

// Wait for interrupt signal

sigCh := make(chan os.Signal, 1)

signal.Notify(sigCh, syscall.SIGINT, syscall.SIGTERM)

<-sigCh

log.Println("Shutting down...")

}

func startGRPCServer(port string, todoService todopb.TodoServiceServer) error {

lis, err := net.Listen("tcp", port)

if err != nil {

return fmt.Errorf("failed to listen: %w", err)

}

// Create gRPC server with interceptors - now using the new UnaryErrorInterceptor

opts := []grpc.ServerOption{

grpc.ChainUnaryInterceptor(

middleware.UnaryErrorInterceptor, // Using new protobuf-based error interceptor

loggingInterceptor(),

recoveryInterceptor(),

),

}

server := grpc.NewServer(opts...)

// Register service

todopb.RegisterTodoServiceServer(server, todoService)

// Register reflection for debugging

reflection.Register(server)

return server.Serve(lis)

}

func startHTTPGateway(httpPort, grpcPort string) error {

ctx := context.Background()

// Create gRPC connection

conn, err := grpc.DialContext(

ctx,

"localhost"+grpcPort,

grpc.WithTransportCredentials(insecure.NewCredentials()),

)

if err != nil {

return fmt.Errorf("failed to dial gRPC server: %w", err)

}

// Create gateway mux with custom error handler

mux := runtime.NewServeMux(

runtime.WithErrorHandler(middleware.CustomHTTPError), // Using new protobuf-based error handler

runtime.WithMarshalerOption(runtime.MIMEWildcard, &runtime.JSONPb{

MarshalOptions: protojson.MarshalOptions{

UseProtoNames: true,

EmitUnpopulated: false,

},

UnmarshalOptions: protojson.UnmarshalOptions{

DiscardUnknown: true,

},

}),

)

// Register service handler

if err := todopb.RegisterTodoServiceHandler(ctx, mux, conn); err != nil {

return fmt.Errorf("failed to register service handler: %w", err)

}

// Create HTTP server with middleware

handler := middleware.HTTPErrorHandler( // Using new protobuf-based HTTP error handler

corsMiddleware(

authMiddleware(

loggingHTTPMiddleware(mux),

),

),

)

server := &http.Server{

Addr: httpPort,

Handler: handler,

ReadTimeout: 10 * time.Second,

WriteTimeout: 10 * time.Second,

IdleTimeout: 120 * time.Second,

}

return server.ListenAndServe()

}

// Middleware implementations

func loggingInterceptor() grpc.UnaryServerInterceptor {

return func(ctx context.Context, req interface{}, info *grpc.UnaryServerInfo, handler grpc.UnaryHandler) (interface{}, error) {

start := time.Now()

// Call handler

resp, err := handler(ctx, req)

// Log request

duration := time.Since(start)

statusCode := "OK"

if err != nil {

statusCode = status.Code(err).String()

}

log.Printf("gRPC: %s %s %s %v", info.FullMethod, statusCode, duration, err)

return resp, err

}

}

func recoveryInterceptor() grpc.UnaryServerInterceptor {

return func(ctx context.Context, req interface{}, info *grpc.UnaryServerInfo, handler grpc.UnaryHandler) (resp interface{}, err error) {

defer func() {

if r := recover(); r != nil {

log.Printf("Recovered from panic: %v", r)

monitoring.RecordPanicRecovery(ctx)

err = status.Error(codes.Internal, "Internal server error")

}

}()

return handler(ctx, req)

}

}

func loggingHTTPMiddleware(next http.Handler) http.Handler {

return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {

start := time.Now()

// Wrap response writer to capture status

wrapped := &statusResponseWriter{ResponseWriter: w, statusCode: http.StatusOK}

// Process request

next.ServeHTTP(wrapped, r)

// Log request

duration := time.Since(start)

log.Printf("HTTP: %s %s %d %v", r.Method, r.URL.Path, wrapped.statusCode, duration)

})

}

func corsMiddleware(next http.Handler) http.Handler {

return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {

w.Header().Set("Access-Control-Allow-Origin", "*")

w.Header().Set("Access-Control-Allow-Methods", "GET, POST, PUT, DELETE, OPTIONS, PATCH")

w.Header().Set("Access-Control-Allow-Headers", "Content-Type, Authorization, X-Trace-ID")

if r.Method == "OPTIONS" {

w.WriteHeader(http.StatusOK)

return

}

next.ServeHTTP(w, r)

})

}

func authMiddleware(next http.Handler) http.Handler {

return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {

// Simple auth for demo - in production use proper authentication

authHeader := r.Header.Get("Authorization")

if authHeader == "" {

authHeader = "Bearer anonymous"

}

// Extract user from token

user := "anonymous"

if len(authHeader) > 7 && authHeader[:7] == "Bearer " {

user = authHeader[7:]

}

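// Add user to the HTTP request context. NOTE: context values do not cross the

// gateway's gRPC connection; forward the user as gRPC metadata (for example

// via runtime.WithMetadata) if the service must see it.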

ctx := context.WithValue(r.Context(), "user", user)

next.ServeHTTP(w, r.WithContext(ctx))

})

}

type statusResponseWriter struct {

http.ResponseWriter

statusCode int

}

func (w *statusResponseWriter) WriteHeader(code int) {

w.statusCode = code

w.ResponseWriter.WriteHeader(code)

}

Example API Usage

Let’s see our error handling in action with some example requests:

Example 1: Validation Error with Multiple Issues

Request with multiple validation errors

curl -X POST http://localhost:8080/v1/tasks \

-H "Content-Type: application/json" \

-d '{

"task": {

"title": "",

"description": "This description is wayyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyy too long…",

"status": "INVALID_STATUS",

"tags": ["INVALID TAG", "tag-1", "tag-1"]

}

}'

Response

< HTTP/1.1 422 Unprocessable Entity

< Content-Type: application/problem+json

{

"detail": "The request contains 5 validation errors",

"errors": [

{

"code": "TOO_SHORT",

"field": "title",

"message": "value length must be at least 1 characters"

},

{

"code": "TOO_LONG",

"field": "description",

"message": "value length must be at most 100 characters"

},

{

"code": "INVALID_FORMAT",

"field": "tags",

"message": "value does not match regex pattern `^[a-z0-9-]+$`"

},

{

"code": "INVALID_TAG_FORMAT",

"field": "tags[0]",

"message": "Tag 'INVALID TAG' must be lowercase letters, numbers, and hyphens only"

},

{

"code": "DUPLICATE_TAG",

"field": "tags[2]",

"message": "Tag 'tag-1' appears multiple times"

}

],

"instance": "/v1/tasks",

"status": 400,

"timestamp": {

"seconds": 1755288524,

"nanos": 484865000

},

"title": "Validation Failed",

"traceId": "eb4bfb3f-9397-4547-8618-ce9952a16067",

"type": "https://api.example.com/errors/validation-failed"

}

Example 2: Not Found Error

Request for non-existent task

curl http://localhost:8080/v1/tasks/non-existent-id

Response

< HTTP/1.1 404 Not Found

< Content-Type: application/problem+json

{

"detail": "Task with ID 'non-existent-id' was not found.",

"instance": "/v1/tasks/non-existent-id",

"status": 404,

"timestamp": {

"seconds": 1755288565,

"nanos": 904607000

},

"title": "Resource Not Found",

"traceId": "6ce00cd8-d0b7-47f1-b6f6-9fc1375c26a4",

"type": "https://api.example.com/errors/resource-not-found"

}

Example 3: Conflict Error

curl -X POST http://localhost:8080/v1/tasks \

-H "Content-Type: application/json" \

-d '{

"task": {

"title": "Existing Task Title"

}

}'

curl -X POST http://localhost:8080/v1/tasks \

-H "Content-Type: application/json" \

-d '{

"task": {

"title": "Existing Task Title"

}

}'

Response

< HTTP/1.1 409 Conflict

< Content-Type: application/problem+json

{

"detail": "Conflict creating task: A task with this title already exists",

"instance": "/v1/tasks",

"status": 409,

"timestamp": {

"seconds": 1755288593,

"nanos": 594458000

},

"title": "Resource Conflict",

"traceId": "ed2e78d2-591d-492a-8d71-6b6843ce86f7",

"type": "https://api.example.com/errors/resource-conflict"

}

Example 4: Service Unavailable (Transient Error)

When the database is down

curl http://localhost:8080/v1/tasks

Response

HTTP/1.1 503 Service Unavailable

Content-Type: application/problem+json

Retry-After: 30

{

"type": "https://api.example.com/errors/service-unavailable",

"title": "Service Unavailable",

"status": 503,

"detail": "Database connection pool exhausted. Please try again later.",

"instance": "/v1/tasks",

"traceId": "db-pool-001",

"timestamp": "2025-08-15T10:30:00Z",

"extensions": {

"retryable": true,

"retryAfter": "2025-08-15T10:30:30Z",

"maxRetries": 3,

"backoffType": "exponential",

"backoffMs": 1000,

"errorCategory": "database"

}

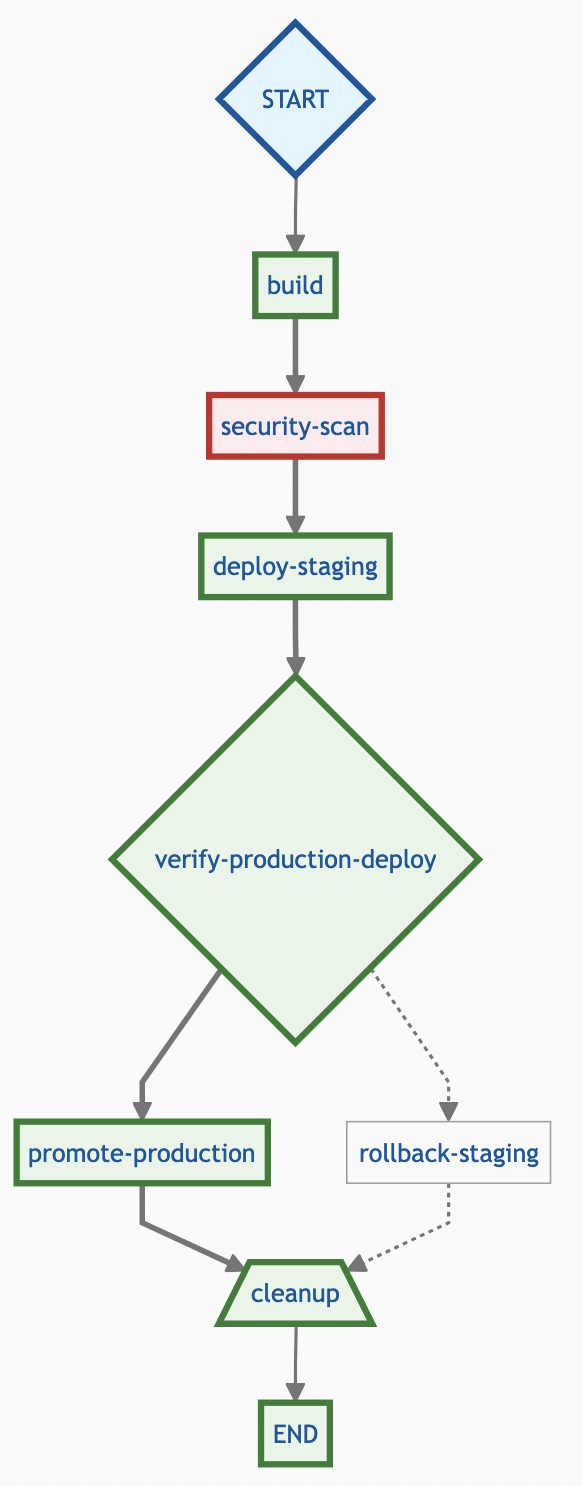

}Best Practices Summary

Our implementation demonstrates several key best practices:

1. Consistent Error Format

All errors follow RFC 9457 (Problem Details) format, providing:

- Machine-readable type URIs

- Human-readable titles and details

- HTTP status codes

- Request tracing

- Extensible metadata
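A consistent format means a client needs only one decoder for every error. The following is a minimal sketch of such a decoder, assuming the JSON field names shown in the example responses (`type`, `title`, `status`, `detail`, `instance`, `traceId`, `errors`). The `Problem` and `FieldError` types and the `ParseProblem` function are illustrative names, not part of the project's code.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// FieldError mirrors one entry of the "errors" array in the example responses.
type FieldError struct {
	Code    string `json:"code"`
	Field   string `json:"field"`
	Message string `json:"message"`
}

// Problem is a hypothetical client-side view of a problem+json body.
// Fields not listed here (such as "timestamp") are ignored when decoding.
type Problem struct {
	Type     string       `json:"type"`
	Title    string       `json:"title"`
	Status   int          `json:"status"`
	Detail   string       `json:"detail"`
	Instance string       `json:"instance"`
	TraceID  string       `json:"traceId"`
	Errors   []FieldError `json:"errors,omitempty"`
}

// ParseProblem decodes a problem+json payload into a Problem.
func ParseProblem(body []byte) (*Problem, error) {
	var p Problem
	if err := json.Unmarshal(body, &p); err != nil {
		return nil, fmt.Errorf("decode problem details: %w", err)
	}
	return &p, nil
}

func main() {
	body := []byte(`{
		"type": "https://api.example.com/errors/resource-not-found",
		"title": "Resource Not Found",
		"status": 404,
		"detail": "Task with ID 'non-existent-id' was not found.",
		"instance": "/v1/tasks/non-existent-id",
		"traceId": "6ce00cd8-d0b7-47f1-b6f6-9fc1375c26a4"
	}`)
	p, err := ParseProblem(body)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("%d %s (trace %s)\n", p.Status, p.Title, p.TraceID)
}
```

Because the `type` URI is stable, a client can switch on `p.Type` rather than matching on human-readable text.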

2. Comprehensive Validation

- All validation errors are returned at once, not one by one

- Clear field paths for nested objects

- Descriptive error codes and messages

- Support for batch operations with partial success
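Returning every validation error at once comes down to accumulating violations instead of returning on the first failure. Here is a small sketch of that pattern, assuming the tag rules from Example 1. `FieldViolation` and `CollectViolations` are hypothetical names used only for illustration; the project's own validation package may be structured differently.

```go
package main

import (
	"fmt"
	"regexp"
)

// FieldViolation is a hypothetical record of one failed validation rule.
type FieldViolation struct {
	Field   string
	Code    string
	Message string
}

// tagPattern matches the tag format shown in Example 1.
var tagPattern = regexp.MustCompile(`^[a-z0-9-]+$`)

// CollectViolations checks every rule and accumulates all failures,
// rather than stopping at the first one.
func CollectViolations(title string, tags []string) []FieldViolation {
	var out []FieldViolation
	if title == "" {
		out = append(out, FieldViolation{
			Field:   "title",
			Code:    "TOO_SHORT",
			Message: "title must not be empty",
		})
	}
	seen := make(map[string]bool)
	for i, tag := range tags {
		field := fmt.Sprintf("tags[%d]", i) // index-based path for repeated fields
		if !tagPattern.MatchString(tag) {
			out = append(out, FieldViolation{
				Field:   field,
				Code:    "INVALID_TAG_FORMAT",
				Message: fmt.Sprintf("tag %q must be lowercase letters, numbers, and hyphens only", tag),
			})
		}
		if seen[tag] {
			out = append(out, FieldViolation{
				Field:   field,
				Code:    "DUPLICATE_TAG",
				Message: fmt.Sprintf("tag %q appears multiple times", tag),
			})
		}
		seen[tag] = true
	}
	return out
}

func main() {
	for _, v := range CollectViolations("", []string{"INVALID TAG", "tag-1", "tag-1"}) {
		fmt.Printf("%s: %s (%s)\n", v.Field, v.Message, v.Code)
	}
}
```

The caller can then attach the whole slice to a single error response, so the client fixes all problems in one round trip.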

3. Security-Conscious Design

- No sensitive information in error messages

- Internal errors are logged but not exposed

- Generic messages for authentication failures

- Request IDs for support without exposing internals
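The split between what is logged and what is returned can be sketched in a few lines. This example assumes a hypothetical `PublicError` type and `Sanitize` helper (neither is taken from the project): the internal cause goes to the log together with the trace ID, while the client receives only a generic message and the same trace ID.

```go
package main

import (
	"errors"
	"fmt"
	"log"
)

// PublicError is a hypothetical client-facing error with no internal details.
type PublicError struct {
	Title   string
	Detail  string
	TraceID string
}

// Error implements the error interface.
func (e *PublicError) Error() string { return e.Title + ": " + e.Detail }

// Sanitize logs the internal cause alongside the trace ID and returns a
// generic error that is safe to send to clients.
func Sanitize(cause error, traceID string) *PublicError {
	log.Printf("trace=%s internal error: %v", traceID, cause)
	return &PublicError{
		Title:   "Internal Server Error",
		Detail:  "An unexpected error occurred while processing your request",
		TraceID: traceID,
	}
}

func main() {
	// The cause text stands in for a leaky driver message; it is made up.
	cause := errors.New(`relation "tasks" does not exist`)
	pub := Sanitize(cause, "trace-123")
	fmt.Println(pub.Error())
	fmt.Println("trace:", pub.TraceID)
}
```

Support staff can look up the trace ID in the logs to find the real cause without the response ever exposing it.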

4. Developer Experience

- Clear, actionable error messages

- Helpful suggestions for fixing issues

- Consistent error codes across protocols

- Rich metadata for debugging
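Example 4's `Retry-After` header is one such actionable hint. As a rough sketch of how a client might use it, the helper below prefers the server-provided delay and otherwise falls back to exponential backoff. `RetryDelay` and `ShouldRetry` are hypothetical helpers, and only the delay-in-seconds form of `Retry-After` is handled here, not the HTTP-date form.

```go
package main

import (
	"fmt"
	"net/http"
	"strconv"
	"time"
)

// RetryDelay returns how long to wait before retry number attempt (0-based).
// It prefers a Retry-After value given in whole seconds; otherwise it falls
// back to exponential backoff: base * 2^attempt.
func RetryDelay(retryAfter string, attempt int, base time.Duration) time.Duration {
	if secs, err := strconv.Atoi(retryAfter); err == nil && secs >= 0 {
		return time.Duration(secs) * time.Second
	}
	if attempt < 0 {
		attempt = 0
	}
	return base << uint(attempt)
}

// ShouldRetry reports whether an HTTP status signals a transient failure.
func ShouldRetry(status int) bool {
	return status == http.StatusServiceUnavailable ||
		status == http.StatusTooManyRequests
}

func main() {
	h := http.Header{}
	h.Set("Retry-After", "30")

	if ShouldRetry(http.StatusServiceUnavailable) {
		fmt.Println("server hint:", RetryDelay(h.Get("Retry-After"), 0, time.Second))
	}
	// No header present: fall back to backoff for the third attempt.
	fmt.Println("backoff:", RetryDelay("", 2, time.Second))
}
```

A production client would usually also cap the delay and add jitter so that many clients do not retry in lockstep.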

5. Protocol Compatibility

- Seamless translation between gRPC and HTTP

- Proper status code mapping

- Preservation of error details across protocols
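Status code mapping is essentially a lookup table. The sketch below uses a local `Code` type as a stand-in for `codes.Code` from `google.golang.org/grpc/codes` so that it has no external dependencies; the numeric values match the real gRPC codes, and the mapping follows the conventional gRPC-to-HTTP table. The `HTTPStatus` name is illustrative. In real gateway code, grpc-gateway's `runtime.HTTPStatusFromCode` provides a default mapping, and a project may override individual entries.

```go
package main

import (
	"fmt"
	"net/http"
)

// Code is a local stand-in for grpc codes.Code (an assumption made so this
// sketch compiles with the standard library only).
type Code int

// A subset of gRPC status codes with their canonical numeric values.
const (
	OK                Code = 0
	InvalidArgument   Code = 3
	DeadlineExceeded  Code = 4
	NotFound          Code = 5
	AlreadyExists     Code = 6
	PermissionDenied  Code = 7
	ResourceExhausted Code = 8
	Internal          Code = 13
	Unavailable       Code = 14
	Unauthenticated   Code = 16
)

// HTTPStatus maps a gRPC code to an HTTP status. Unknown codes map to 500.
func HTTPStatus(c Code) int {
	switch c {
	case OK:
		return http.StatusOK
	case InvalidArgument:
		return http.StatusBadRequest
	case DeadlineExceeded:
		return http.StatusGatewayTimeout
	case NotFound:
		return http.StatusNotFound
	case AlreadyExists:
		return http.StatusConflict
	case PermissionDenied:
		return http.StatusForbidden
	case ResourceExhausted:
		return http.StatusTooManyRequests
	case Unavailable:
		return http.StatusServiceUnavailable
	case Unauthenticated:
		return http.StatusUnauthorized
	default:
		return http.StatusInternalServerError
	}
}

func main() {
	for _, c := range []Code{NotFound, AlreadyExists, Unavailable, Internal} {
		fmt.Printf("gRPC code %d -> HTTP %d\n", c, HTTPStatus(c))
	}
}
```

Keeping the table in one function gives both the gRPC interceptor and the HTTP error handler a single source of truth.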

6. Observability

- Structured logging with trace IDs

- Prometheus metrics for monitoring

- OpenTelemetry integration

- Error categorization for analysis

Conclusion

This comprehensive guide demonstrates how to build robust error handling for modern APIs. By treating errors as a first-class feature of our API, we’ve achieved several key benefits:

- Consistency: All errors, regardless of their source, are presented to clients in a predictable format.

- Clarity: Developers consuming our API get clear, actionable feedback, helping them debug and integrate faster.

- Developer Ergonomics: Our internal service code is cleaner, as handlers focus on business logic while the middleware handles the boilerplate of error conversion.

- Security: We have a clear separation between internal error details (for logging) and public error responses, preventing leaks.

Additional Resources

- RFC 9457: Problem Details for HTTP APIs

- Google API Improvement Proposals (AIPs)

- gRPC Error Handling Best Practices

- OpenTelemetry Error Tracking

You can find the full source code for this example in the GitHub repository (github.com/bhatti/todo-api-errors).