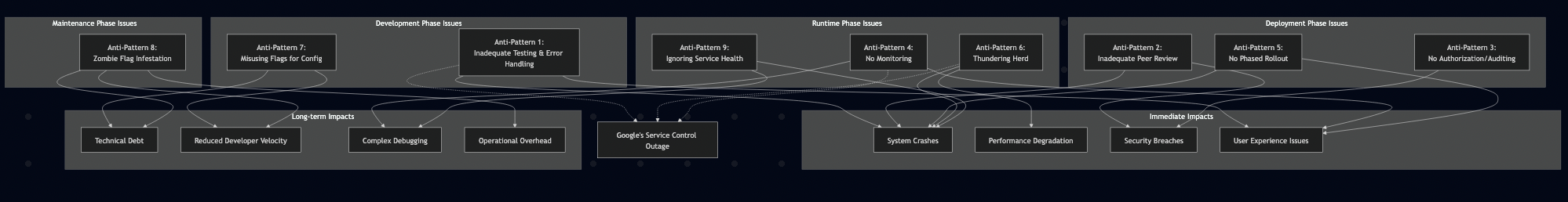

Feature flags are key components of modern infrastructure for shipping faster, testing in production, and reducing risk. However, they can also be a fast track to complex outages if not handled with discipline. Google’s recent major outage serves as a case study, and I’ve seen similar issues arise from missteps with feature flags.

The core of Google’s incident revolved around a new code path in their “Service Control” system that should have been protected with a feature flag but wasn’t. This path, designed for an additional quota policy check, went directly to production without flag protection. When a policy change with unintended blank fields was replicated globally within seconds, it triggered the untested code path, causing a null pointer error that crashed binaries globally. This incident illustrates why feature flags aren’t just nice-to-have: they’re essential guardrails that prevent exactly these kinds of global outages. Google also didn’t implement proper error handling, and the system didn’t use randomized exponential backoff, which resulted in a “thundering herd” effect that prolonged recovery.

Let’s dive into common anti-patterns I’ve observed and how we can avoid them:

Anti-Pattern 1: Inadequate Testing & Error Handling

This is perhaps the most common and dangerous anti-pattern. It involves deploying code behind a feature flag without comprehensively testing all states of that flag (on, off) and the various conditions that interact with the flagged feature. It also includes neglecting robust error handling within the flagged code itself and failing to default flags to “off” in production. For example, Google’s Service Control binary crashed due to a null pointer when a new policy was propagated globally. The code path had not been adequately tested with empty input, and it lacked proper error handling. I’ve seen similar issues where teams didn’t test the flag-protected code path in a test environment, so the problems only manifested in production. In other cases, the flag was accidentally left ON by default for production, leading to immediate issues upon deployment. The Google incident report also mentions that the problematic code “did not have appropriate error handling.” If the code within your feature flag assumes perfect conditions, it’s a ticking time bomb. These issues can be remedied by:

- Default Off in Production: Ensure all new feature flags are disabled by default in production.

- Comprehensive Testing: Test the feature with the flag ON and OFF. Crucially, test the specific conditions, data inputs, and configurations that trigger the new code paths enabled by the flag.

- Robust Error Handling: Implement proper error handling within the code controlled by the flag. It should fail gracefully or revert to a safe state if an unexpected issue occurs, not bring down the service.

- Consider Testing Costs: If testing all combinations becomes prohibitively expensive or complex, it might indicate the feature is too large for a single flag and should be broken down.
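The remedies above can be sketched in a few lines. This is a minimal illustration, not Google’s code: the flag name `new-quota-check`, the policy shape, and both function names are assumptions made up for the example. It shows a flag that defaults to OFF, a flagged path that treats blank input as an expected case, and a fallback to the legacy path instead of a crash.

```python
from typing import Mapping, Optional


def flag_enabled(flags: Mapping[str, bool], name: str, default: bool = False) -> bool:
    """Return the flag state, falling back to the default (OFF) when it is missing or malformed."""
    value = flags.get(name, default)
    return value if isinstance(value, bool) else default


def check_quota(policy: Optional[dict], flags: Mapping[str, bool]) -> str:
    """Run the new quota check only behind the flag, and never crash on bad input."""
    if not flag_enabled(flags, "new-quota-check"):  # hypothetical flag name
        return "legacy"
    try:
        # Blank or missing fields are expected inputs, not impossible ones.
        limit = policy["quota"]["limit"]
        if limit is None:
            raise ValueError("blank quota limit")
        return f"new:{int(limit)}"
    except (TypeError, KeyError, ValueError):
        # Fail safe: use the legacy path instead of taking the service down.
        return "legacy"
```

Tests for a function like this should cover the flag OFF, the flag ON with valid input, and the flag ON with empty or malformed input, since the last case is the one that tends to appear only in production.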

Anti-Pattern 2: Inadequate Peer Review

This anti-pattern manifests when feature flag changes occur without a proper review process. It’s like making direct database changes in production without a change request. For example, Google’s issue was a policy metadata change rather than a direct flag toggle, and that metadata replicated globally within seconds. It is analogous to flipping a critical global flag without due diligence. If that policy metadata change had been managed like a code change (e.g., via GitOps or Config-as-Code with a canary rollout), the issue might have been caught earlier. This can be remedied with:

- GitOps/Config-as-Code: Manage feature flag configurations as code within your Git repository. This enforces PRs, peer reviews, and provides an auditable history.

- Test Flag Rollback: As part of your process, ensure you can easily and reliably roll back a feature flag configuration change, just like you would with code.

- Detect Configuration Drift: Ensure that the actual state in production does not drift from what’s expected or version-controlled.
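As a rough sketch of what a config-as-code pipeline could check before a flag change merges, the snippet below validates a flag entry for blank fields and compares the version-controlled state against what production reports. The schema (`name`, `owner`, `enabled`) and both function names are assumptions for illustration, not part of any particular tool.

```python
from typing import Dict, List, Optional, Tuple

REQUIRED_FIELDS = ("name", "owner", "enabled")  # hypothetical schema


def validate_flag(entry: dict) -> List[str]:
    """Return a list of problems; an empty list means the entry is acceptable."""
    problems = []
    for field in REQUIRED_FIELDS:
        value = entry.get(field)
        if value is None or value == "":
            problems.append(f"missing or blank field: {field}")
    if entry.get("enabled") is not None and not isinstance(entry["enabled"], bool):
        problems.append("enabled must be true or false")
    return problems


def detect_drift(expected: Dict[str, bool],
                 actual: Dict[str, bool]) -> Dict[str, Tuple[Optional[bool], Optional[bool]]]:
    """Compare version-controlled flag states with what production reports."""
    drift = {}
    for name in sorted(set(expected) | set(actual)):
        if expected.get(name) != actual.get(name):
            drift[name] = (expected.get(name), actual.get(name))
    return drift
```

Running `validate_flag` in CI on every pull request, and `detect_drift` on a schedule, would cover the review and drift remedies; rollback then becomes a revert of the offending commit.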

Anti-Pattern 3: Inadequate Authorization and Auditing

This means not protecting the enabling and disabling of feature flags with proper permissions. Internally, if anyone can flip a production flag via a UI without a PR or a second pair of eyes, we’re exposed. Also, if there’s no clear record of who changed it, when, and why, incident response becomes a frantic scramble. Remedies include:

- Strict Access Control: Implement strong Role-Based Access Control (or Relationship-Based Access Control) to limit who can modify flag states or configurations in production.

- Comprehensive Auditing: Ensure your feature flagging system provides detailed audit logs for every change: who made the change, what was changed, and when.
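To make the two remedies concrete, here is a small sketch of a flag store that refuses changes from unauthorized roles and records every successful change. The role table, the `FlagStore` class, and its fields are hypothetical; a real system would delegate authorization to its identity provider and write the audit trail to durable storage.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List

# Hypothetical role table for illustration only.
ROLE_CAN_MODIFY_PROD = {"release-manager": True, "developer": False}


@dataclass
class FlagStore:
    flags: Dict[str, bool] = field(default_factory=dict)
    audit_log: List[dict] = field(default_factory=list)

    def set_flag(self, name: str, enabled: bool, actor: str, role: str, reason: str) -> None:
        """Change a production flag only for permitted roles, recording who, what, when, and why."""
        if not ROLE_CAN_MODIFY_PROD.get(role, False):
            raise PermissionError(f"{actor} ({role}) may not modify production flags")
        if not reason.strip():
            raise ValueError("a reason is required for every flag change")
        previous = self.flags.get(name)
        self.flags[name] = enabled
        self.audit_log.append({
            "flag": name, "old": previous, "new": enabled,
            "actor": actor, "reason": reason,
            "at": datetime.now(timezone.utc).isoformat(),
        })
```

Requiring a non-empty reason is a design choice in this sketch: it is cheap to enforce and gives incident responders the “why” alongside the “who” and “when”.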

Anti-Pattern 4: No Monitoring

Deploying a feature behind a flag and then flipping it on for everyone without closely monitoring its impact is like walking into a dark room and hoping you don’t trip. This can be remedied by actively monitoring feature flags and collecting metrics on your observability platform. This includes tracking not just the flag’s state (on/off) but also its real-time impact on key system metrics (error rates, latency, resource consumption) and relevant business KPIs.
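One simple way to tie a flag to its impact is to tag every request with the flag variant it was served under and compare the two populations. The sketch below does this in memory; the `FlagMetrics` class and the 1% threshold are assumptions for illustration, and in practice these counters would be emitted to whatever observability platform you already use.

```python
from collections import defaultdict


class FlagMetrics:
    """Count requests and errors per flag variant so the two paths can be compared."""

    def __init__(self) -> None:
        self.requests = defaultdict(int)
        self.errors = defaultdict(int)

    def record(self, flag: str, enabled: bool, error: bool) -> None:
        key = (flag, enabled)
        self.requests[key] += 1
        if error:
            self.errors[key] += 1

    def error_rate(self, flag: str, enabled: bool) -> float:
        key = (flag, enabled)
        total = self.requests[key]
        return self.errors[key] / total if total else 0.0

    def regressed(self, flag: str, max_increase: float = 0.01) -> bool:
        """True when the ON path's error rate exceeds the OFF path's by more than max_increase."""
        return self.error_rate(flag, True) - self.error_rate(flag, False) > max_increase
```

The same per-variant split applies to latency, resource consumption, and business KPIs; error rate is just the easiest one to show.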

Anti-Pattern 5: No Phased Rollout or Kill Switch

This means turning a new, complex feature on for 100% of users simultaneously with a flag. For example, during Google’s incident, major changes to quota management settings were propagated immediately, causing a global outage. The “red-button” to disable the problematic serving path was crucial for their recovery. Remedies for this anti-pattern include:

- Canary Releases & Phased Rollouts: Don’t enable features for everyone at once. Perform canary releases: enable for internal users, then a small percentage of production users while monitoring metrics.

- “Red Button” Control: Have a clear “kill switch” or “red button” mechanism for quickly and globally disabling any problematic feature flag if issues arise.
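A phased rollout with a kill switch can be sketched with a stable hash of the user ID. The function names and parameters below are assumptions for the example rather than any vendor’s API. Because each user always lands in the same bucket, raising the percentage only adds users and never reshuffles the ones already enabled.

```python
import hashlib


def bucket(flag: str, user_id: str) -> int:
    """Map a user to a stable bucket in [0, 100) for this flag."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def is_enabled(flag: str, user_id: str, rollout_percent: int,
               kill_switch: bool = False,
               internal_users: frozenset = frozenset()) -> bool:
    """Evaluate a flag: kill switch first, then internal users, then the percentage rollout."""
    if kill_switch:
        return False  # the "red button" overrides everything else
    if user_id in internal_users:
        return True   # internal users see the feature before anyone else
    return bucket(flag, user_id) < rollout_percent
```

Including the flag name in the hash input means different flags pick different user subsets, so the same small group is not always the first to receive every new feature.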

Anti-Pattern 6: Thundering Herd

Enabling a feature flag can potentially change traffic patterns for incoming requests. For example, Google didn’t implement randomized exponential backoff in Service Control, which caused a “thundering herd” on the underlying infrastructure. To prevent such issues, implement exponential backoff with jitter for request retries, combined with comprehensive monitoring.
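Here is a minimal sketch of exponential backoff with “full jitter”, where each retry waits a random time between zero and an exponentially growing cap. The base delay, cap, attempt count, and the choice to retry only on `ConnectionError` are assumptions for illustration and would need tuning for a real service.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def backoff_delay(attempt: int, base: float = 0.1, cap: float = 30.0, rng=random) -> float:
    """Full jitter: a random delay in [0, min(cap, base * 2**attempt)]."""
    return rng.uniform(0.0, min(cap, base * (2 ** attempt)))


def call_with_retries(operation: Callable[[], T], max_attempts: int = 5,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry a failing operation, sleeping a jittered, growing delay between attempts."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except ConnectionError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the failure to the caller
            sleep(backoff_delay(attempt))
    raise RuntimeError("max_attempts must be at least 1")
```

The randomization is what breaks up the herd: without it, every client that failed at the same moment would also retry at the same moment.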

Anti-Pattern 7: Misusing Flags for Config or Entitlements

This means using feature flags as a general-purpose configuration management system or to manage complex user entitlements (e.g., free vs. premium tiers). For example, I’ve seen teams use feature flags to store API endpoints, timeout values, or rules about which customer tier gets which sub-feature. As a result, your feature flag system becomes a de facto distributed configuration database. This can be remedied with:

- Purposeful Flags: Use feature flags primarily for controlling the lifecycle of discrete features: progressive rollout, A/B testing, kill switches.

- Dedicated Systems: Use proper configuration management tools for application settings and robust entitlement systems for user permissions and plans.

Anti-Pattern 8: The “Zombie Flag” Infestation

This means introducing feature flags but never removing them once a feature is fully rolled out or stable. I’ve seen codebases littered with if (isFeatureXEnabled) checks for features that have been live for years or were abandoned. This can be remedied with:

- Lifecycle Management: Treat flags as having a defined lifespan.

- Scheduled Cleanup: Regularly audit flags. Once a feature is 100% rolled out and stable (or definitively killed), schedule work to remove the flag and associated dead code.
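A scheduled audit can be as simple as listing flags that are fully on or fully off and have not changed in a while. The `FlagRecord` shape and the 90-day threshold below are assumptions for the sketch; the real inventory would come from your flagging system’s export or API.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List


@dataclass
class FlagRecord:
    name: str
    rollout_percent: int
    last_changed: date


def stale_flags(flags: List[FlagRecord], today: date, max_age_days: int = 90) -> List[str]:
    """Names of flags that are fully on or fully off and unchanged for longer than max_age_days."""
    cutoff = today - timedelta(days=max_age_days)
    return [f.name for f in flags
            if f.rollout_percent in (0, 100) and f.last_changed < cutoff]
```

Flags still in a partial rollout are deliberately excluded, since those are presumably doing their job; the output is a candidate list for cleanup tickets, not an automatic deletion.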

Anti-Pattern 9: Ignoring Flagging Service Health

This means not considering how your application behaves if the feature flagging service itself experiences an outage or is unreachable. A crucial point in Google’s RCA was that their “Cloud Service Health infrastructure being down due to this outage” delayed communication. A colleague once pointed out: what happens if LaunchDarkly is down? This can be remedied with:

- Safe Defaults in Code: When your code requests a flag’s state from the SDK (e.g., ldClient.variation("my-feature", user, **false**)), the provided default value is critical. For new or potentially risky features, this default must be the “safe” state (feature OFF).

- SDK Resilience: Feature-flag SDKs are designed to cache flag values and use them if the service is unreachable (stasis). But on a fresh app start before any cache is populated, your coded defaults are your safety net.
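The interplay between cached values and coded defaults can be sketched with a small wrapper. This is not the LaunchDarkly SDK or its API; `ResilientFlagClient` and its `fetch` callback are hypothetical stand-ins that only mimic the behavior described above: use the last known value when the service is unreachable, and fall back to the caller’s default when nothing has been cached yet.

```python
from typing import Callable, Dict


class ResilientFlagClient:
    """Wraps a flag lookup with a last-known-value cache and a caller-supplied safe default."""

    def __init__(self, fetch: Callable[[str], bool]) -> None:
        self._fetch = fetch  # hypothetical call to the remote flag service
        self._cache: Dict[str, bool] = {}

    def variation(self, name: str, default: bool) -> bool:
        try:
            value = self._fetch(name)
            self._cache[name] = value
            return value
        except Exception:
            # Service unreachable: prefer the last known value, else the coded default.
            return self._cache.get(name, default)
```

On a cold start with the service down, `variation("my-feature", False)` returns `False`, which is why the coded default for a risky feature should be OFF.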

Summary

Feature flags are incredibly valuable for modern software development. They empower teams to move faster and release with more confidence. But as the Google incident and my own experiences show, they require thoughtful implementation and ongoing discipline. By avoiding these anti-patterns – by testing thoroughly, using flags for their intended purpose, managing their lifecycle, governing changes, and planning for system failures – we can ensure feature flags remain a powerful asset.