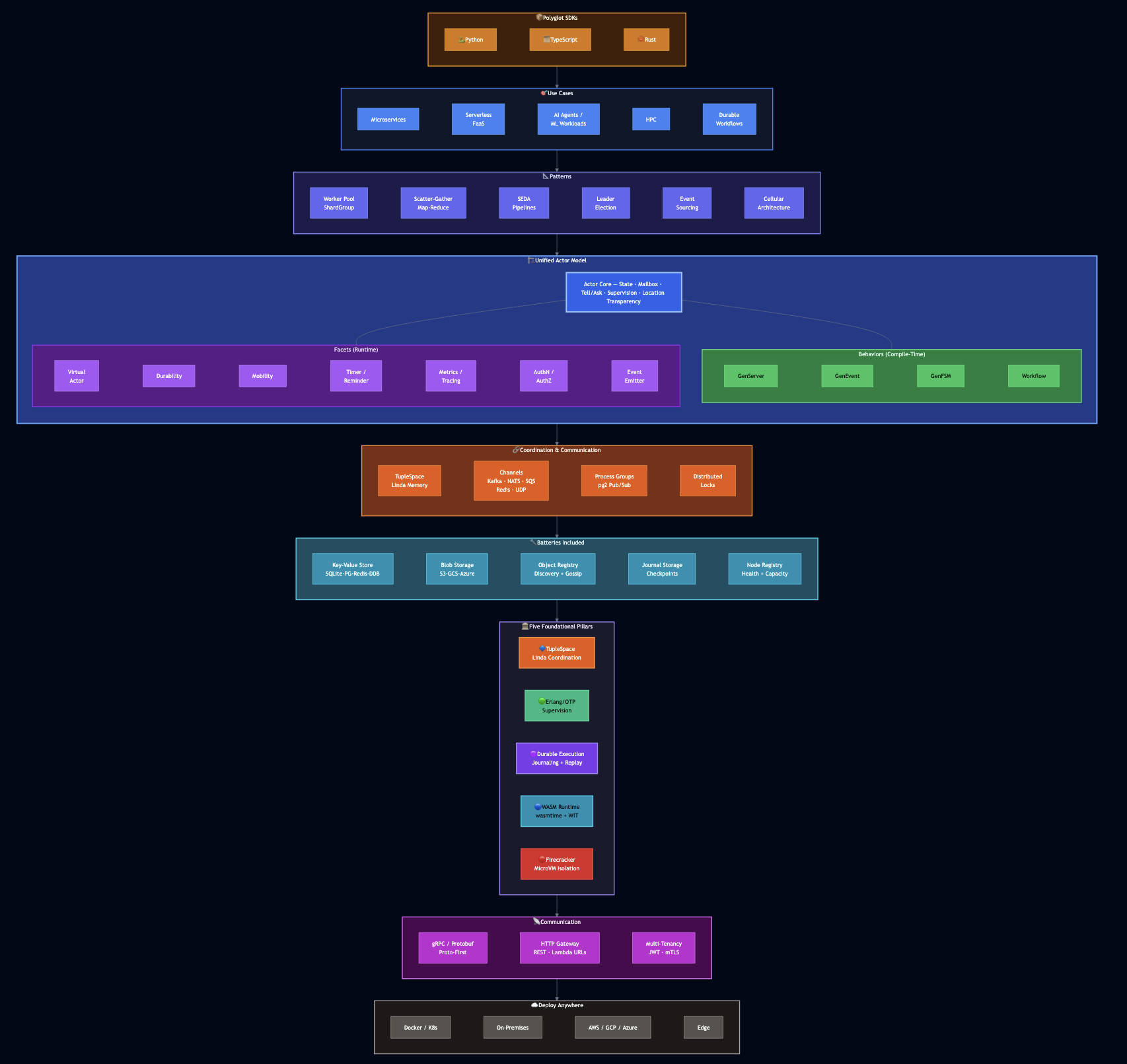

I previously shared my experience with distributed systems over the last three decades that included IBM mainframes, BSD sockets, Sun RPC, CORBA, Java RMI, SOAP, Erlang actors, service meshes, gRPC, serverless functions, etc. I kept solving the same problems in different languages, on different platforms, with different tooling. Each one of these frameworks taught me something essential but they also left something on the table. PlexSpaces pulls those lessons together into a single open-source framework: a polyglot application server that handles microservices, serverless functions, durable workflows, AI workloads, and high-performance computing using one unified actor abstraction. You write actors in Python, Rust, or TypeScript, compile them to WebAssembly, deploy them on-premises or in the cloud, and the framework handles persistence, fault tolerance, observability, and scaling. No service mesh. No vendor lock-in. Same binary on your laptop and in production.

Why Now?

Three things converged over the last few years that made this the right moment to build PlexSpaces:

- WebAssembly matured. WASI stabilized enough to run real server workloads. Java promised “Write Once, Run Anywhere” — WASM actually delivers it. Docker’s creator Solomon Hykes captured it in 2019: “If WASM+WASI existed in 2008, we wouldn’t have needed to create Docker.” Today that future has arrived.

- AI agents exploded. Every AI agent is fundamentally an actor: it maintains state (conversation history), processes messages (user queries), calls tools (side effects), and needs fault tolerance (LLM APIs fail). The actor model maps naturally to agent orchestration but existing frameworks either lack durability, lock you to one language, or require separate infrastructure.

- Multi-cloud pressure intensified. I’ve watched teams at multiple companies build on AWS in production but struggle to develop locally. Bugs surface only after deployment because Lambda, DynamoDB, and SQS behave differently from their local mocks. Modern enterprises need code that runs identically on a developer’s laptop, on-premises, and in any cloud.

PlexSpaces addresses all three: polyglot via WASM, actor-native for AI workloads, and local-first by design.

The Lessons That Shaped PlexSpaces

Every era of distributed computing burned a lesson into my thinking. Here’s what stuck and how I applied each lesson to PlexSpaces.

- Efficiency runs deep: When I programmed BSD sockets in C, I controlled every byte on the wire. That taught me to respect the transport layer.

Applied: PlexSpaces uses gRPC and Protocol Buffers for binary communication not because JSON is bad, but because high-throughput systems deserve binary protocols with proper schemas. - Contracts prevent chaos: Sun RPC introduced me to XDR and

rpcgenfor defining a contract, generate the code. CORBA reinforced this with IDL. I have seen countless times where teams sprinkle Swagger annotations on code and assumes that they have APIs, which keep growing without any standards or consistency.

Applied: PlexSpaces follows a proto-first philosophy – every API lives in Protocol Buffers, every contract generates typed stubs across languages. - Parallelism needs multiple primitives: During my PhD research, I built JavaNow – a parallel computing framework that combined Linda-style tuple spaces, MPI collective operations, and actor-based concurrency on networks of workstations. That research taught me something frameworks keep forgetting: different coordination problems need different primitives. You can’t force everything through message passing alone.

Applied: PlexSpaces provides actors and tuple spaces and channels and process groups — because real systems need all of them. - Developer experience decides adoption: Java RMI made remote objects feel local. JINI added service discovery. Then J2EE and EJB buried developer hearts under XML configuration.

Applied: PlexSpaces SDK provides decorator-based development (Python), inheritance-based development (TypeScript), and annotation-based development (Rust) to eliminate boilerplate. - Simplicity defeats complexity every time: With SOAP, WSDL, EJB, J2EE, I watched the Java enterprise ecosystem collapse under its own weight. REST won not because it was more powerful, but because it was simpler.

Applied: One actor abstraction with composable capabilities beats a zoo of specialized types. - Cross-cutting concerns belong in the platform: Spring and AOP taught me to handle observability, security, and throttling consistently. But microservices in polyglot environments broke that model. Service meshes like Istio and Dapr tried to fix it with sidecar proxies but it requires another networking hop, another layer of YAML to debug.

Applied: PlexSpaces bakes these concerns directly into the runtime. No service mesh. No extra hops. - Serverless is the right idea with the wrong execution: AWS Lambda showed me the future: auto-scaling, built-in observability, zero server management. But Lambda also showed me the problem: vendor lock-in, cold starts, and the inability to run locally.

Applied: PlexSpaces delivers serverless semantics that run identically on your laptop and in the cloud. - Application servers got one thing right: Despite all the complexity of J2EE, I loved one idea: the application server that hosts multiple applications. You deployed WAR files to Tomcat, and it handled routing, lifecycle, and shared services. That model survived even after EJB died.

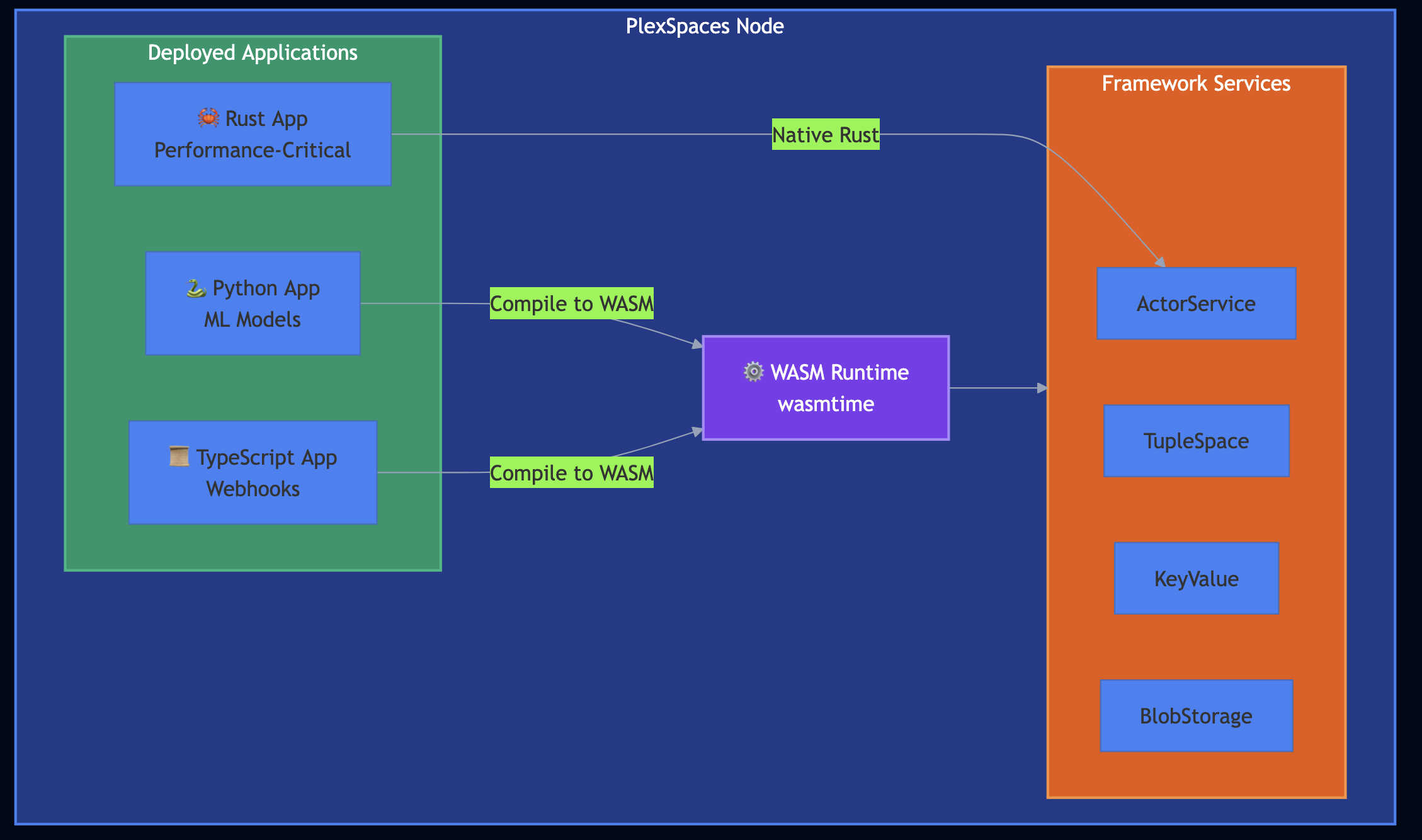

Applied: PlexSpaces revives this concept for the polyglot serverless era where you can deploy Python ML models, TypeScript webhooks, and Rust performance-critical code to the same node.

I also built formicary, a framework for durable executions with graph-based workflow processing. That experience directly shaped PlexSpaces’ workflow and durability abstractions.

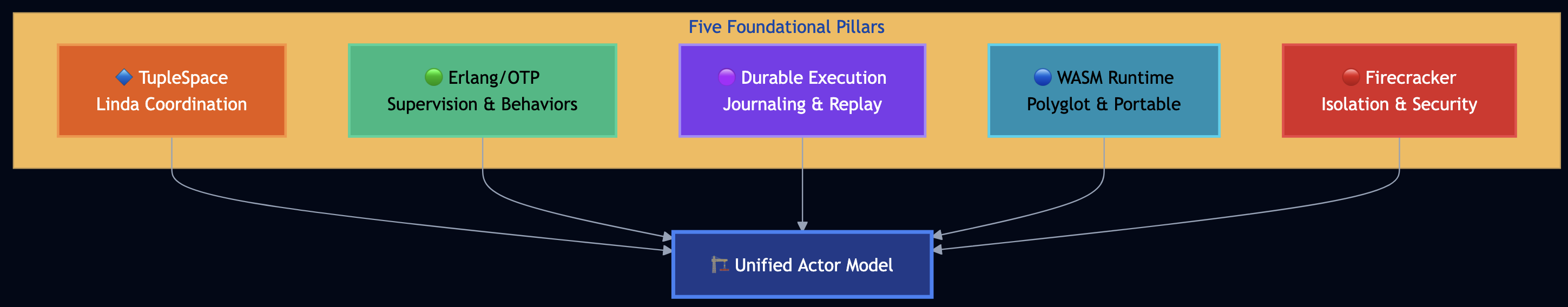

What PlexSpaces Actually Does

PlexSpaces combines five foundational pillars into a unified distributed computing platform:

- TupleSpace Coordination (Linda Model): Decouples producers and consumers through associative memory. Actors write tuples, read them by pattern, and never need to know who’s on the other side.

- Erlang/OTP Philosophy: Supervision trees restart failed actors. Behaviors define message-handling patterns.

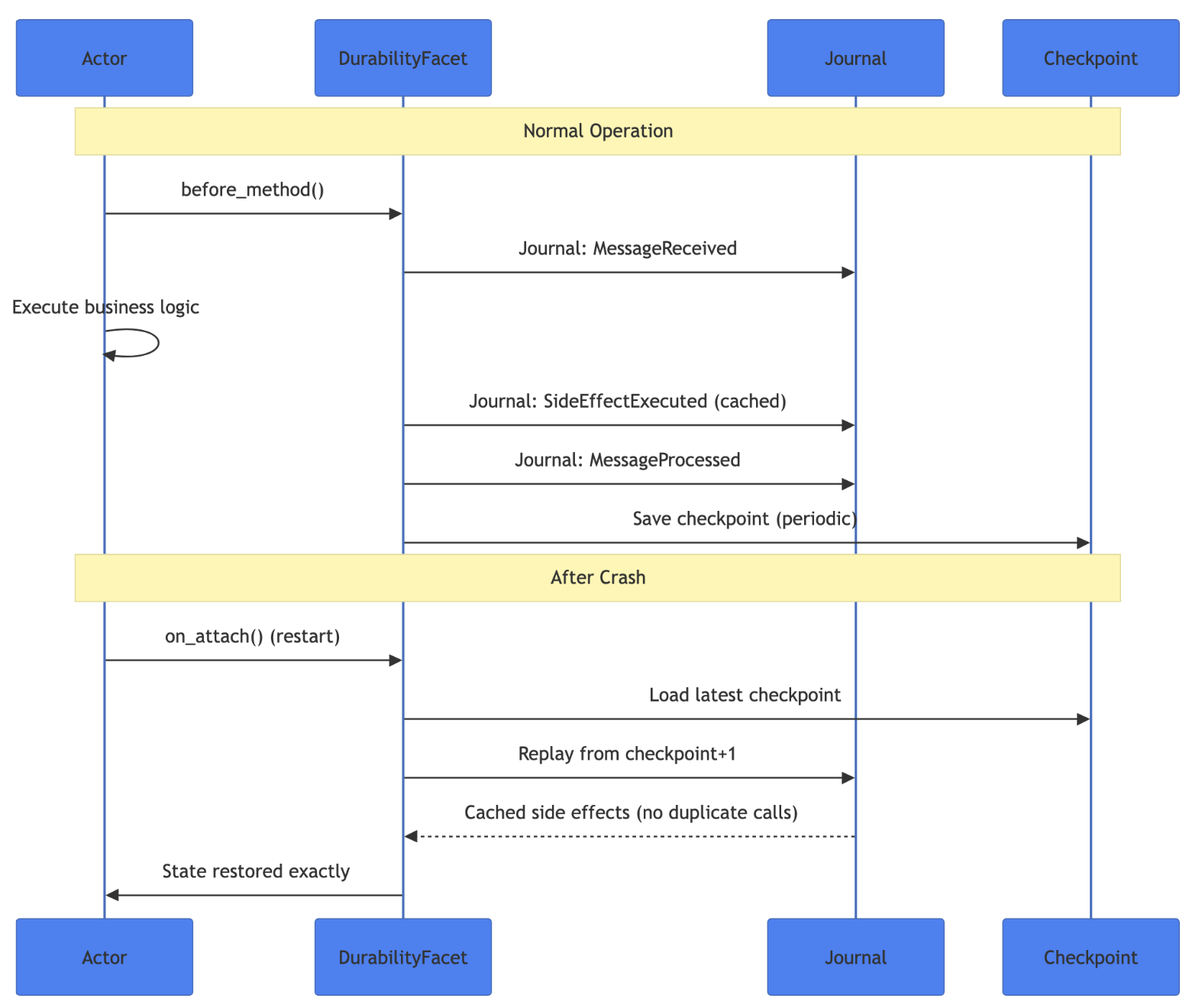

- Durable Execution: Every actor operation gets journaled. When a node crashes, the framework replays the journal and restores state exactly. Side effects get cached during replay, so external calls don’t fire twice. Inspired by Restate and my earlier work on formicary.

- WASM Runtime: Actors compile to WebAssembly and run in a sandboxed environment. Python, TypeScript, and Rust actors share the same deployment model and the same security guarantees.

- Firecracker Isolation: For workloads that need hardware-level isolation, PlexSpaces supports Firecracker microVMs alongside WASM sandboxing.

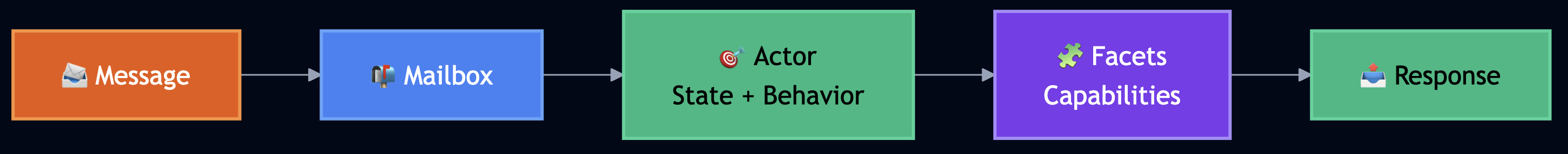

Core Abstractions: Actors, Behaviors, and Facets

One Actor to Rule Them All

PlexSpaces follows a design principle I arrived at after years of watching frameworks proliferate actor types: one powerful abstraction with composable capabilities beats multiple specialized types. Every actor in PlexSpaces maintains private state, processes messages sequentially (eliminating race conditions), operates transparently across local and remote boundaries, and recovers automatically through supervision.

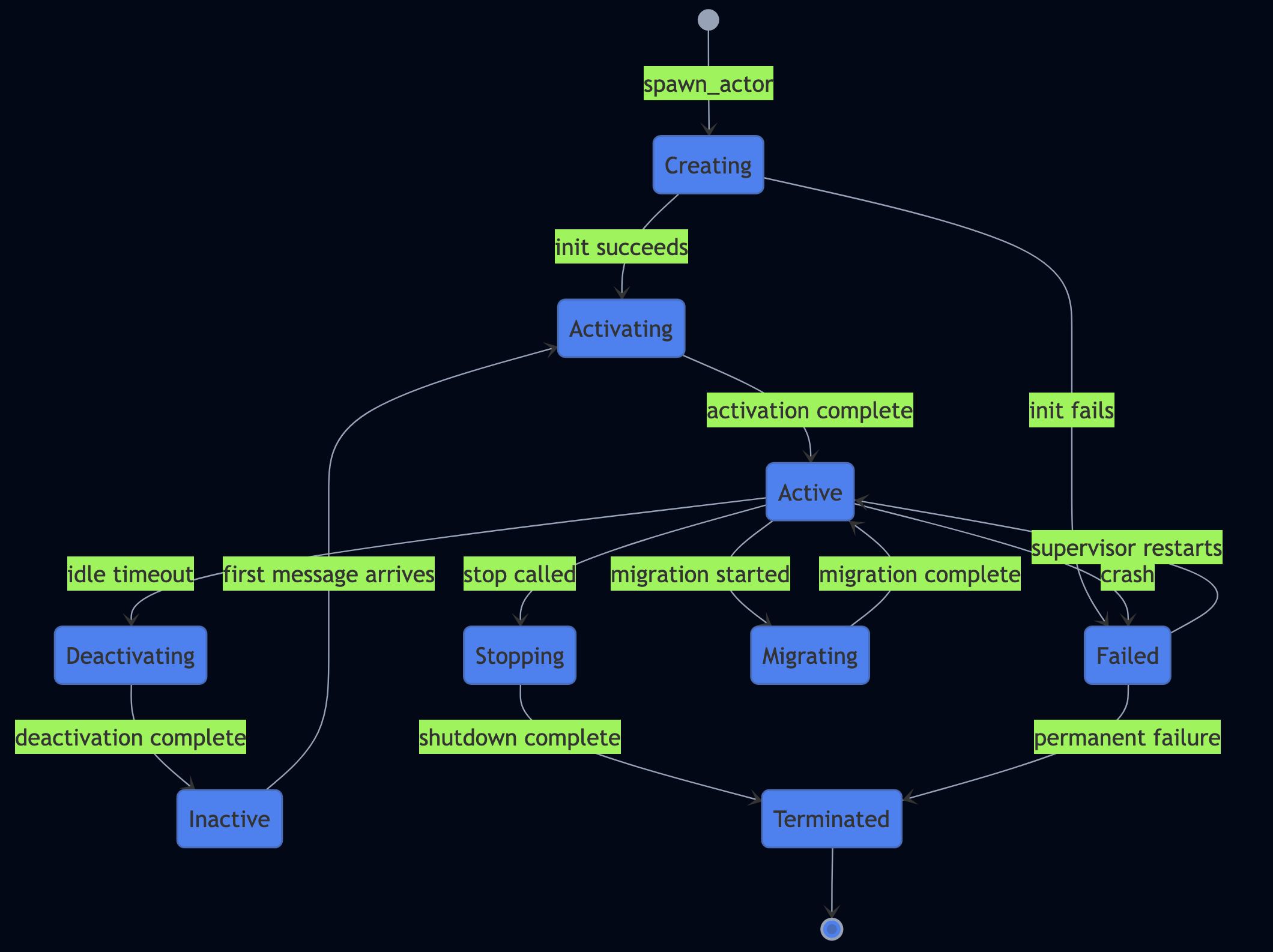

Actor Lifecycle

Actors move through a well-defined lifecycle, one of the details that distinguishes PlexSpaces from simpler actor frameworks.

Virtual actors (enabled by VirtualActorFacet, inspired by the Orleans actor model) use this lifecycle automatically: they activate on the first message, deactivate after an idle timeout, and reactivate transparently on the next message. No manual lifecycle management.
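To make the activate/deactivate/reactivate cycle concrete, here is a minimal single-process sketch. It is an illustration only: `VirtualActorHost`, `Echo`, and the injected clock are hypothetical names, not part of the PlexSpaces SDK, and a real runtime would also persist actor state before deactivation.

```python
import time
from typing import Callable, Dict, List


class VirtualActorHost:
    """Toy host (hypothetical): activates actors on demand, evicts idle ones."""

    def __init__(self, factory: Callable[[str], object], idle_timeout_s: float,
                 clock: Callable[[], float] = time.monotonic):
        self._factory = factory
        self._idle_timeout_s = idle_timeout_s
        self._clock = clock
        self._active: Dict[str, object] = {}
        self._last_used: Dict[str, float] = {}
        self.activations = 0

    def send(self, actor_id: str, message: str):
        # Activate on first message (or reactivate after eviction).
        if actor_id not in self._active:
            self._active[actor_id] = self._factory(actor_id)
            self.activations += 1
        self._last_used[actor_id] = self._clock()
        return self._active[actor_id].handle(message)

    def evict_idle(self) -> List[str]:
        # Deactivate every actor that has been idle past the timeout.
        now = self._clock()
        idle = [a for a, t in self._last_used.items()
                if now - t >= self._idle_timeout_s]
        for actor_id in idle:
            del self._active[actor_id]
            del self._last_used[actor_id]
        return idle


class Echo:
    def __init__(self, actor_id: str):
        self.actor_id = actor_id

    def handle(self, message: str) -> str:
        return f"{self.actor_id}:{message}"


# A fake clock keeps the timeline deterministic.
now = [0.0]
host = VirtualActorHost(Echo, idle_timeout_s=30.0, clock=lambda: now[0])
first = host.send("user-1", "hi")       # activates user-1
now[0] = 31.0
evicted = host.evict_idle()             # user-1 has been idle for 31s
second = host.send("user-1", "again")   # reactivated transparently
```

The caller never sees the eviction: it addresses the actor by ID and the host decides whether an instance has to be created first.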

Tell vs Ask: Two Message Patterns

PlexSpaces supports two fundamental communication patterns:

- Tell (fire-and-forget): The sender dispatches a message and moves on. Use this for events, notifications, and one-way commands.

- Ask (request-reply): The sender dispatches a request and waits for a response with a timeout. Use this for queries and operations that need confirmation.

```python
from plexspaces import actor, handler, host

@actor
class OrderService:
    @handler("place_order")
    def place_order(self, order: dict) -> dict:
        # Tell: fire-and-forget notification to analytics
        host.tell("analytics-actor", "order_placed", order)

        # Ask: request-reply to inventory service (5s timeout)
        inventory = host.ask("inventory-actor", "check_stock",
                             {"sku": order["sku"]}, timeout_ms=5000)
        if inventory["available"]:
            return {"status": "confirmed", "order_id": order["id"]}
        return {"status": "out_of_stock"}
```

Behaviors: Compile-Time Patterns

Behaviors define how an actor processes messages. You choose a behavior at compile time:

| Behavior | Annotation | Pattern | Best For |
|---|---|---|---|
| GenServer | @actor | Request-reply | Stateful services, CRUD |
| GenEvent | @event_actor | Fire-and-forget | Event processing, logging |
| GenFSM | @fsm_actor | State machine | Order processing, approval flows |
| Workflow | @workflow_actor | Durable orchestration | Long-running processes |

Facets: Runtime Capabilities

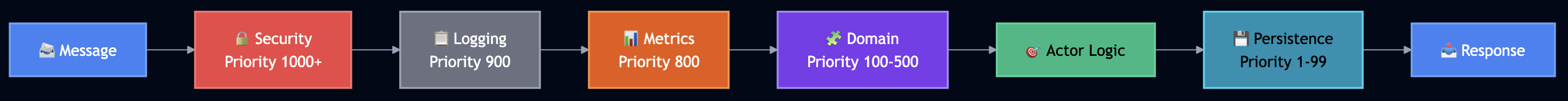

Facets attach dynamic capabilities to actors without changing the actor type. I wrote previously about the pattern of dynamic facets and runtime composition. PlexSpaces combines that dynamic composition with Erlang’s static behavior model. Think of facets as middleware that wraps your actor. They execute in priority order: security facets fire first, then logging, then metrics, then your business logic, then persistence.

Available facets include:

- Infrastructure: VirtualActorFacet (Orleans-style auto-activation), DurabilityFacet (persistence + replay), MobilityFacet (actor migration)

- Storage: KeyValueFacet, BlobStorageFacet, LockFacet

- Communication: ProcessGroupFacet (Erlang pg2-style groups), RegistryFacet

- Scheduling: TimerFacet (transient), ReminderFacet (durable)

- Observability: MetricsFacet, TracingFacet, LoggingFacet

- Security: AuthenticationFacet, AuthorizationFacet

- Events: EventEmitterFacet (reactive patterns)

Facets compose freely, e.g., add facets=["durability", "timer", "metrics"] and your actor gains persistence, scheduled execution, and Prometheus metrics with zero additional code.
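The middleware analogy can be sketched in a few lines of plain Python. This is not the PlexSpaces implementation: the `Facet` class, the `invoke` helper, and the convention that a lower priority number runs earlier are all assumptions made for illustration.

```python
from typing import Callable, List


class Facet:
    """Hypothetical facet: a name, a priority, and before/after hooks."""

    def __init__(self, name: str, priority: int, log: List[str]):
        self.name, self.priority, self._log = name, priority, log

    def before(self, method: str) -> None:
        self._log.append(f"before:{self.name}")

    def after(self, method: str) -> None:
        self._log.append(f"after:{self.name}")


def invoke(facets: List[Facet], method: str, handler: Callable[[], dict]) -> dict:
    # Assumption: lower priority number runs first on the way in
    # and last on the way out, like nested middleware.
    ordered = sorted(facets, key=lambda f: f.priority)
    for facet in ordered:
        facet.before(method)
    result = handler()
    for facet in reversed(ordered):
        facet.after(method)
    return result


log: List[str] = []
facets = [Facet("metrics", 300, log), Facet("security", 100, log),
          Facet("logging", 200, log)]
result = invoke(facets, "increment", lambda: {"count": 1})
```

Registration order does not matter here; only the priority decides where a facet sits in the chain, which is what lets capabilities compose without knowing about each other.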

Custom Facets: Extending the Framework

The facet system is open for extension. You can build domain-specific facets and register them with the framework:

```rust
use async_trait::async_trait;
use plexspaces_core::{Facet, FacetError, InterceptResult};

pub struct FraudDetectionFacet {
    threshold: f64,
}

#[async_trait]
impl Facet for FraudDetectionFacet {
    fn name(&self) -> &str { "fraud_detection" }

    fn priority(&self) -> u32 { 200 } // Run after security, before domain logic

    async fn before_method(
        &mut self, method: &str, payload: &[u8]
    ) -> Result<InterceptResult, FacetError> {
        // score_transaction is a helper on this facet (not shown here)
        let score = self.score_transaction(payload).await?;
        if score > self.threshold {
            // Reject the call before it reaches the actor
            return Err(FacetError::Custom("fraud_detected".into()));
        }
        Ok(InterceptResult::Continue)
    }
}
```

Register it once, attach it to any actor by name. This extensibility distinguishes PlexSpaces from frameworks with fixed capability sets.

Hands-On: Building Actors in Three Languages

Let me show you how PlexSpaces works in practice across all three SDKs.

Python: Decorator-Based Development

```python
from plexspaces import actor, state, handler

@actor
class CounterActor:
    count: int = state(default=0)

    @handler("increment")
    def increment(self, amount: int = 1) -> dict:
        self.count += amount
        return {"count": self.count}  # => {"count": 5}

    @handler("get")
    def get(self) -> dict:
        return {"count": self.count}  # => {"count": 5}
```

The SDK eliminates over 100 lines of WASM boilerplate. You declare state with state(), mark handlers with @handler, and return dictionaries. The framework handles serialization, lifecycle, and state management.

TypeScript: Inheritance-Based Development

```typescript
import { PlexSpacesActor } from "@plexspaces/sdk";

interface CounterState { count: number; }

export class CounterActor extends PlexSpacesActor<CounterState> {
  getDefaultState(): CounterState { return { count: 0 }; }

  onIncrement(payload: Record<string, unknown>) {
    const amount = Number(payload.amount ?? 1);
    this.state.count += amount;
    return { count: this.state.count }; // => {"count": 5}
  }

  onGet() { return { count: this.state.count }; }
}
```

Rust: Annotation-Based Development

```rust
use plexspaces_sdk::{gen_server_actor, plexspaces_handlers, handler, json};

#[gen_server_actor]
struct Counter { count: i32 }

#[plexspaces_handlers]
impl Counter {
    #[handler("increment")]
    async fn increment(&mut self, _ctx: &ActorContext, msg: &Message)
        -> Result<serde_json::Value, BehaviorError> {
        let payload: serde_json::Value = serde_json::from_slice(&msg.payload)?;
        self.count += payload["amount"].as_i64().unwrap_or(1) as i32;
        Ok(json!({ "count": self.count })) // => {"count": 5}
    }
}
```

Building, Deploying, and Invoking

```bash
# Build Python actor to WebAssembly
plexspaces-py build counter_actor.py -o counter.wasm

# Deploy to a running node
curl -X POST http://localhost:8094/api/v1/deploy \
  -F "namespace=default" \
  -F "actor_type=counter" \
  -F "wasm=@counter.wasm"

# Invoke via HTTP, FaaS-style (POST = tell, GET = ask)
curl -X POST "http://localhost:8080/api/v1/actors/default/default/counter" \
  -H "Content-Type: application/json" \
  -d '{"action":"increment","amount":5}'

# Request-reply on POST (use invocation=call)
curl -X POST "http://localhost:8080/api/v1/actors/default/default/counter?invocation=call" \
  -H "Content-Type: application/json" \
  -d '{"action":"get"}'
# => {"count": 5}
```

That’s it. No Kubernetes manifests. No Terraform. No sidecar containers. Deploy a WASM module, invoke it over HTTP. The same endpoint works as an AWS Lambda Function URL.

Durable Execution: Crash and Recover Without Losing State

Durable execution solves a problem I’ve encountered at every company I’ve worked for: what happens when a node crashes mid-operation?

PlexSpaces journals every actor operation: messages received, side effects executed, and state changes applied. When a node crashes and restarts, the framework loads the latest checkpoint and replays journal entries from that point. Side effects return cached results during replay, so external API calls don’t fire twice.
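The replay rule for side effects is the part people find least intuitive, so here is a stripped-down sketch of it. It assumes a journal keyed purely by call order and leaves out checkpoints entirely; `Journal`, `DurableContext`, and `side_effect` are hypothetical names, not the PlexSpaces API.

```python
from typing import Any, Callable, Dict, List


class Journal:
    """Append-only record of side-effect results, in call order."""

    def __init__(self) -> None:
        self.entries: List[Any] = []


class DurableContext:
    """Hypothetical context handed to a handler for one execution attempt."""

    def __init__(self, journal: Journal) -> None:
        self._journal = journal
        self._cursor = 0

    def side_effect(self, fn: Callable[[], Any]) -> Any:
        if self._cursor < len(self._journal.entries):
            # Replay: return the recorded result, do not call fn again.
            result = self._journal.entries[self._cursor]
        else:
            # Live run: execute and record the result.
            result = fn()
            self._journal.entries.append(result)
        self._cursor += 1
        return result


calls = {"charge": 0}


def charge_card() -> str:
    calls["charge"] += 1
    return f"receipt-{calls['charge']}"


def handle_order(ctx: DurableContext) -> Dict[str, str]:
    receipt = ctx.side_effect(charge_card)
    return {"status": "paid", "receipt": receipt}


journal = Journal()
first = handle_order(DurableContext(journal))     # live run, charges the card
replayed = handle_order(DurableContext(journal))  # replay after a "crash"
```

The handler code is identical in both runs; only the context decides whether `charge_card` actually executes. That only works if the handler is deterministic between side effects, which is the usual constraint of journal-based durability.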

Example: A Durable Bank Account

```python
from plexspaces import actor, state, handler

@actor(facets=["durability"])
class BankAccount:
    balance: int = state(default=0)
    transactions: list = state(default_factory=list)

    @handler("deposit")
    def deposit(self, amount: int = 0) -> dict:
        self.balance += amount
        self.transactions.append({
            "type": "deposit", "amount": amount,
            "balance_after": self.balance
        })
        return {"status": "ok", "balance": self.balance}

    @handler("withdraw")
    def withdraw(self, amount: int = 0) -> dict:
        if amount > self.balance:
            return {"status": "insufficient_funds", "balance": self.balance}
        self.balance -= amount
        self.transactions.append({
            "type": "withdraw", "amount": amount,
            "balance_after": self.balance
        })
        return {"status": "ok", "balance": self.balance}

    @handler("replay")
    def replay_transactions(self) -> dict:
        """Rebuild balance from transaction log to verify consistency."""
        rebuilt = 0
        for tx in self.transactions:
            rebuilt += tx["amount"] if tx["type"] == "deposit" else -tx["amount"]
        return {
            "replayed": len(self.transactions),
            "rebuilt_balance": rebuilt,
            "current_balance": self.balance,
            "consistent": rebuilt == self.balance
        }
```

Adding facets=["durability"] activates journaling and checkpointing. If the node crashes after processing ten deposits, the framework restores all ten, so there is no data loss and no duplicate charges. Periodic checkpoints accelerate recovery by 90%+ because the framework loads the latest snapshot and replays only recent entries.

Data-Parallel Actors: Worker Pools and Scatter-Gather

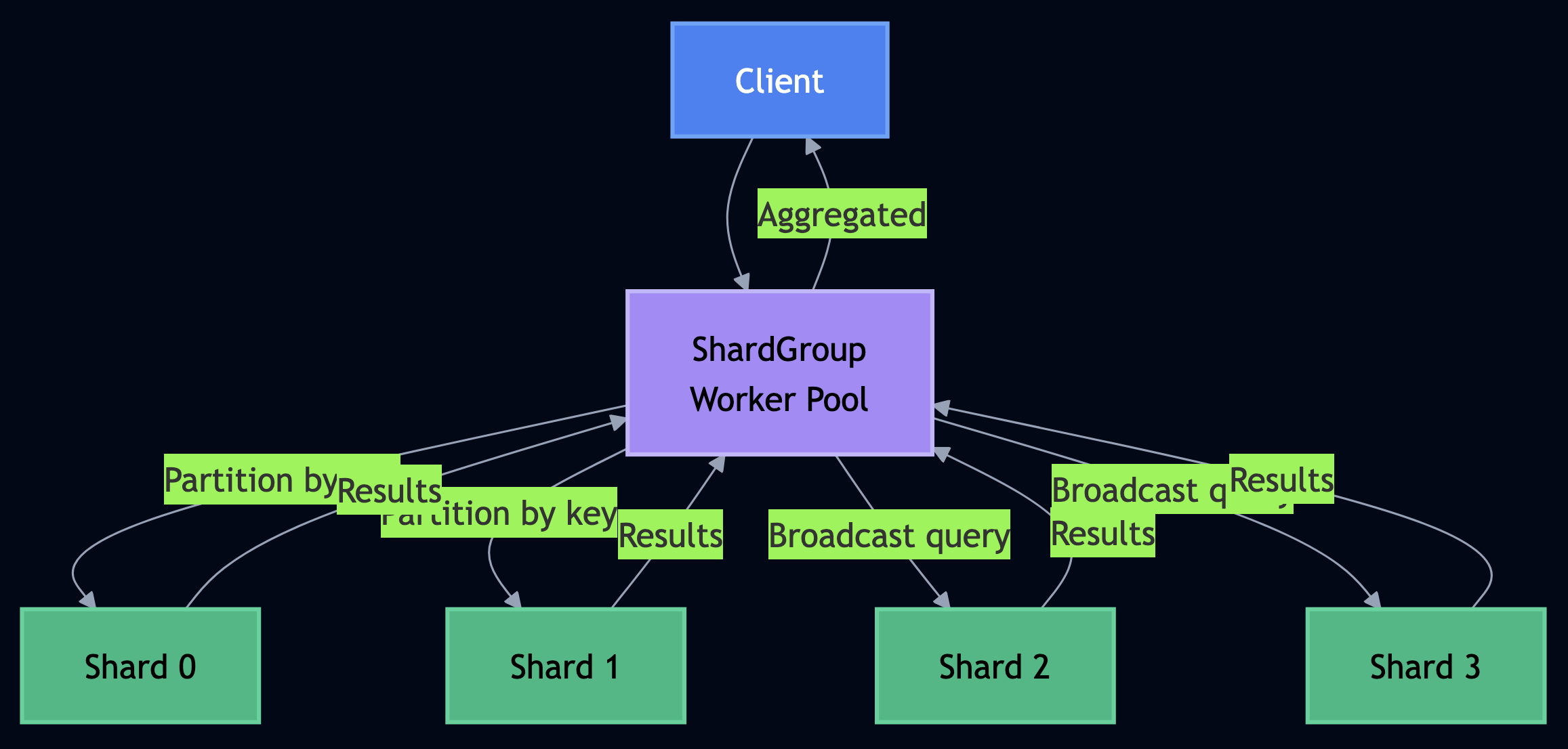

When I built JavaNow during my PhD, I implemented MPI-style scatter-gather and parallel map operations. PlexSpaces brings these patterns to production through ShardGroups: data-parallel actor pools inspired by the DPA paper. A ShardGroup partitions data across multiple actor shards and supports three core operations:

- Bulk Update: Routes writes to the correct shard based on a partition key (hash, consistent hash, or range)

- Parallel Map: Queries all shards simultaneously and collects results

- Scatter-Gather: Broadcasts a query and aggregates responses with fault tolerance

Example: Data-Parallel Worker Pool with Scatter-Gather

This pattern comes from the PlexSpaces examples. Each worker actor in the ShardGroup holds a partition of state and processes tasks independently, while the framework handles routing, fan-out, and aggregation:

```rust
#[gen_server_actor]
pub struct WorkerActor {
    worker_id: String,
    state: Arc<RwLock<HashMap<String, Value>>>,
    tasks_processed: u64,
    total_processing_time_ms: u64,
}

#[plexspaces_handlers]
impl WorkerActor {
    #[handler("*")]
    async fn process(&mut self, _ctx: &ActorContext, msg: &Message)
        -> Result<Value, BehaviorError> {
        let payload: Value = serde_json::from_slice(&msg.payload)?;
        match payload["action"].as_str().unwrap_or("unknown") {
            "set" => {
                let key = payload["key"].as_str().unwrap_or("default");
                self.state.write().await.insert(key.to_string(), payload["value"].clone());
                self.tasks_processed += 1;
                Ok(json!({ "action": "set", "key": key, "worker_id": self.worker_id }))
            }
            "get_total_count" => {
                let state = self.state.read().await;
                let total: u64 = state.values().filter_map(|v| v.as_u64()).sum();
                Ok(json!({
                    "total": total, "worker_id": self.worker_id,
                    "keys_processed": state.len()
                }))
            }
            "stats" => {
                let avg_time = if self.tasks_processed > 0 {
                    self.total_processing_time_ms / self.tasks_processed
                } else { 0 };
                Ok(json!({
                    "worker_id": self.worker_id,
                    "tasks_processed": self.tasks_processed,
                    "avg_processing_time_ms": avg_time,
                    "keys_in_state": self.state.read().await.len()
                }))
            }
            _ => Err(BehaviorError::ProcessingError("Unknown action".to_string()))
        }
    }
}
```

The #[handler("*")] wildcard routes all messages to a single dispatch method — the worker decides what to do based on the action field. Each worker tracks its own processing statistics, so you can identify hot shards or slow workers.

The orchestration code runs all three data-parallel operations in sequence (bulk update, parallel map, and parallel reduce):

```rust
// Create a pool of 20 workers with hash-based partitioning
let pool_id = client.create_worker_pool(
    "worker-pool-1", "worker", 20,
    PartitionStrategy::PartitionStrategyHash,
    HashMap::new(),
).await?;

// Bulk update: route 10,000 messages to the right shard by key
let mut updates = HashMap::new();
for i in 0..10_000 {
    let key = format!("key-{:05}", i);
    updates.insert(key.clone(), json!({ "action": "set", "key": key, "value": i }));
}
client.parallel_update(&pool_id, updates,
    ConsistencyLevel::ConsistencyLevelEventual, false).await?;

// Parallel map: query every worker simultaneously
let results = client.parallel_map(&pool_id,
    json!({ "action": "get_total_count" })).await?;
// => 20 responses, one per worker, each with its partition's total

// Parallel reduce: aggregate stats across all workers
let stats = client.parallel_reduce(&pool_id,
    json!({ "action": "stats" }),
    ShardGroupAggregationStrategy::ShardGroupAggregationConcat, 20).await?;
// => Combined stats: tasks_processed, avg_processing_time_ms per worker
```

parallel_update routes each key to its shard using the pool's partition strategy (hash-based in this example): 10,000 messages fan out across 20 workers without the caller managing any routing logic. parallel_map broadcasts a query to every shard and collects results. parallel_reduce does the same but aggregates the responses using a configurable strategy (concat, sum, merge). This maps directly to distributed ML (partition model parameters across shards, push gradient updates through parallel_update, collect the full parameter set via parallel_map) or any workload that benefits from partitioned state with scatter-gather queries.
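For readers who want to see the routing and fan-out without the framework, here is a small in-process sketch. It assumes simple modulo hashing over a SHA-256 digest and uses a thread pool in place of remote shards; `shard_for`, `Shard`, and the two helper functions are hypothetical and only mirror the shape of the operations described above.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def shard_for(key: str, shard_count: int) -> int:
    # Use a stable hash: Python's built-in hash() is salted per process.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


class Shard:
    def __init__(self) -> None:
        self.data: Dict[str, int] = {}

    def total(self) -> int:
        return sum(self.data.values())


def parallel_update(shards: List[Shard], updates: Dict[str, int]) -> None:
    # Bulk update: each key lands on exactly one shard.
    for key, value in updates.items():
        shards[shard_for(key, len(shards))].data[key] = value


def parallel_map(shards: List[Shard]) -> List[int]:
    # Scatter the query to every shard, gather results in shard order.
    with ThreadPoolExecutor(max_workers=len(shards)) as pool:
        return list(pool.map(lambda shard: shard.total(), shards))


shards = [Shard() for _ in range(20)]
parallel_update(shards, {f"key-{i:05d}": i for i in range(10_000)})
per_shard = parallel_map(shards)
grand_total = sum(per_shard)  # reduce step with a "sum" strategy
```

Modulo hashing is the simplest choice but reshuffles most keys when the shard count changes; consistent hashing or range partitioning trade a little complexity for cheaper resizing.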

TupleSpace: Linda’s Associative Memory for Coordination

During my PhD work on JavaNow, I was blown away by the simplicity of Linda’s tuple space model for writing dataflow applications that coordinate many actors. Whereas actors communicate through direct message passing, tuple spaces provide associative shared memory: producers write tuples, and consumers read or take them with blocking or non-blocking patterns. This decouples components in three dimensions: spatial (actors don’t need references to each other), temporal (producers and consumers don’t need to run simultaneously), and pattern-based (consumers retrieve data by structure, not by address).

```python
from plexspaces import actor, handler, host
import json

@actor
class OrderProducer:
    @handler("create_order")
    def create_order(self, order_id: str, items: list) -> dict:
        # Write a tuple; any consumer can pick it up
        host.ts_write(json.dumps(["order", order_id, "pending", items]))
        return {"status": "created", "order_id": order_id}

@actor
class OrderProcessor:
    @handler("process_next")
    def process_next(self) -> dict:
        # Take the next pending order (destructive read: removes it from the space)
        pattern = json.dumps(["order", None, "pending", None])  # Wildcards
        result = host.ts_take(pattern)
        if result:
            data = json.loads(result)
            order_id = data[1]
            # Process order, then write completion tuple
            host.ts_write(json.dumps(["order", order_id, "completed", data[3]]))
            return {"processed": order_id}
        return {"status": "no_pending_orders"}
```

I use TupleSpace heavily for dataflow pipelines: each stage writes results as tuples, and downstream stages pick them up by pattern. Stages can run at different speeds, on different nodes, in different languages. The tuple space absorbs the mismatch.
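If you have never used a tuple space, the matching rule is the whole trick. The sketch below is a minimal in-memory version with non-blocking `read` and `take`, treating `None` as a wildcard as in the example above. The `TupleSpace` class is hypothetical and says nothing about how PlexSpaces stores or distributes tuples.

```python
from typing import Any, List, Optional, Tuple

Pattern = Tuple[Any, ...]


class TupleSpace:
    """Minimal in-memory tuple space; None in a pattern is a wildcard."""

    def __init__(self) -> None:
        self._tuples: List[Tuple[Any, ...]] = []

    @staticmethod
    def _matches(pattern: Pattern, candidate: Tuple[Any, ...]) -> bool:
        # Same arity, and every non-wildcard field must be equal.
        return len(pattern) == len(candidate) and all(
            p is None or p == c for p, c in zip(pattern, candidate))

    def write(self, item: Tuple[Any, ...]) -> None:
        self._tuples.append(item)

    def read(self, pattern: Pattern) -> Optional[Tuple[Any, ...]]:
        # Non-destructive: the tuple stays in the space.
        return next((t for t in self._tuples if self._matches(pattern, t)), None)

    def take(self, pattern: Pattern) -> Optional[Tuple[Any, ...]]:
        # Destructive: the first match is removed and returned.
        for index, candidate in enumerate(self._tuples):
            if self._matches(pattern, candidate):
                return self._tuples.pop(index)
        return None


space = TupleSpace()
space.write(("order", "o-1", "pending", ("book",)))
space.write(("order", "o-2", "completed", ("pen",)))
peeked = space.read(("order", None, "pending", None))
taken = space.take(("order", None, "pending", None))
missing = space.take(("order", None, "pending", None))
```

Because `take` removes the tuple, two competing consumers can never process the same pending order, which is what makes the pattern usable as a work queue.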

Batteries Included: Everything You Need, Built In

At every company I’ve worked at, the first three months after adopting a framework go to integrating storage, messaging, and locks. PlexSpaces ships all of these as built-in services in the same codebase, no extra infrastructure, no service mesh.

What’s in the Box

| Service | Backends | What It Does |
|---|---|---|
| Key-Value Store | SQLite, PostgreSQL, Redis, DynamoDB | Distributed KV storage with TTL |
| Blob Storage | MinIO/S3, GCS, Azure Blob | Large object storage with presigned URLs |
| Distributed Locks | SQLite, PostgreSQL, Redis, DynamoDB | Lease-based mutual exclusion |
| Process Groups | Built-in | Erlang pg2-style group messaging and pub/sub |
| Channels | InMemory, Redis, Kafka, NATS, SQS, SQLite, UDP | Queue and topic messaging |
| Object Registry | SQLite, PostgreSQL, DynamoDB | Service discovery with TTL + gossip |
| Observability | Built-in | Metrics (Prometheus), tracing (OpenTelemetry), structured logging |
| Security | Built-in | JWT auth (HTTP), mTLS (gRPC), tenant isolation, secret masking |

Channels deserve special mention: PlexSpaces auto-selects the best available backend using a priority chain (Kafka -> NATS -> SQS -> ProcessGroup -> InMemory). Start developing with in-memory channels, deploy to production with Kafka without code changes. Actors using non-memory channels also support graceful shutdown: they stop accepting new messages but complete in-progress work.
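The priority chain boils down to "first available backend wins". A rough sketch of that selection logic follows; the lowercase backend identifiers and the `select_backend` function are made up for illustration, and how PlexSpaces actually probes for availability is not covered here.

```python
from typing import Iterable, Set

# Priority order taken from the chain described above, highest first.
# The identifiers themselves are hypothetical.
PRIORITY_CHAIN = ["kafka", "nats", "sqs", "process_group", "in_memory"]


def select_backend(available: Iterable[str]) -> str:
    """Return the highest-priority backend that is available."""
    available_set: Set[str] = set(available)
    for backend in PRIORITY_CHAIN:
        if backend in available_set:
            return backend
    raise LookupError("no channel backend available")


laptop = select_backend(["in_memory"])
production = select_backend(["in_memory", "sqs", "kafka"])
```

The actor code never names a backend, which is why the same module can move from a laptop to a Kafka-backed cluster unchanged.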

Multi-Tenancy: Enterprise-Grade Isolation

PlexSpaces enforces two-level tenant isolation. The tenant_id comes from JWT tokens (HTTP) or mTLS certificates (gRPC). The namespace provides sub-tenant isolation for environments/applications. All queries filter by tenant automatically at the repository layer. This gives you secure multi-tenant deployments without trusting application code to enforce boundaries.
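Filtering at the repository layer means application code cannot forget the tenant check. The sketch below shows the idea with an in-memory store; `RequestContext` and `TenantScopedRepository` are hypothetical names, and the context is assumed to be built from an already verified JWT or mTLS certificate.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class RequestContext:
    tenant_id: str   # assumed to come from a verified JWT or mTLS cert
    namespace: str   # sub-tenant scope, e.g. an environment or application


class TenantScopedRepository:
    """Every row is keyed by (tenant_id, namespace, key) inside the repository."""

    def __init__(self) -> None:
        self._rows: Dict[Tuple[str, str, str], str] = {}

    def put(self, ctx: RequestContext, key: str, value: str) -> None:
        self._rows[(ctx.tenant_id, ctx.namespace, key)] = value

    def get(self, ctx: RequestContext, key: str) -> Optional[str]:
        return self._rows.get((ctx.tenant_id, ctx.namespace, key))

    def keys(self, ctx: RequestContext) -> List[str]:
        # Listing is filtered too, so callers never see other tenants' keys.
        return sorted(k for (t, n, k) in self._rows
                      if (t, n) == (ctx.tenant_id, ctx.namespace))


repo = TenantScopedRepository()
acme_prod = RequestContext("acme", "prod")
globex_prod = RequestContext("globex", "prod")
repo.put(acme_prod, "refund:42", "processed")
repo.put(globex_prod, "refund:42", "pending")
```

Two tenants can use the same logical key without colliding, and there is no repository method that accepts a key without a context.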

Example: Payment Processing with Built-In Services

```python
import json

from plexspaces import actor, handler, host

@actor(facets=["durability", "metrics"])
class PaymentProcessor:
    @handler("process_refund")
    def process_refund(self, tx_id: str, amount: int) -> dict:
        # Distributed lock prevents duplicate refunds
        lock_version = host.lock_acquire(f"refund:{tx_id}", 5000)
        if not lock_version:
            return {"error": "could_not_acquire_lock"}
        try:
            # Store refund record in built-in key-value store
            host.kv_put(f"refund:{tx_id}", json.dumps({
                "amount": amount, "status": "processed"
            }))
            return {"status": "refunded", "amount": amount}
        finally:
            # Always release the lock, even if the write fails
            host.lock_release(f"refund:{tx_id}", lock_version)
```

No Redis cluster to manage. No DynamoDB table to provision. The framework handles it.

Process Groups: Erlang pg2-Style Communication

Process groups provide distributed pub/sub and group messaging, which is one of Erlang’s most powerful patterns. Here’s a chat room that demonstrates joining, broadcasting, and member queries:

```python
from plexspaces import actor, handler, host

@actor
class ChatRoom:
    @handler("join")
    def join_room(self, room_name: str) -> dict:
        actor_id = host.get_actor_id()
        host.process_groups.join(room_name, actor_id)
        return {"status": "joined", "room": room_name}

    @handler("send")
    def send_message(self, room_name: str, text: str) -> dict:
        host.process_groups.publish(room_name, {"text": text})
        return {"status": "sent"}

    @handler("members")
    def get_members(self, room_name: str) -> dict:
        members = host.process_groups.get_members(room_name)
        return {"room": room_name, "members": members}
```

Process groups support topic-based subscriptions and are scoped automatically by tenant_id and namespace.

Polyglot Development: One Server, Many Languages

A single PlexSpaces node hosts actors written in different languages simultaneously: Python ML models, TypeScript webhook handlers, and Rust performance-critical paths all share the same actor runtime, storage services, and observability stack.

The same WASM module deploys anywhere, with no Docker images, no container registries, and no “it works on my machine”:

```bash
# Build and deploy to on-premises
plexspaces-py build ml_model.py -o ml_model.wasm
curl -X POST http://on-prem:8094/api/v1/deploy \
  -F "namespace=prod" -F "actor_type=ml_model" -F "wasm=@ml_model.wasm"

# Deploy to cloud: same command, same binary
curl -X POST http://cloud:8094/api/v1/deploy \
  -F "namespace=prod" -F "actor_type=ml_model" -F "wasm=@ml_model.wasm"
```

Common Patterns

Over three decades, I’ve watched the same architectural patterns emerge at every company and every scale. PlexSpaces supports the most important ones natively.

Durable Workflows with Signals and Queries

Long-running processes with automatic recovery, external signals, and read-only queries — think order fulfillment, onboarding flows, or CI/CD pipelines:

```python
from plexspaces import workflow_actor, state, run_handler, signal_handler, query_handler

@workflow_actor(facets=["durability"])
class OrderWorkflow:
    order_id: str = state(default="")
    status: str = state(default="pending")
    steps_completed: list = state(default_factory=list)

    @run_handler
    def run(self, input_data: dict) -> dict:
        """Main execution: exclusive, one at a time."""
        self.order_id = input_data.get("order_id", "")

        self.status = "validating"
        self.steps_completed.append("validation")

        self.status = "charging"
        self.steps_completed.append("payment")

        self.status = "shipping"
        self.steps_completed.append("shipment")

        self.status = "completed"
        return {"status": "completed", "order_id": self.order_id}

    @signal_handler("cancel")
    def on_cancel(self, data: dict) -> None:
        """External signals can alter workflow state."""
        self.status = "cancelled"

    @query_handler("status")
    def get_status(self) -> dict:
        """Read-only queries can run concurrently with execution."""
        return {"order_id": self.order_id, "status": self.status,
                "steps": self.steps_completed}
```

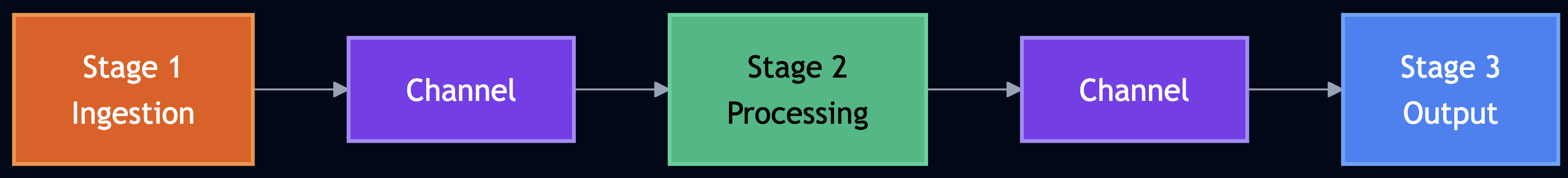

Staged Event-Driven Architecture (SEDA)

Chain processing stages through channels. Each stage runs at its own pace, and channels provide natural backpressure.
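As a rough picture of how bounded channels create backpressure, here is a two-stage pipeline built on the standard library's `queue.Queue` and threads. It is a stand-in for the idea only: PlexSpaces channels and actors are not used, and the `stage` helper and `STOP` sentinel are invented for this sketch.

```python
import queue
import threading

STOP = object()  # sentinel that shuts a stage down


def stage(inbox: queue.Queue, outbox: queue.Queue, transform) -> threading.Thread:
    def run() -> None:
        while True:
            item = inbox.get()
            if item is STOP:
                outbox.put(STOP)  # propagate shutdown downstream
                return
            outbox.put(transform(item))  # blocks while outbox is full

    worker = threading.Thread(target=run, daemon=True)
    worker.start()
    return worker


# Small bounded queues: a slow stage makes upstream put() calls block.
raw, parsed, priced = (queue.Queue(maxsize=2) for _ in range(3))
workers = [
    stage(raw, parsed, lambda text: int(text)),
    stage(parsed, priced, lambda quantity: quantity * 10),
]


def produce() -> None:
    for text in ["1", "2", "3", "4", "5"]:
        raw.put(text)
    raw.put(STOP)


threading.Thread(target=produce, daemon=True).start()

results = []
while (item := priced.get()) is not STOP:
    results.append(item)
for worker in workers:
    worker.join()
```

With `maxsize=2`, the producer can never run more than a couple of items ahead of the slowest stage, so memory stays bounded without any explicit rate limiting.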

Leader Election

Distributed locks elect a leader with lease-based failover. The leader holds a lock and renews it periodically. If the leader crashes, the lease expires and another candidate acquires leadership:

```python
import json

from plexspaces import actor, state, handler, host

@actor
class LeaderElection:
    candidate_id: str = state(default="")
    lock_version: str = state(default="")

    @handler("try_lead")
    def try_lead(self, candidate_id: str = None) -> dict:
        holder_id = candidate_id or self.candidate_id
        # Try to take the "leader" lock with a 30-second lease
        result = host.lock_acquire("", "leader-election", holder_id, "leader", 30, 0)
        if result and not result.startswith("ERROR"):
            self.lock_version = json.loads(result).get("version", result)
            return {"leader": True, "candidate_id": holder_id}
        return {"leader": False}
```
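The lease mechanics behind this pattern are easy to model in one process. The sketch below is a stand-in for a distributed lock, with an injected clock so expiry is deterministic; `LeaseLock` is a hypothetical class, and a real implementation needs an atomic compare-and-set in the backing store (and ideally fencing tokens) rather than a local object.

```python
import time
from typing import Callable, Optional


class LeaseLock:
    """Single-process stand-in for a distributed lease lock."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._holder: Optional[str] = None
        self._expires_at = 0.0

    def acquire(self, candidate: str, lease_s: float) -> bool:
        now = self._clock()
        expired = now >= self._expires_at
        # Grant when free, expired, or already held by this candidate (renewal).
        if self._holder is None or expired or self._holder == candidate:
            self._holder = candidate
            self._expires_at = now + lease_s
            return True
        return False

    def leader(self) -> Optional[str]:
        return self._holder if self._clock() < self._expires_at else None


now = [0.0]
lock = LeaseLock(clock=lambda: now[0])
a_wins = lock.acquire("node-a", lease_s=30)     # node-a becomes leader
b_blocked = lock.acquire("node-b", lease_s=30)  # lease still held by node-a
now[0] = 20.0
a_renews = lock.acquire("node-a", lease_s=30)   # lease now runs until t=50
now[0] = 51.0                                   # node-a crashed, never renewed
b_wins = lock.acquire("node-b", lease_s=30)     # lease expired, node-b takes over
```

The lease length sets the failover time: a shorter lease detects a dead leader sooner but requires more frequent renewals.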

Resource-Based Affinity

Label actors with hardware requirements (gpu: true, memory: high) and PlexSpaces schedules them on matching nodes. This maps naturally to ML training pipelines where different stages need different hardware.
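Label matching is simple enough to show directly. This sketch assumes string-valued labels and a first-fit placement rule; `matches` and `schedule` are hypothetical helpers, and the actual PlexSpaces scheduler may weigh load or other factors that are not modeled here.

```python
from typing import Dict, Optional

Labels = Dict[str, str]


def matches(requirements: Labels, node_labels: Labels) -> bool:
    # A node qualifies when it carries every required label with the same value.
    return all(node_labels.get(k) == v for k, v in requirements.items())


def schedule(requirements: Labels, nodes: Dict[str, Labels]) -> Optional[str]:
    """Return the first node (by name) that satisfies the requirements."""
    for name in sorted(nodes):
        if matches(requirements, nodes[name]):
            return name
    return None


nodes = {
    "node-cpu": {"gpu": "false", "memory": "standard"},
    "node-gpu": {"gpu": "true", "memory": "high"},
}
trainer = schedule({"gpu": "true", "memory": "high"}, nodes)
preprocessor = schedule({"gpu": "false"}, nodes)
unplaceable = schedule({"gpu": "true", "memory": "standard"}, nodes)
```

Returning `None` for an unplaceable actor makes the failure explicit, so the caller can queue the work or surface an error instead of silently running on the wrong hardware.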

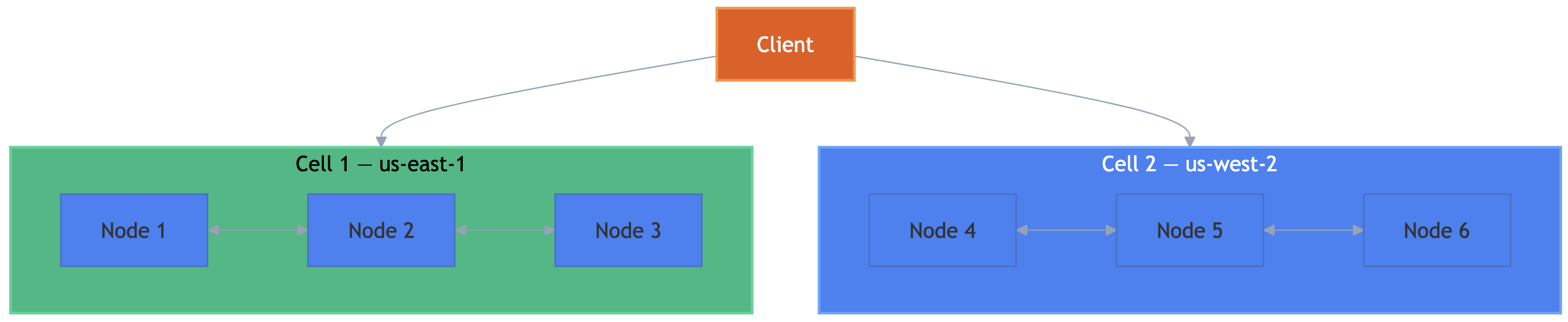

Cellular Architecture

PlexSpaces organizes nodes into cells using the SWIM protocol (gossip-based node discovery). Cells provide fault isolation, geographic distribution, and low-latency routing to the nearest cell. Nodes within a cell share channels via the cluster_name configuration, enabling UDP multicast for low-latency cluster-wide messaging.

How PlexSpaces Compares

PlexSpaces doesn’t replace any single framework; it unifies patterns from many. Here’s what it borrows from each, and what limitation of each it addresses:

| Framework | What PlexSpaces Borrows | Limitation PlexSpaces Addresses |
|---|---|---|
| Erlang/OTP | GenServer, supervision, “let it crash” | BEAM-only; no polyglot WASM |
| Akka | Actor model, message passing | No longer open source; JVM-only |
| Orleans | Virtual actors, grain lifecycle | .NET-only; no tuple spaces or HPC |
| Temporal | Durable workflows, replay | Requires separate server infrastructure |
| Restate | Durable execution, journaling | No full actor model; no HPC patterns |
| Ray | Distributed ML, parameter servers | Python-centric; no durable execution |
| AWS Lambda | Serverless invocation, auto-scaling | Vendor lock-in; no local dev parity |
| Azure Durable Functions | Durable orchestration | Azure-only; limited language support |
| Golem Cloud | WASM-based durability | No built-in storage/messaging/locks |
| Dapr | Sidecar service mesh, virtual actors | Extra networking hop; state management limits |

Key Differentiators

- No service mesh: Built-in observability, security, and throttling eliminate the extra networking hop

- Local-first: Same code runs on your laptop and in production. No cloud-only surprises.

- Polyglot via WASM: Write actors in Python, Rust, TypeScript. Same deployment model.

- Batteries included: KV store, blob storage, locks, channels, process groups — all built in

- One abstraction: Composable facets on a unified actor, not a zoo of specialized types

- Application server model: Deploy multiple polyglot applications to a single node

- Research-grade + production-ready: Linda tuple spaces, MPI patterns, and Erlang supervision in a single framework

Getting Started

Install and Run

```bash
# Docker (fastest)
docker run -p 8080:8080 -p 8000:8000 -p 8001:8001 plexspaces/node:latest

# From source
git clone https://github.com/bhatti/PlexSpaces.git
cd PlexSpaces && make build
```

Write -> Build -> Deploy -> Invoke

```python
# greeter.py
from plexspaces import actor, state, handler

@actor
class GreeterActor:
    greetings_count: int = state(default=0)

    @handler("greet")
    def greet(self, name: str = "World") -> dict:
        self.greetings_count += 1
        return {"message": f"Hello, {name}!", "total": self.greetings_count}
```

```bash
plexspaces-py build greeter.py -o greeter.wasm

curl -X POST http://localhost:8094/api/v1/deploy \
  -F "namespace=default" -F "actor_type=greeter" -F "wasm=@greeter.wasm"

curl -X POST "http://localhost:8080/api/v1/actors/default/default/greeter?invocation=call" \
  -H "Content-Type: application/json" -d '{"action":"greet","name":"PlexSpaces"}'
# => {"message": "Hello, PlexSpaces!", "total": 1}
```

Explore more in the examples directory: bank accounts with durability, task queues with distributed locks, leader election, chat rooms with process groups, and more.

Lessons Learned

After decades of distributed systems, I keep returning to the same truths:

- Efficiency matters. Respect the transport layer. Binary protocols with schemas outperform JSON for high-throughput systems.

- Contracts prevent chaos. Define APIs before implementations. Generate code from schemas.

- Simplicity defeats complexity. Every framework that collapsed — EJB, SOAP, CORBA — did so under the weight of accidental complexity. One powerful abstraction beats ten specialized ones.

- Developer experience decides adoption. If your framework requires 100 lines of boilerplate for a counter, developers will choose the one that needs 15.

- Local and production must match. Every bug I’ve seen that “only happens in production” stemmed from environmental differences.

- Cross-cutting concerns belong in the platform. Scatter them across codebases and you get inconsistency. Centralize them in a service mesh and you get latency. Build them in.

- Multiple coordination primitives solve multiple problems. Actors handle request-reply. Channels handle pub/sub. Tuple spaces handle coordination. Process groups handle broadcast. Real systems need all of them.

The distributed systems landscape keeps changing: WASM is maturing, AI agents are creating new coordination challenges, and enterprises are pushing back on vendor lock-in harder than ever. I believe the next generation of frameworks will converge on the patterns PlexSpaces brings together: polyglot runtimes, durable actors, built-in infrastructure, and local-first deployment. PlexSpaces distills years of lessons into a single framework. It’s the framework I wished existed at every company I’ve worked for, one that handles the infrastructure so I can focus on the problem.

PlexSpaces is open source at github.com/bhatti/PlexSpaces. Try the counter example and provide your feedback.